Markup AI plugs into your tools and workflows (LLMs, CMS, Zapier, etc.) to automatically check every piece of content against your custom guidelines, flag issues, and provide on-brand rewrites at scale.

Hey Adopter,

Nine out of ten companies claim they trust AI to generate content. Then they manually review every single piece before publishing.

Something isn’t adding up.

I sat down with Matt Blumberg, CEO of Markup AI and a veteran of four technology companies over 30 years, to dig into this contradiction. You can watch our full conversation in the video above.

A recent survey from Markup AI of 266 C-suite and marketing leaders found that 92% of organisations use significantly more AI for content than a year ago. Half of all enterprise content now involves generative AI in some capacity. The engines are running.

The problem? Human review has become the new choke point. Eighty percent of organisations still rely on manual checks or spot reviews to verify AI output. Content piles up. Review cycles stretch. Teams wait.

Matt put it bluntly during our conversation:

“It’s a Ferrari with bicycle brakes.”

The trust gap nobody talks about

Here’s the uncomfortable truth. Ninety-seven percent of leaders believe AI models can check their own work. But when it’s time to hit publish, they don’t act like it.

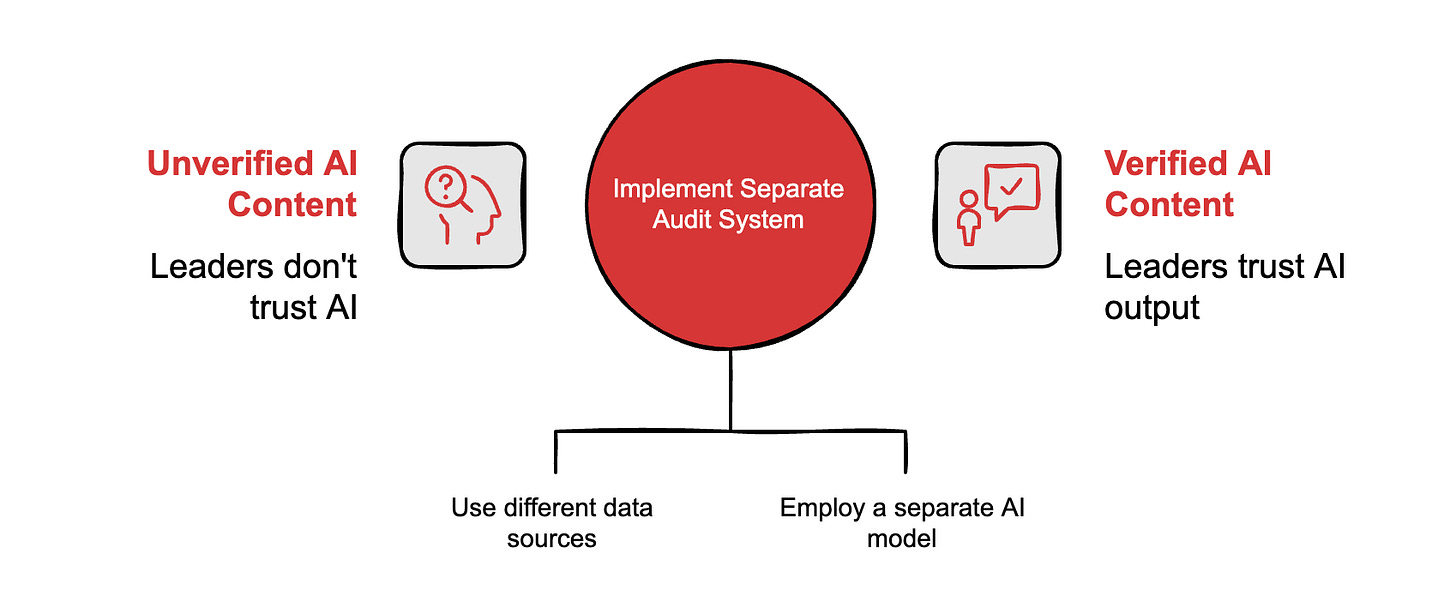

Matt explained the core issue in our conversation. Large language models are predictive machines. They don’t verify facts against reality; they generate probable text based on patterns. “Ask ChatGPT to correct its own hallucination,” he said, “and it says ‘you’re absolutely right’ and absorbs your correction. It can’t check its own output.”

One system can’t create content and audit that content at the same time. The inputs that shaped the output are the same inputs that would evaluate it. You need a separate system with different inputs and a different architecture.

This isn’t a minor technical point. It’s the reason your content teams are stuck.

Who owns this problem, exactly?

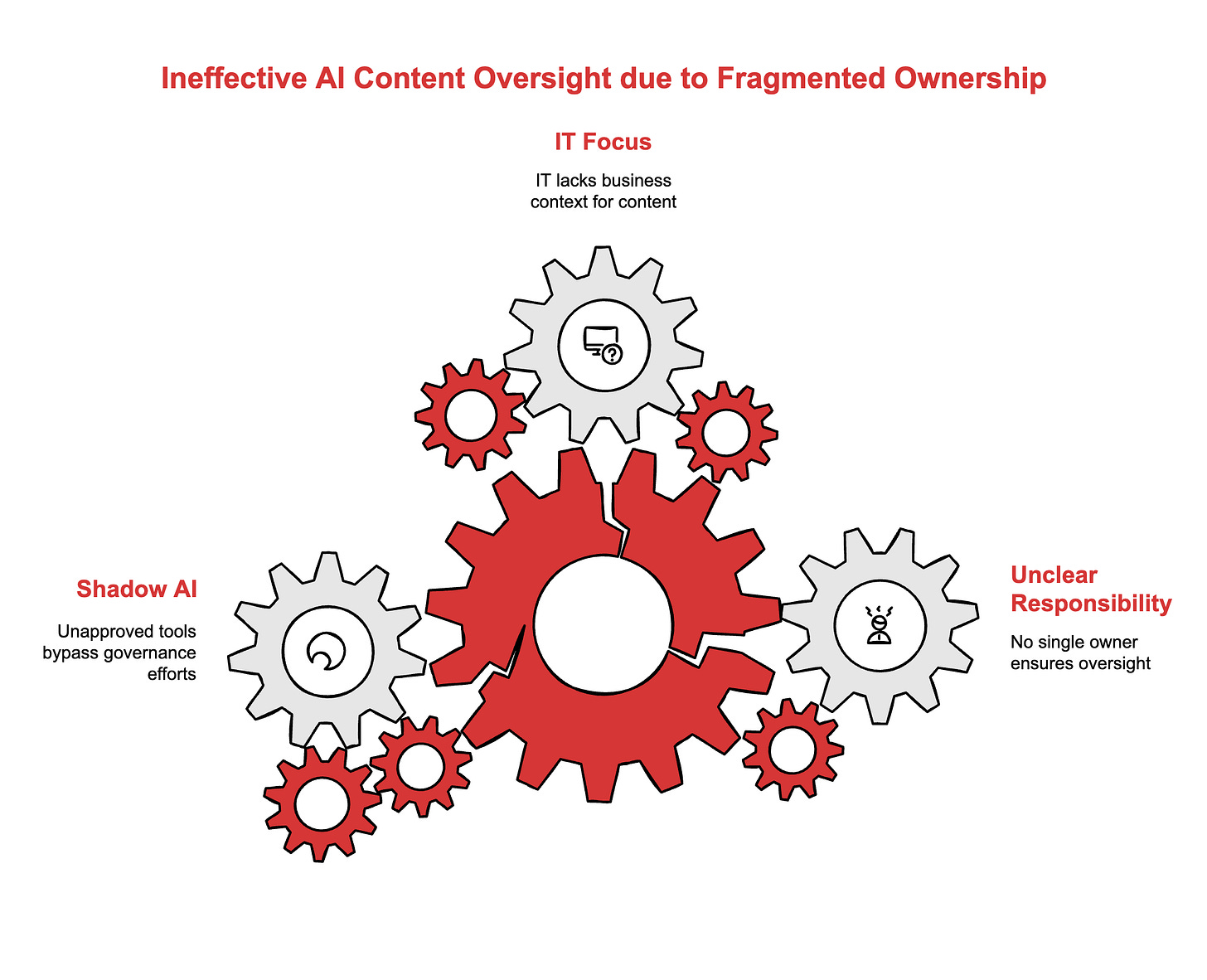

The survey revealed fragmented ownership. Forty percent say IT is primarily responsible for AI content oversight. Thirty percent say marketing. Twelve percent point to an AI committee. Nine percent name compliance. Two percent say legal.

Only eight percent view it as a shared responsibility.

When I asked Matt what breaks when nobody owns the oversight, his answer was direct. “If nobody owns it, it doesn’t get done. If everybody owns it, nothing gets done. Everyone thinks someone else is handling it.”

He made another point that stuck with me. IT typically steps in because they understand the technology. But they don’t live in the business the way content creators do. They don’t know the brand voice nuances or regulatory requirements that a compliance officer would flag in two seconds.

Meanwhile, employees aren’t waiting for permission. Seventy-nine percent of organisations admit their teams use multiple LLMs or unapproved AI tools. Shadow AI is already fragmenting whatever governance you thought you had.

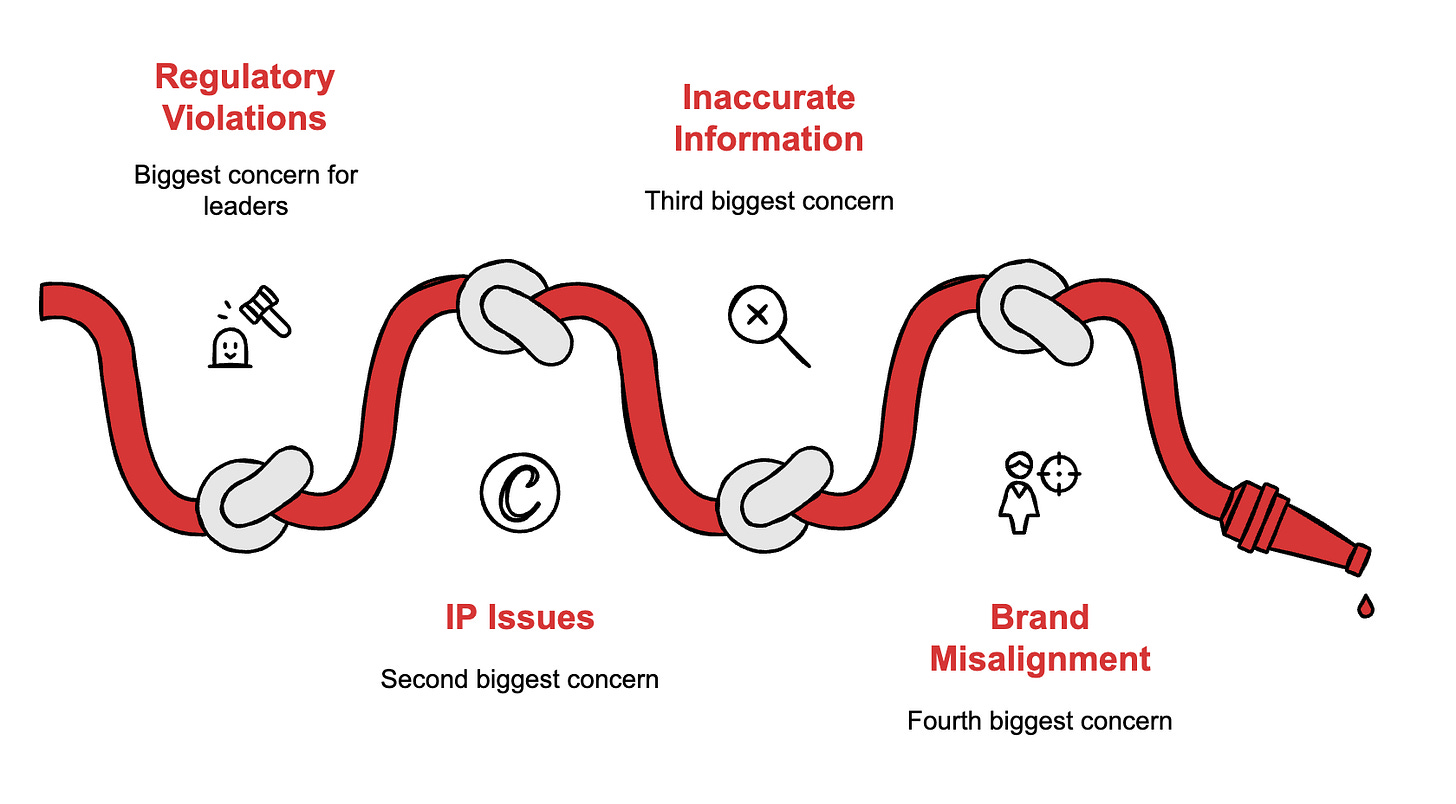

What leaders actually worry about

When asked about their biggest concerns with AI-generated content, leaders ranked regulatory violations first at 51%, followed by intellectual property issues at 47%, inaccurate information at 46%, and brand misalignment at 41%.

Fifty-seven percent report their organisation faces moderate to high risk from unsafe AI content today.

One hallucinated statistic in a press release. One compliance violation in a product description. One off-brand message in a customer email. The speed that AI delivers can become the speed at which your reputation unravels.

A different kind of AI for a different job

Gartner’s head of research coined a term that’s gaining traction: Guardian Agents. The idea is simple. The only thing fast enough to monitor AI at scale is AI. But not the same AI.

A Guardian Agent is a separate system, purpose-built to check content against brand standards, compliance rules, and accuracy requirements. It doesn’t generate content. It evaluates it.

Matt walked me through how his company built Content Guardian Agents. Upload your brand guidelines and terminology dictionary. Connect the system through APIs or browser plugins. When content flows through, the agent scores it, flags risks, and then either rewrites it or routes it to human review. Only the exceptions reach a person.

“You can configure it in about five minutes,” he told me. “Anytime a new document shows up in a repository, whether that’s GitHub, SharePoint, Google Drive, whatever your content system is, it checks the document and shoots the author comments in Slack flagging high-risk problems.”

That last part matters. Human in the loop was always meant for exceptions, not every piece of content. Otherwise, why bother with AI at all?
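To make the score, flag, then rewrite-or-route idea concrete, here’s a toy sketch in Python. It is not Markup AI’s implementation or API. Every name in it (`Review`, `review`, `TERMINOLOGY`, `HIGH_RISK_PHRASES`) and both rule lists are assumptions I made up for illustration, and the scoring weights are arbitrary.

```python
import re
from dataclasses import dataclass

# Hypothetical rules, invented for this sketch. A real deployment would
# load these from your own brand guidelines and compliance policies.
TERMINOLOGY = {"utilize": "use", "e-mail": "email"}       # banned -> preferred
HIGH_RISK_PHRASES = ["guaranteed returns", "clinically proven"]


@dataclass
class Review:
    score: float      # 1.0 means no issues found; lower means more issues
    flags: list       # human-readable descriptions of each issue
    action: str       # "publish", "rewrite", or "human_review"
    text: str         # the content to use (rewritten only if action == "rewrite")


def review(content: str) -> Review:
    flags = []
    rewritten = content

    # Low-risk issues: off-brand terminology that is safe to fix automatically.
    term_hits = 0
    for banned, preferred in TERMINOLOGY.items():
        pattern = re.compile(rf"\b{re.escape(banned)}\b", re.IGNORECASE)
        if pattern.search(rewritten):
            term_hits += 1
            flags.append(f"terminology: '{banned}' -> '{preferred}'")
            rewritten = pattern.sub(preferred, rewritten)

    # High-risk issues: claims a person should look at before publishing.
    risky = [p for p in HIGH_RISK_PHRASES if p in content.lower()]
    flags.extend(f"high-risk: '{p}'" for p in risky)

    # Arbitrary weights: small penalty per terminology hit, large per risky claim.
    score = max(0.0, 1.0 - 0.1 * term_hits - 0.5 * len(risky))

    if risky:
        # Exceptions only: leave the text untouched and escalate to a human.
        return Review(score, flags, "human_review", content)
    if flags:
        return Review(score, flags, "rewrite", rewritten)
    return Review(score, flags, "publish", content)


if __name__ == "__main__":
    for draft in [
        "Please utilize the new e-mail template.",
        "Our fund offers guaranteed returns.",
        "Hello team.",
    ]:
        result = review(draft)
        print(result.action, "|", result.text, "|", result.flags)
```

The point of the sketch is the routing, not the rules. Routine fixes get applied automatically, clean content passes straight through, and only the risky drafts land in front of a person.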

Want to see Content Guardian Agents in action? Markup AI is currently in early access mode. You can grab an API key and start testing for free at markup.ai.

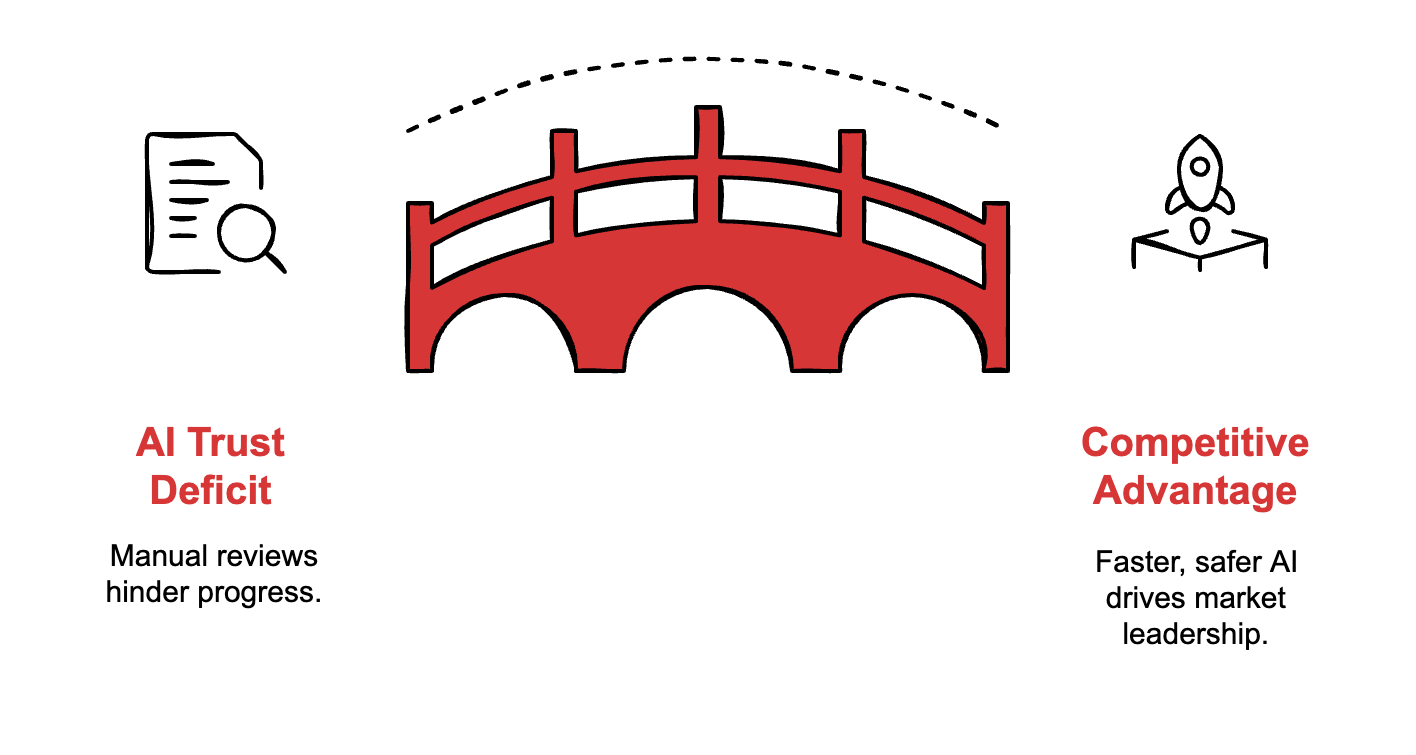

Governance as competitive advantage

I asked Matt what his experience across four companies taught him about AI governance. His answer surprised me.

“Companies that build quality in upfront win in the long run. It’s always harder to retrofit trust later. Once the genie is out of the bottle, you’re scrambling.”

He compared it to the early days of email marketing. Companies that did permission marketing instead of spam, that prioritised quality from the start, were the ones still standing a decade later. The burn-and-churn operators flamed out.

AI content will follow the same pattern. The organisations that figure out governance now, while everyone else is still manually reviewing everything, will move faster and safer than their competitors.

Gartner predicts that forty percent of CIOs will demand Guardian Agents within two years. The question isn’t whether this becomes standard. It’s whether you’re ahead of the curve or playing catch-up.

Your AI can write content. The question is who’s making sure it’s right.

Watch the full conversation with Matt in the video above to hear more about the technology adoption curve, why security will be the next frontier for Guardian Agents, and what enterprises need to codify before any of this works.

Adapt & Create,

Kamil
