Hey Adopter,

I’m writing this from a hotel room in Charleston, South Carolina. It’s colder here than I expected.

Yesterday I ran a presentation for a company that’s asking the right questions. They’re not chasing the latest AI tools. They’re focused on human behavior. On culture change. On how their people need to adapt to get the most out of this technological shift.

Halfway through, I showed them a single research finding. The room went quiet.

Your expertise doesn’t predict your AI performance.

I watched faces shift. Some looked uncomfortable. Others looked relieved. Because if the problem isn’t your knowledge or your experience, then you actually have something specific to fix.

That’s what I want to talk about today.

OpenAI finally said it out loud

Last week, OpenAI released a report called Ending the Capability Overhang. The capability overhang is their term for the gap between what AI can do and what most people are actually getting out of it.

The tools are ready. The humans aren’t.

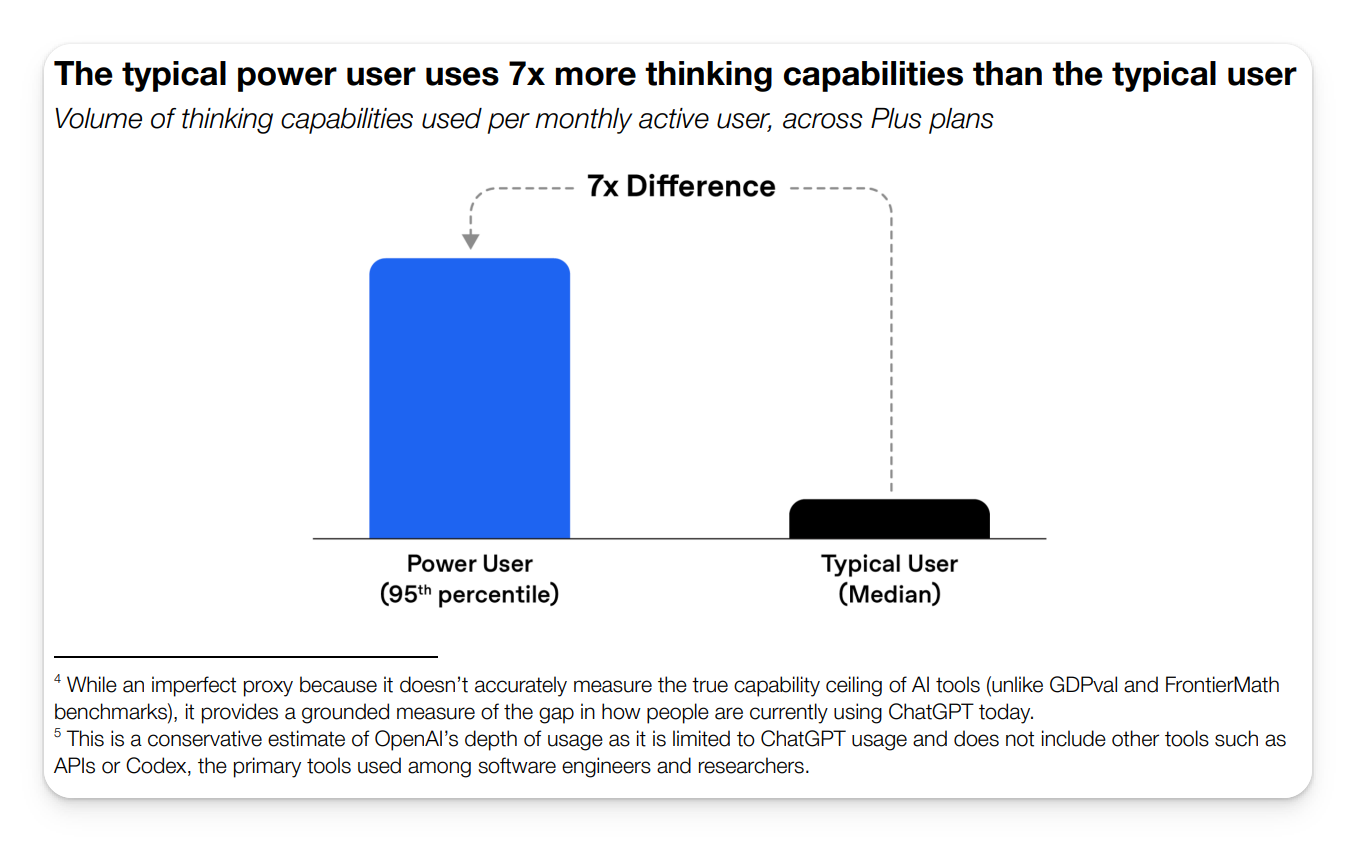

Their data shows power users extract roughly six to eight times more value from the same AI tools as typical users. Same subscription. Same model. Wildly different results.

This isn’t about using AI more. It’s about using it differently.

The research that changes how you think about AI skills

Researchers at Northeastern University and UCL tested 667 people. They measured performance alone, then performance with AI assistance.

The finding that should make you uncomfortable: being good at your job didn’t make people good at working with AI.

Some average performers saw massive gains when AI joined them. Some top experts barely improved at all. Years of experience, advanced degrees, deep domain knowledge: none of it predicted who would benefit most.

The people who got results weren’t smarter. They weren’t more senior. They were doing something different.

What the winners did differently

The researchers found one habit that separated high-gainers from everyone else. They called it Theory of Mind. The ability to step into another perspective.

In practice, it looked like three things.

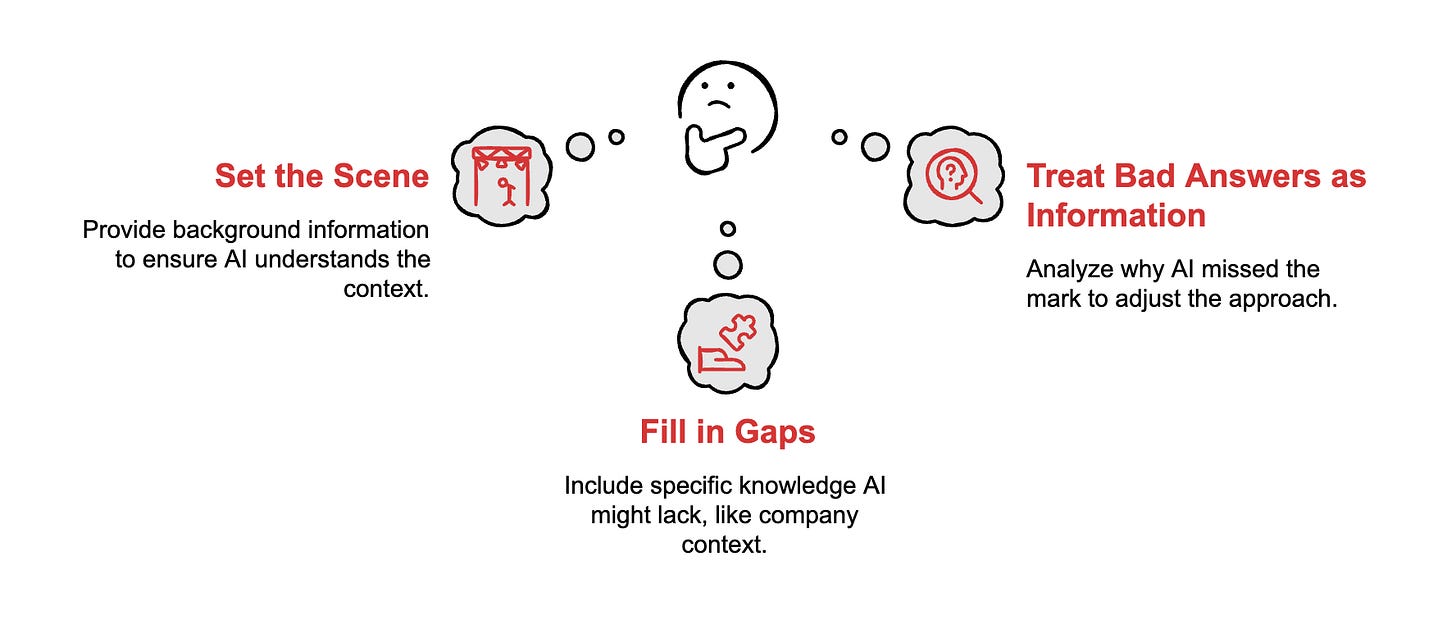

They set the scene. Before asking anything, they gave background. Who they are, what they’re working on, who the output is for.

They filled in gaps. They asked themselves: what do I know that the AI doesn’t? Then they included it. Company context, internal jargon, what they’d already tried.

They treated bad answers as information. When the AI missed the mark, they didn’t just rephrase and retry. They figured out why it missed. Did it misunderstand the goal? Lack a constraint? Then they adjusted.

This is what separates someone who uses AI from someone who collaborates with it.

Build your Human API

I call this skill your Human API.

An API is an interface. It’s how one system talks to another. Your Human API is how well you translate what’s in your head (your expertise, your context, your judgment) into something AI can actually work with.

Your expertise is table stakes. The multiplier now is how clearly you communicate with the machine.

And unlike your IQ, unlike your years of experience, this is a skill you can build.

I recorded a short video walkthrough of this concept. It covers the research, the three-question protocol, and what this means for your work. Watch it here if you want the full breakdown.

The 10-second protocol

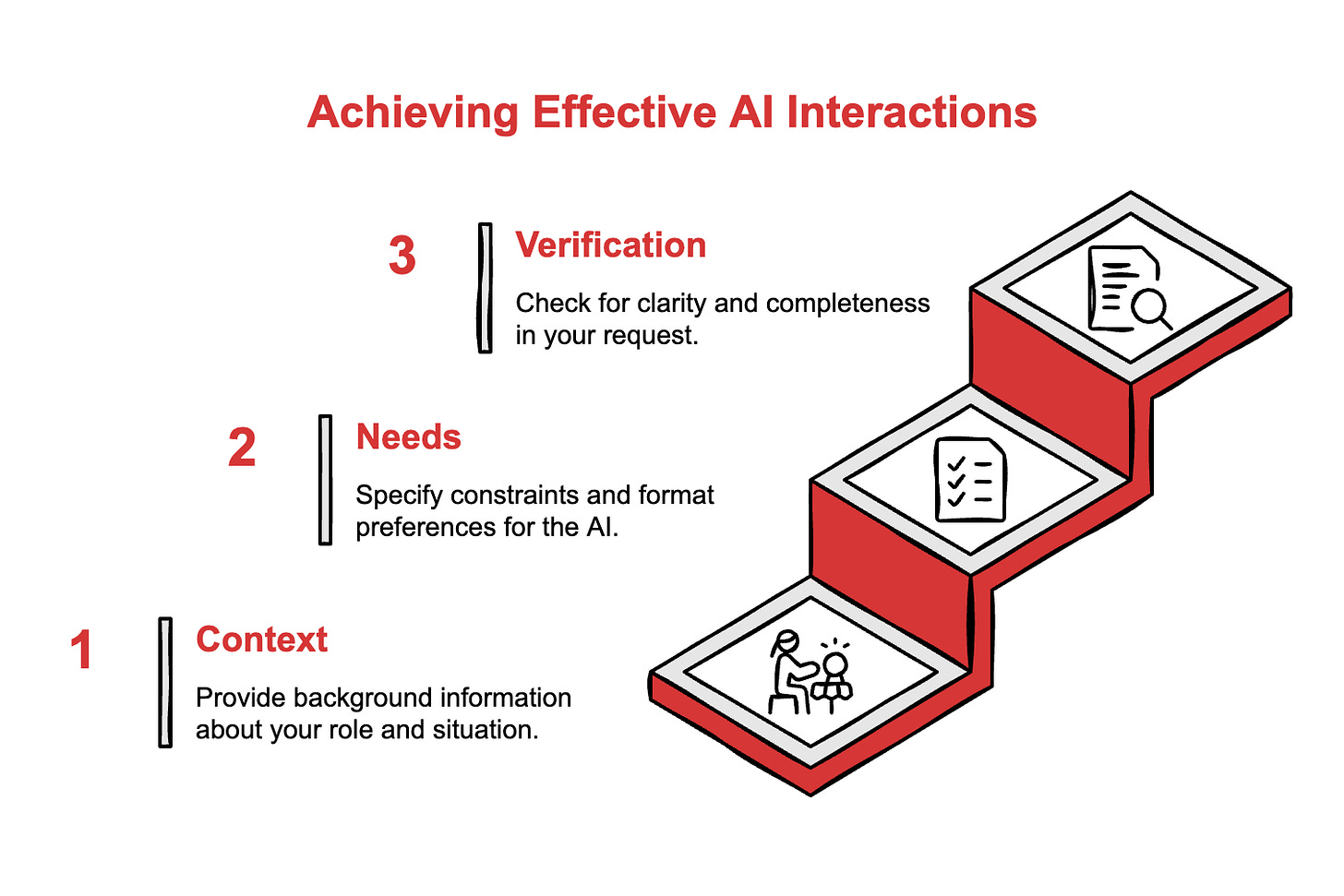

Before any important AI request, run this checklist. Takes ten seconds.

Context. What am I holding that the AI doesn’t have? Your role. The situation. What you’ve already tried. Who the output is for.

Needs. What does the AI need to know to give me something useful? Your constraints. Format preferences. What “good” actually looks like.

Verification. If this answer misses the mark, what will I check first? Did I explain the goal clearly? Did I leave out something obvious to me but invisible to the machine?

Three questions. Ten seconds. Changes every interaction.

Two prompts to build your Human API

I want you to try something before you close this email. Here are two prompts you can use today.

Prompt 1: Diagnose your Human API

Copy and paste this into ChatGPT or Claude:

I want to evaluate how effectively I communicate with AI tools, what I call my "Human API."

If you have memory of our past conversations, analyze the patterns you've observed:

- How clearly do I typically provide context about my role, situation, and goals?

- Do I explain constraints and success criteria, or leave you guessing?

- When outputs miss the mark, do I diagnose what went wrong or just rephrase the same question?

- What context do I consistently forget to share that would help you help me?

If you don't have memory of our past interactions, interview me instead:

- Ask me 5-6 questions about how I typically use AI tools

- Probe for specific examples of recent interactions

- Then diagnose where my Human API breaks down

End with three specific recommendations for improving how I communicate with AI, ranked by impact.

Prompt 2: Build your Human API profile

After you run the diagnostic, use this to create something you can reuse:

Help me build my "Human API," a profile that captures how I work so AI tools can serve me better.

Interview me with 5-6 focused questions covering:

- My role and what I'm accountable for

- The domain expertise I bring: what I know that most people don't

- My most common tasks where AI could help

- How I prefer to receive information: format, length, tone

- What "good work" looks like in my context

After the interview, produce a one-page "Human API Profile" I can paste into future AI conversations or save as custom instructions. Keep it concrete and usable, not generic.

Start with your first question.

Why this matters now

The winners of the Intelligence Age won’t just be the smartest people in the room. They won’t be the ones with the most credentials or the longest resumes.

They’ll be the ones who communicate best with the machine.

OpenAI knows this. The research confirms it. And the companies asking the right questions are already acting on it.

Build your Human API. Start now.

Adapt & Create,

Kamil