When leadership says “go” but means “figure it out yourself”

Why AI adoption stalls even when everyone agrees it’s necessary

Hey Adopter,

This piece will help you identify whether your AI initiative is heading toward slow suffocation before it’s too late.

You’ll learn the difference between leadership enthusiasm and leadership commitment, why contradictory signals from the top guarantee fragmented adoption, and the two questions that reveal whether your organisation is set up for AI success or quiet failure.

If you’re a senior executive, this will show you what your team actually experiences when you approve AI “in principle.” If you’re championing AI from inside, this will give you language to explain why things aren’t working despite everyone’s good intentions.

I want to hear from you

Are you the executive in this story, or the internal champion?

Hit reply and tell me which one. I read every response.

The slow suffocation

A national construction firm hired me to help them adopt AI. Leadership was convinced they needed it. They were right. Their competitors were moving fast, and their own IT infrastructure, data systems, and processes lagged behind.

Enthusiasm existed at the top. This wasn’t a company resisting change. They wanted AI. They asked for training.

Here’s what they didn’t provide: tools to train on.

Budget wasn’t clearly approved. Different leaders gave different answers about what employees could and couldn’t use. When people asked “what AI tools are we allowed to access,” the response depended entirely on who they asked.

So employees did what employees always do when the official path is unclear. They used their own tools. Shadow AI. Personal ChatGPT accounts. Free trials. Whatever they could access without asking permission.

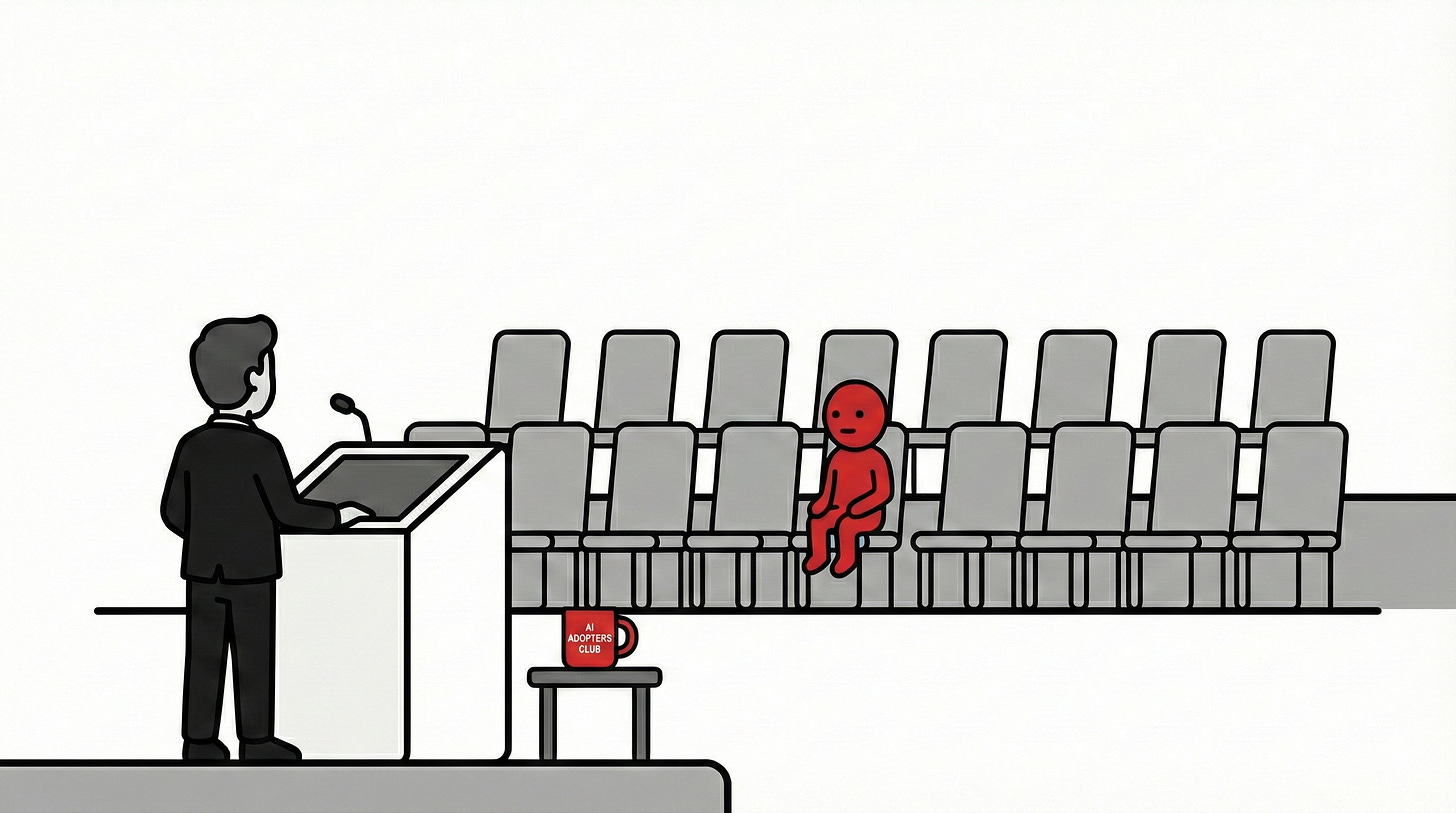

The moment I knew

Two months in, I scheduled a hands-on AI clinic. Sent the invite to 25 people across the organisation.

One person showed up.

Granted, it was a busy time of year. But one out of 25 told me everything I needed to know. This wasn’t about calendars. AI had already become something people avoided. Another obligation. Another unclear initiative that nobody was sure leadership actually supported.

The enthusiasm from the top hadn’t translated into action on the ground. And without clear tools, clear rules, and clear ownership, people had quietly decided this wasn’t worth their time.

What fragmented adoption looks like

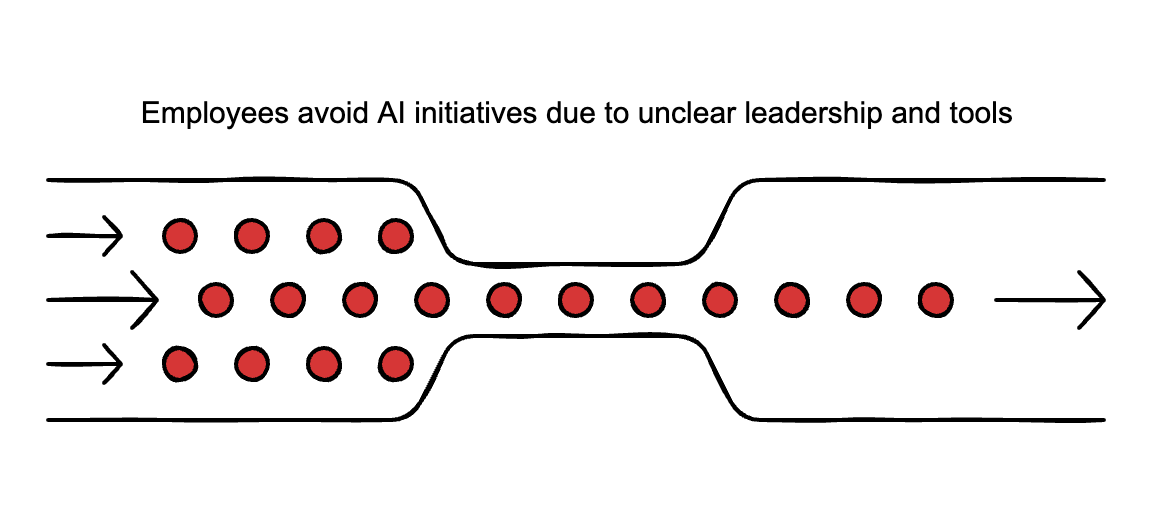

The result wasn’t zero adoption. It was worse. Scattered adoption across silos. Small wins nobody else could see. No shared learning. No visibility into what was working. No consistency in how AI was being used or what data was being processed.

Some teams had figured out useful workflows. But those wins stayed locked inside individual departments. The person in operations using AI to speed up reporting had no idea the person in procurement had built something similar. Duplication everywhere. Momentum nowhere.

Three months in, the initiative had stalled. Not dramatically. Quietly. It slipped to the back burner while everyone waited for someone else to clarify the rules.

The gap between enthusiasm and commitment

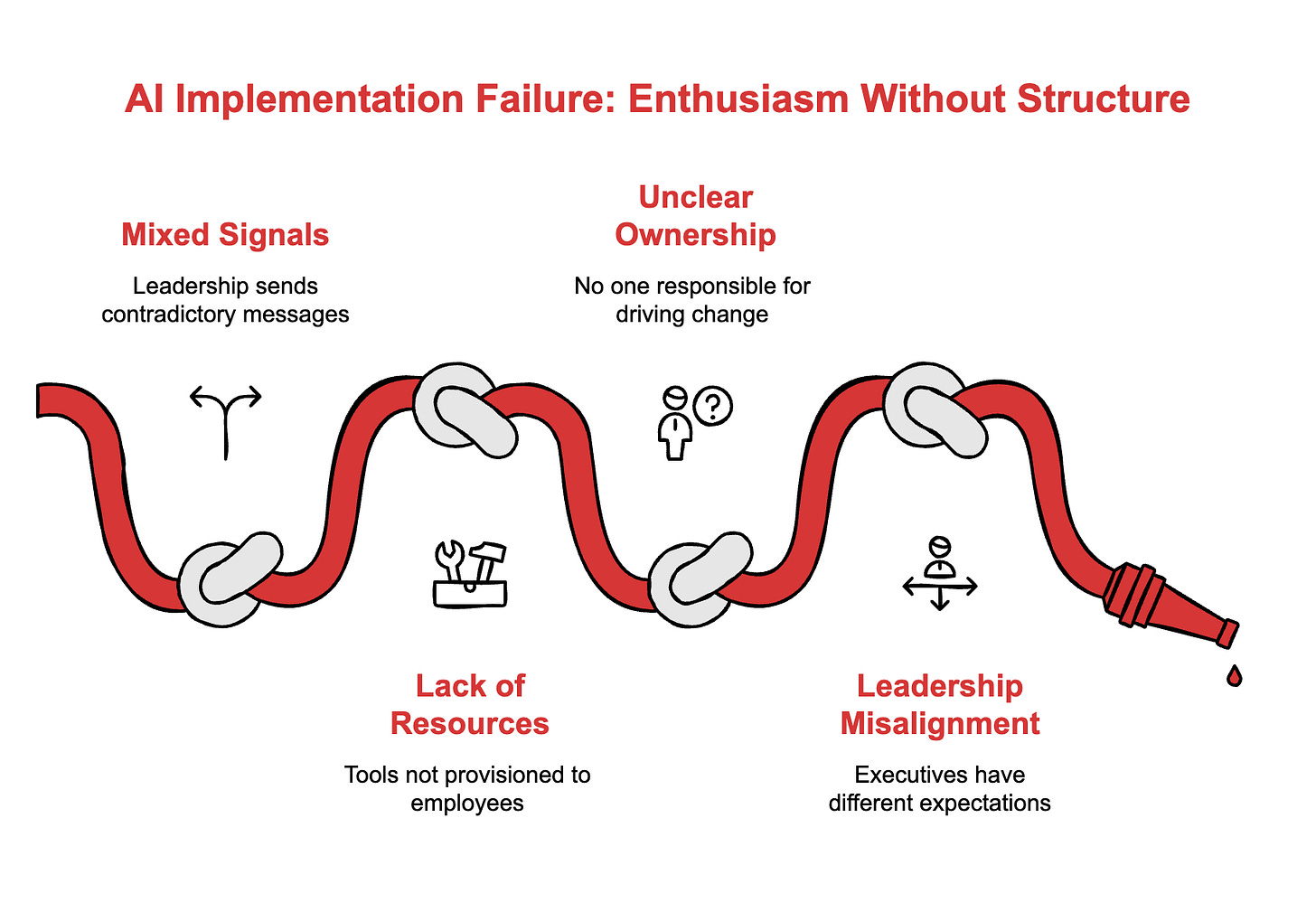

This company didn’t fail because people resisted AI. They failed because leadership gave enthusiasm without structure.

Saying “we need AI” is not the same as approving a budget. Approving a budget is not the same as provisioning tools. Provisioning tools is not the same as establishing clear data governance. And none of it matters without assigning real ownership to people with actual authority to drive change.

63% of organisations cite human factors as a primary challenge in AI implementation. That statistic sounds like it’s about employee resistance. It’s not. It’s about leadership sending mixed signals and expecting adoption to happen anyway.

The construction firm didn’t have a technology problem. They had a leadership alignment problem. Multiple executives with different expectations, different risk tolerances, and different assumptions about who was responsible for what.

You can’t drive change from the outside when the inside is sending contradictory messages. And you can’t train people to use tools they don’t have access to.

What should have happened

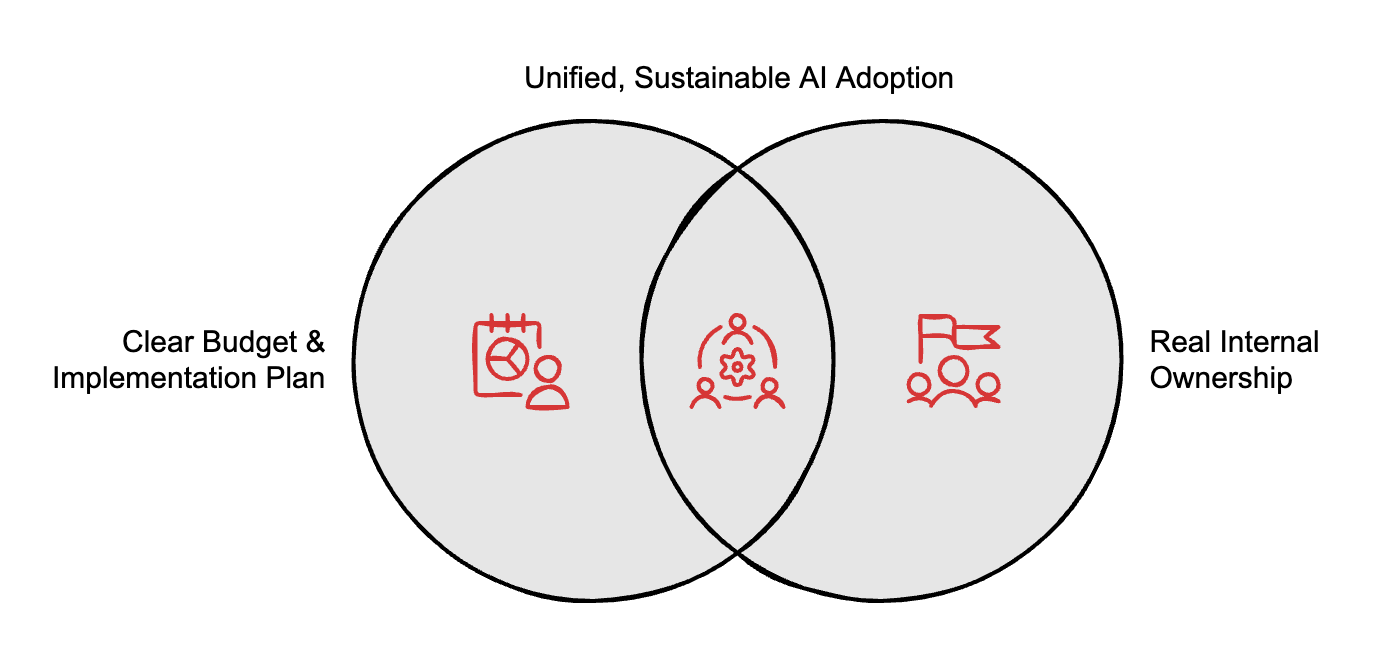

Looking back, two things would have changed everything.

First, a clear budget and implementation plan before training began. Not “we’ll figure out the tools later.” Specific answers to specific questions. What platforms are approved? What’s the monthly cost per seat? What data can and cannot be processed? Who signs off on new tool requests?

Second, real ownership assigned to internal champions. Not just enthusiastic volunteers. People with authority. People whose job descriptions included AI adoption, not people doing it on top of their existing workload. Flag carriers on the inside who could answer questions, surface blockers, and keep momentum when the external advisor wasn’t in the room.

Without these two things, you get fragmentation. You get shadow AI. You get frustration. And eventually, you get an initiative that nobody officially killed but nobody is actually driving.

The pattern behind the pattern

This construction firm isn’t unique. 74% of companies haven’t seen real value from their AI initiatives despite the investment. And 42% of companies abandoned most of their AI initiatives in 2025.

Culture and leadership alignment is just one failure point. Data quality, governance gaps, strategy-reality mismatch, and unclear ROI all kill AI projects too. I’ll dig into each of those in future issues.

But culture comes first because it’s the most invisible. Bad data shows up in reports. Integration failures throw errors. Leadership misalignment just looks like “slow adoption” until you’re three months in and realise nothing is actually moving.

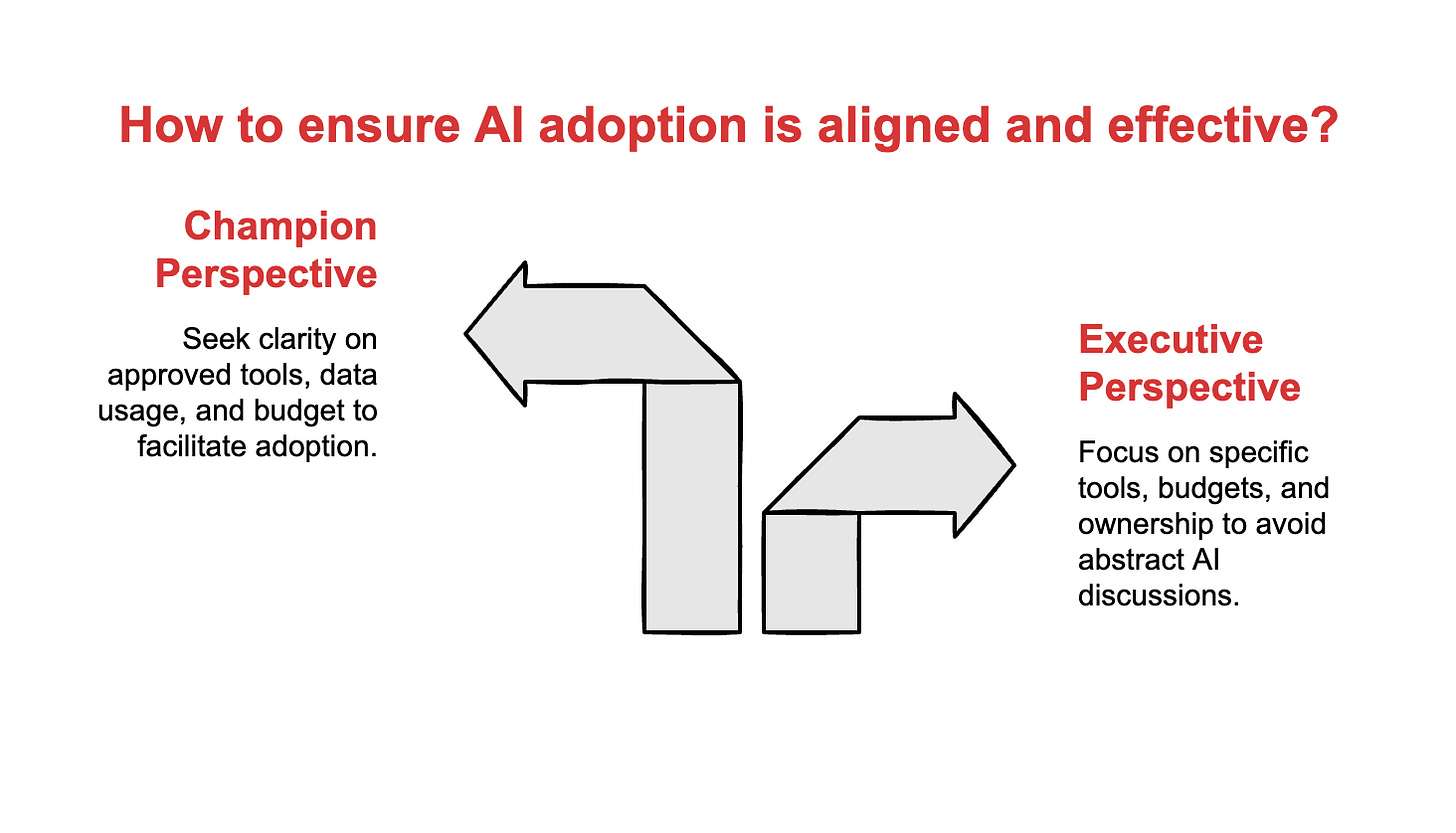

The diagnostic

If you’re a senior executive, ask yourself:

Have we approved specific tools with specific budgets, or just “AI” in the abstract?

If an employee asked three different leaders what AI tools they can use, would they get the same answer?

Who owns AI adoption as part of their actual job, not as a side project?

Do our people have access to the tools we expect them to learn?

If you’re championing AI from inside, ask your leadership:

What specific tools are approved for use?

What data can and cannot be processed through these tools?

Who has authority to make decisions when questions arise?

What budget exists for team accounts, and when will it be available?

If you can’t get clear answers, you’ve found the problem. It’s not adoption. It’s alignment.

Download the Diagnostic Prompts and use them before your next leadership meeting.

One more thing

If this sounds like your company, send it to whoever needs to read it. Sometimes the person who can fix the problem doesn’t know the problem exists.

Adapt & Create,

Kamil

Let's hope more firms are able to apply the lessons from 2025 in 2026!