How to Use AI Safely at Work (Without Exposing Company Secrets)

A step-by-step guide that takes 15 minutes to implement

Consultants advising on AI safety face a critical paradox: how do you use AI tools without exposing client data? This article provides a practical three-tier approach that secures your own AI usage while creating a billable service framework for clients. The comprehensive prompt gives you both personal protection and a ready-made methodology to turn AI governance into revenue; letting you practice what you preach while building expertise that clients will pay for.

Hey Adopter,

Samsung engineers pasted proprietary source code directly into ChatGPT. The data became visible to other users. Their "AI security" was a checkbox exercise that cost them competitive secrets.

This wasn't an edge case. McDonald's AI chatbot exposed 64 million job applicants' personal information in July 2025 because someone used "123456" as the password. Meanwhile, ChatGPT's 2023 breach exposed partial payment data of 1.2% of Plus users.

The problem is accelerating. AI contributed to a 5% increase in publicly reported data compromises this year alone. Yet professionals keep pretending their data handling protocols actually work. They don't.

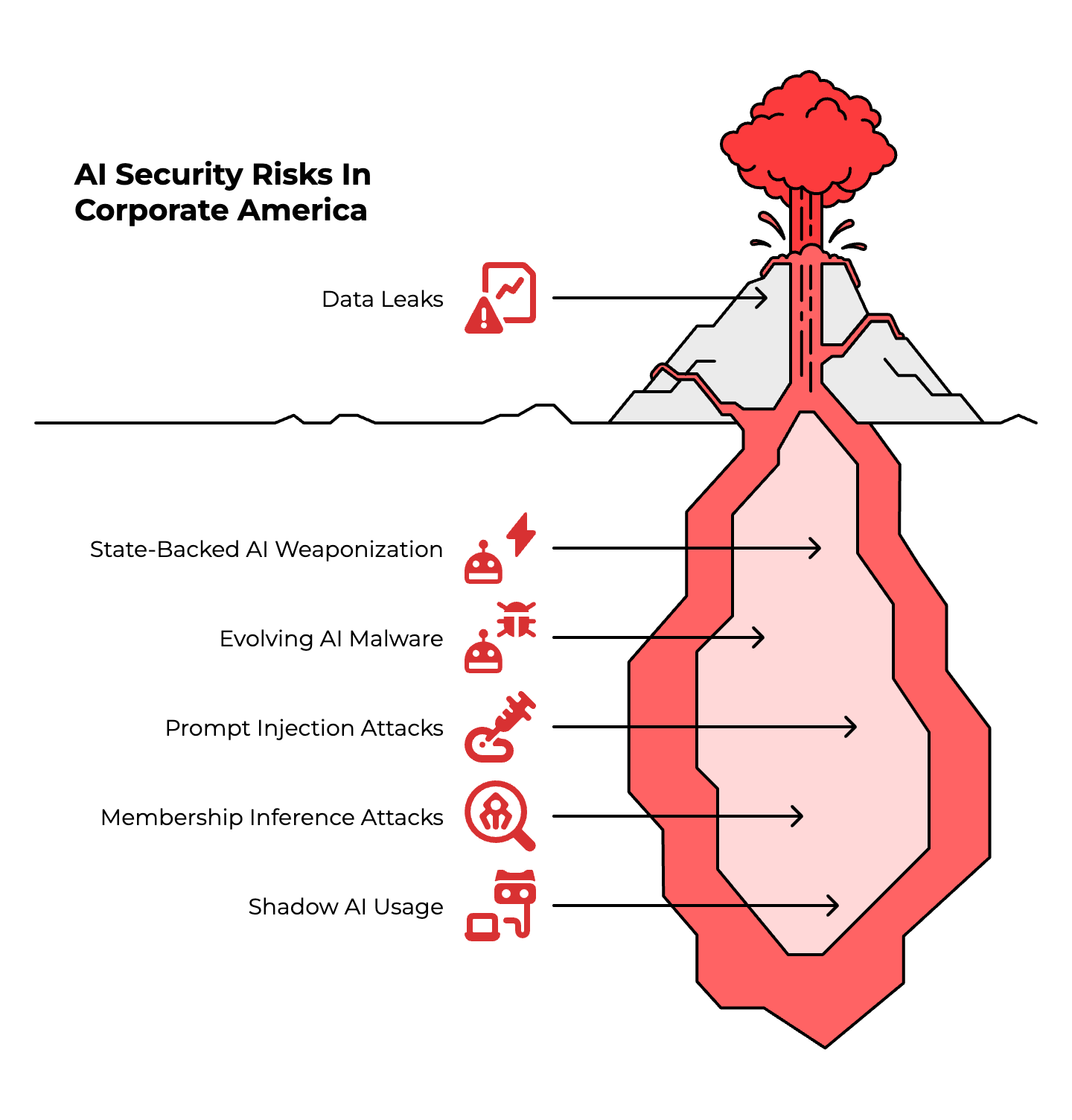

The real threat isn't what you think

Most security discussions focus on abstract risks. Here's what's actually happening right now:

Employee AI usage is hemorrhaging data. 68% of organizations experienced data leakage from employee AI usage, while 99% of companies expose sensitive data to AI copilots. A single prompt can leak critical information.

State-backed actors are weaponizing AI tools. OpenAI dismantled multiple ChatGPT accounts linked to hackers from China, Russia, North Korea, and Iran who were using the platform for malware development and disinformation campaigns.

AI-powered malware is evolving faster than defenses. The BlackMatter ransomware uses AI to analyze victim defenses in real-time and adapt encryption strategies. Attackers now use AI to analyze stolen data for more precise attacks and create more effective phishing emails.

Prompt injection attacks can extract training data for pocket change. Researchers demonstrated extraction of 10,000 training samples for just $200. Your carefully crafted prompts? They're leaking company information you didn't even know you shared.

Membership inference attacks let attackers determine if specific records were in a model's training set. This isn't theoretical. It's a documented privacy attack vector that violates GDPR and HIPAA compliance faster than you can say "reasonable precautions."

Shadow AI usage across your team creates data flows you can't track. Google Cloud warns that acceptable use policies are essential because employees will use unsanctioned tools regardless of official policies.

The McDonald's breach happened because they had no meaningful controls. Most organizations are one careless prompt away from the same fate.

Why current security approaches fail

IT departments love complexity. They create elaborate frameworks that nobody follows because they're impossible to implement during actual work.

The typical corporate AI security protocol looks like this: "Don't put sensitive data in AI tools." Period. End of guidance.

This approach fails because it ignores how modern work actually happens. You need AI to stay competitive. Your stakeholders expect AI-powered insights. Blanket restrictions create workarounds that are less secure than thoughtful protocols.

Enterprise blocking doesn't solve the problem. Enterprises now block or restrict public LLMs, but this just pushes usage underground. Employees find ways around blocks because they need AI to get their jobs done.

Compliance theater wastes resources. Teams spend months implementing ISO frameworks without understanding what actually protects data. ISO 42001 requires 38 specific controls, but most organizations focus on documentation instead of practical protection.

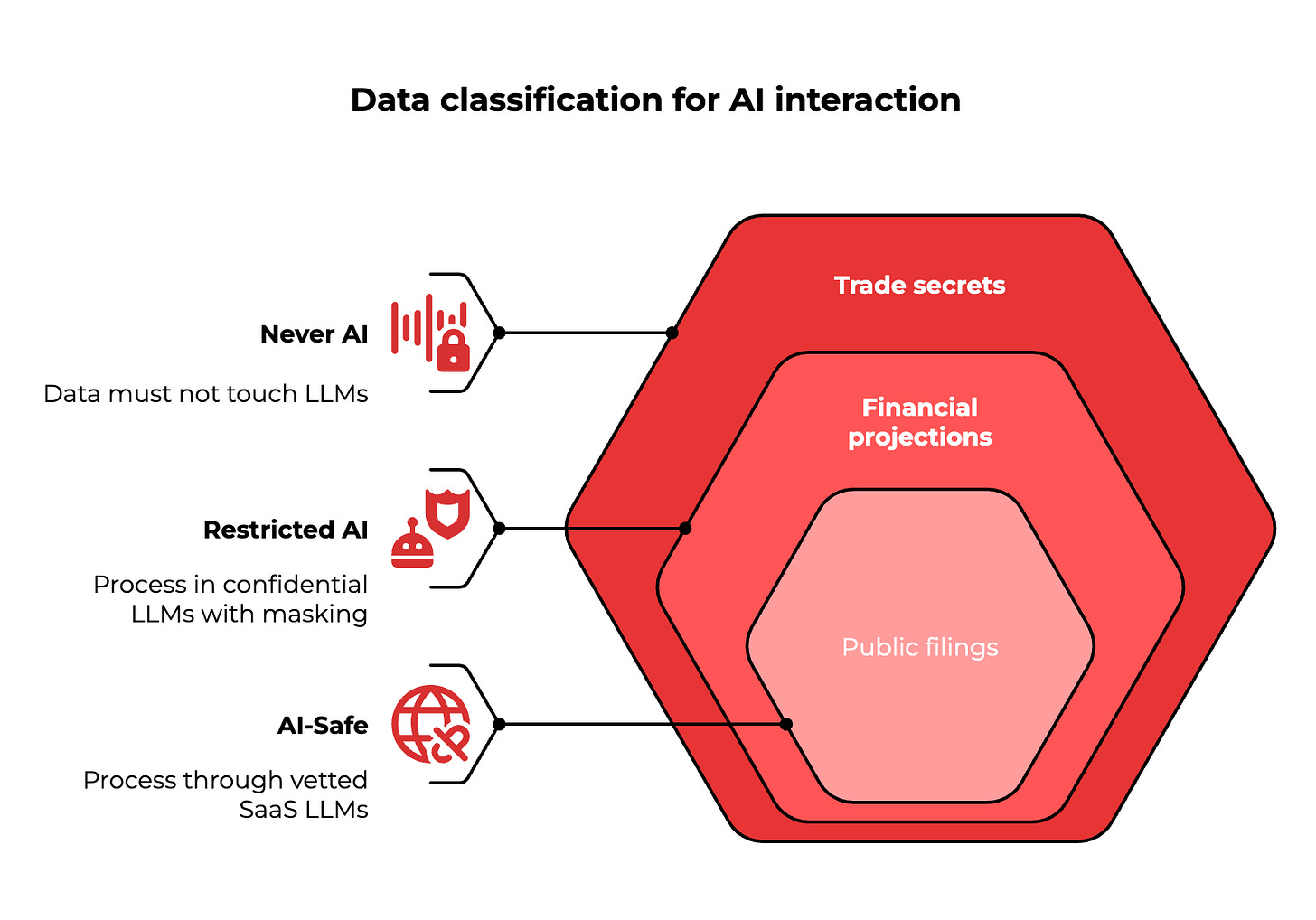

What actually works: the three-tier approach

Smart professionals classify data into three categories before any AI interaction:

Never AI: Trade secrets, unredacted personally identifiable information, legal strategies, confidential customer data. This data must not touch any external or internal LLM. Store it in encrypted systems and limit access strictly.

Restricted AI: Financial projections, anonymized datasets, competitive analysis, internal process documents. Allow processing in private or confidential LLMs with proper masking. Azure OpenAI on VNET keeps data within your organization's boundaries.

AI-Safe: Public filings, industry reports, general research, published content. Process through vetted SaaS LLMs with clear data retention policies. Use tools like ChatGPT Team mode that disable training on your inputs.

This isn't a theoretical classification. It's a decision framework that works during actual business operations.

Practical protocols that teams actually use

Deploy automated data discovery. Use AI-assisted classification tools to scan shared drives and tag files before they enter any workflow. This prevents accidental exposure of sensitive material.

Implement masking and tokenization for restricted data. Before sending information to any LLM, mask direct identifiers using reversible tokenization methods. Specialized guardrails tools handle this automatically.

Create prompt sanitization workflows. AWS prescriptive guidance recommends salted XML tags and input validation to prevent injection attacks. Build these into your standard operating procedures.

Establish clear communication protocols. Use secure channels for sensitive discussions. Route working documents through approved platforms with proper access controls. Reserve public LLMs for AI-safe research only.

Document everything. NIST AI Risk Management Framework requires measurable metrics and continuous improvement. Track which data enters which systems. This isn't bureaucracy, it's evidence that your protocols actually protect business interests.

The professional advantage

While large organizations struggle with complex security theater, individual professionals and small teams can implement practical protocols immediately. You control your tools, your workflows, and your data classification.

OWASP's GenAI security checklist provides specific technical controls for LLM applications. Follow their guidance for prompt injection mitigation, data leakage prevention, and supply chain security.

The goal isn't perfect security. It's demonstrable protection that maintains productivity while safeguarding organizational trust.

Most professionals treat AI security as an obstacle to work around. Smart professionals treat it as a competitive advantage that enables deeper stakeholder relationships and career advancement.

Your approach determines whether you're the person leadership trusts with sensitive projects, or the one they keep away from anything important because your data handling looks like Samsung's.

Implementation protocol

Use this prompt to create your comprehensive AI security protocol: