Your Team Stopped Questioning AI Six Weeks Ago

Microsoft tracked what happens when teams delegate thinking to AI. Critical judgment declined in 6 months. You’re probably already there.

Hey Adopter,

A strategy team shipped a market entry plan last quarter. AI-drafted. Data-backed. Confident tone. Looked solid.

Three months in: wrong market, wrong timing, wrong competitive assumptions. $2M mistake. Obvious in hindsight.

No one questioned it during review. AI wrote it, sounded authoritative, so they approved it. The AI wasn’t wrong because it hallucinated. It was wrong because no one made it defend its reasoning.

Microsoft Research measured this. Teams using AI for six months showed declining critical evaluation skills. The more tasks delegated, the less questioning happened. Speed increased. Judgment degraded.

You assign AI a role. That role determines whether your team gets smarter or lazier.

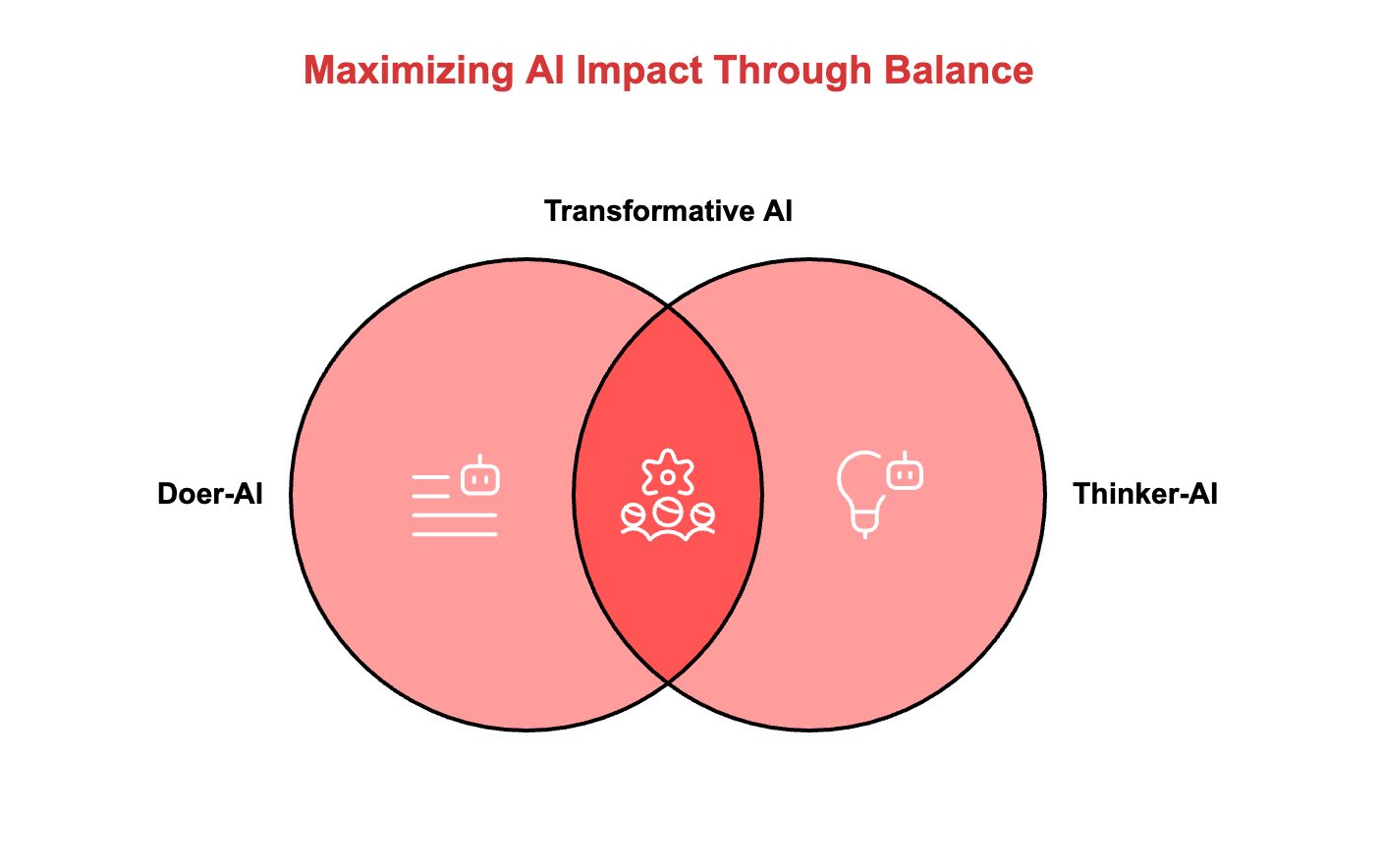

The two roles

AI functions as either a doer or a thinker. Doers execute: draft emails, summarize documents, pull data, automate workflows. Fast, reliable, zero friction. Most productivity gains come from doer AI.

Thinkers challenge: spot gaps, ask clarifying questions, surface overlooked stakeholders, force reconsideration of default assumptions. Slower, uncomfortable, high friction.

Doer AI saves time. Thinker AI improves decisions.

Most teams only deploy doers. That’s the problem Microsoft measured.

What thinker AI reveals

Professor Leon Prieto tested this with MBA students. Case study: an electric vehicle company sourcing cobalt from the Democratic Republic of the Congo. Standard supply chain problem.

Group A used doer AI. Got research summaries, risk frameworks, solution templates. Delivered recommendations in 90 minutes.

Group B used thinker AI. System asked questions. Identified stakeholders students missed: local communities facing displacement, labor monitoring agencies tracking exploitation, environmental groups documenting ecosystem damage. Mapped each stakeholder’s constraints. Flagged conflicts between stakeholder needs. Surfaced implementation risks no student had considered.

One student’s insight: water rights. Mining operations would compete with local agriculture for scarce water. Regulatory risk, community relations risk, operational risk. Missed entirely by Group A. Estimated fix cost post-launch: $50M. Found in planning because thinker AI questioned assumptions.

Group B took three hours. Delivered recommendations that survived real-world stress testing.

The doer gave answers. The thinker improved thinking.

That’s not a small difference.

How to deploy thinkers

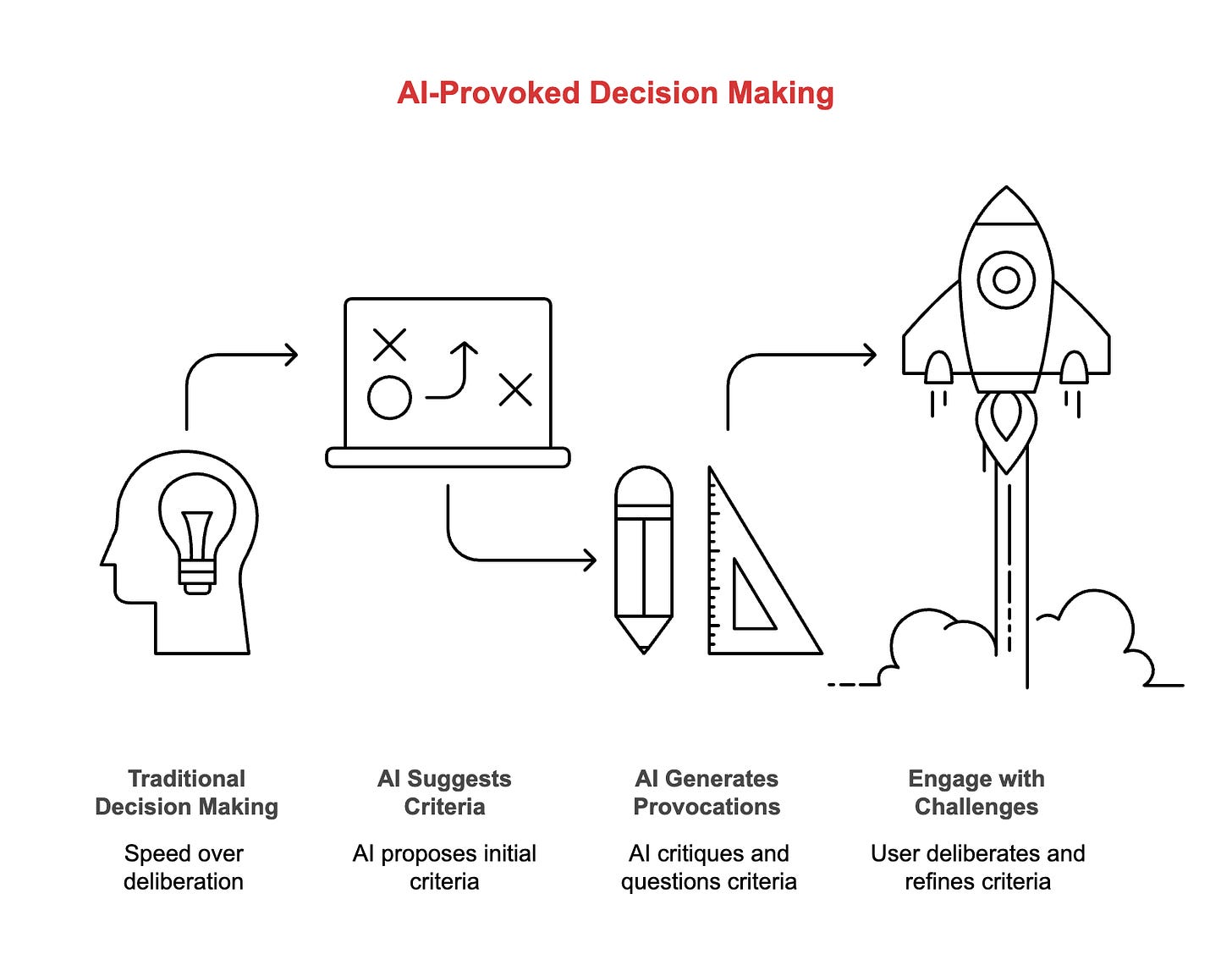

Microsoft’s fix: AI as provocateur. Systems that challenge their own outputs.

Their spreadsheet prototype works like this: AI suggests shortlisting criteria and sorts data. Then generates provocations for each criterion—short critiques questioning relevance, highlighting hidden biases, suggesting overlooked alternatives. You engage with challenges before finalizing.

Not an approval loop. A deliberation loop.
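To make the difference concrete, here is a rough Python sketch of a deliberation gate. It is not Microsoft's prototype, only an illustration of the idea described above: a set of criteria cannot be finalized until someone has responded to every provocation attached to it. The names `Criterion`, `respond`, `unresolved`, and `finalize` are invented for this example, and the provocations are typed in by hand rather than generated by a model.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One shortlisting criterion plus the critiques raised against it."""
    name: str
    provocations: list[str]
    responses: dict[str, str] = field(default_factory=dict)

    def respond(self, provocation: str, response: str) -> None:
        # Only provocations that were actually raised can be answered.
        if provocation not in self.provocations:
            raise ValueError(f"Unknown provocation: {provocation!r}")
        self.responses[provocation] = response

    def unresolved(self) -> list[str]:
        # Provocations nobody has engaged with yet.
        return [p for p in self.provocations if p not in self.responses]


def finalize(criteria: list[Criterion]) -> list[str]:
    """Return the criterion names, but only once every provocation has a response."""
    pending = {c.name: c.unresolved() for c in criteria if c.unresolved()}
    if pending:
        raise RuntimeError(f"Unanswered provocations: {pending}")
    return [c.name for c in criteria]


if __name__ == "__main__":
    price = Criterion(
        name="lowest unit price",
        provocations=["Does the lowest price hide supplier risk?"],
    )
    try:
        finalize([price])
    except RuntimeError as err:
        print("Blocked:", err)

    price.respond(
        "Does the lowest price hide supplier risk?",
        "Yes. Add a supplier audit score as a second criterion.",
    )
    print("Finalized:", finalize([price]))
```

An approval loop would let `finalize` succeed on a single click. Here the friction is the point: the decision stays blocked until someone writes down an answer to each challenge.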

Capgemini built three prototypes using this approach: leadership development, platform strategy, multi-stakeholder innovation. Each designed to ask rather than answer. Test rather than confirm.

Results: better decisions

Cost: friction

Most companies avoid friction. They want speed.

Start Monday

Pick your hardest decision this week. Use this exact prompt:

“I’m considering [your decision]. Challenge my core assumptions. Ask three questions that would make me reconsider this approach. Identify risks I haven’t addressed and stakeholders whose interests I might be ignoring. Don’t provide solutions; make me think harder.”

Success metric: if the output makes you uncomfortable, you’re using thinker AI correctly. If it confirms what you already believed, switch your prompt.
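If the prompt is going to be reused across several decisions, one option is to keep it as a template and fill in the decision each time. The sketch below is a hypothetical helper, not part of any tool mentioned here. `THINKER_PROMPT` and `build_thinker_prompt` are names made up for illustration, and the code only builds the text. Sending it to a model is left to whatever chat tool you already use.

```python
# Template for the thinker prompt; {decision} is the only slot to fill.
THINKER_PROMPT = (
    "I'm considering {decision}. Challenge my core assumptions. "
    "Ask three questions that would make me reconsider this approach. "
    "Identify risks I haven't addressed and stakeholders whose interests "
    "I might be ignoring. Don't provide solutions; make me think harder."
)


def build_thinker_prompt(decision: str) -> str:
    """Fill the thinker prompt with a one-line description of the decision."""
    decision = decision.strip()
    if not decision:
        # An empty decision would produce a prompt with nothing to challenge.
        raise ValueError("Describe the decision before asking for a challenge.")
    return THINKER_PROMPT.format(decision=decision)


if __name__ == "__main__":
    print(build_thinker_prompt("entering the German market next quarter"))
```

Paste the printed text into your AI tool of choice, then apply the success metric above to whatever comes back.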

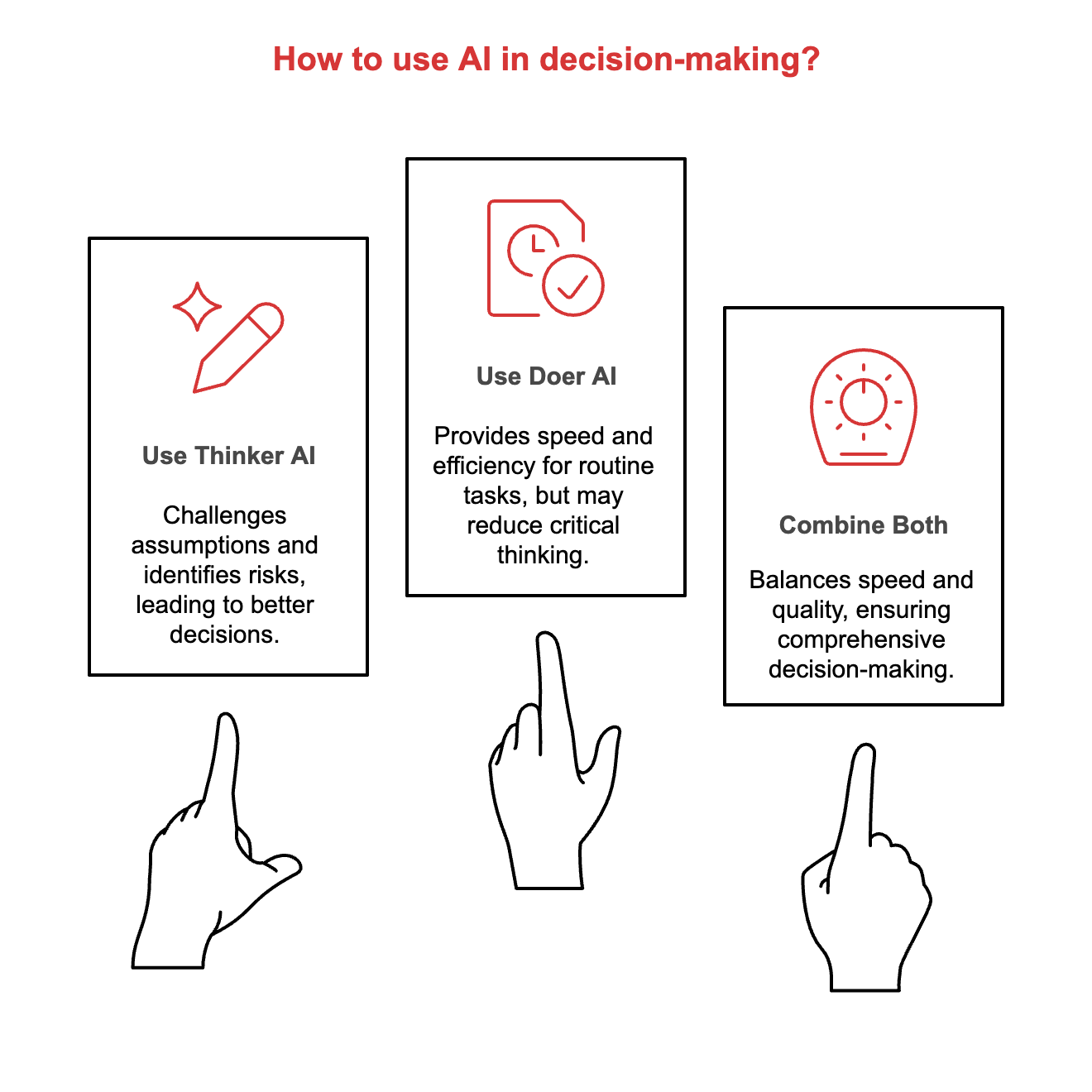

Deploy thinkers for:

Strategic decisions with multiple stakeholders

Complex problems where first answers miss context

Leadership challenges requiring assumption testing

Keep doers for:

Document drafting and meeting summaries

Data extraction and formatting

Repetitive, high-volume execution

The pattern that works combines both. Doers for speed. Thinkers for quality. Using only doers is faster to deploy and easier to scale. It’s also how teams stop thinking critically without noticing.

Microsoft measured the cost. Six months of doer-only AI, measurable decline in critical evaluation. Teams accepted outputs instead of questioning them. The productivity gains were real. The judgment losses were real too.

Capgemini proved the alternative works. Thinker AI that challenges assumptions produces better strategic outcomes. It’s slower. More expensive to build. Uncomfortable to use.

Also harder for vendors to sell, which is why most teams never see it.

Your competitors are figuring this out. The teams that deploy thinkers for strategy while keeping doers for execution will compound advantages you can’t close later.

Start Monday. Use the prompt. See what changes.

Adapt & Create,

Kamil

Kamil,

this struck me — not the data, but the silence behind it.

When thinking becomes outsourced, the body forgets how to question.

Maybe that’s the real cost: not slower minds, but quieter instincts.

AI can’t kill creativity — only our readiness to stay uncertain can.

In other words, AI is very much like the average strategy consultant and has about the same effect on the cognitive and analytical abilities of the companies hiring them.