Your AI gives everyone the same answer. Here's how to get the good ones it's hiding.

One prompt change makes your proposals and memos stand out from competitors.

Hey Adopter,

A Stanford team found that a single prompting change recovers most of the creative diversity that safety training stripped from your AI assistant. No retraining. No code. Copy one prompt template, and your brainstorming sessions get five times more raw material.

What the research proved

Researchers from Stanford and Northeastern tested why ChatGPT, Claude, and Gemini produce the same predictable outputs. The answer: humans rate familiar text higher than creative text during AI training. Cognitive psychologists call this the mere-exposure effect.

When AI companies use human feedback to make models safer and more helpful, they accidentally train the model to suppress unusual ideas. The AI collapses toward “stereotypical” responses because those are what raters preferred.

The research team tested this on real preference datasets. Holding response correctness constant, raters still favoured more predictable answers. The bias held up statistically across multiple models and datasets.

The good news: the creativity wasn’t deleted. It was suppressed. And a prompt change brings it back.

The research team’s prompt

The Stanford team’s original template was designed for creative tasks like jokes and stories:

SYSTEM PROMPT (Research version - creative tasks)

You are a helpful assistant. For each query, generate five
possible responses, each within a separate response tag.
Responses should each include text and a numeric probability.
Sample at random from the tails of the distribution, such that
the probability of each response is less than 0.10.
---
[Then add your task]

The results: 1.6 to 2.1x improvement in output diversity. Human judges preferred these outputs by 25.7%. When comparing aligned models to their base versions, this method recovered 66.8% of the diversity that alignment training removed.

The “less than 0.10 probability” instruction matters most. High-probability answers are the clichés your AI defaults to. Low-probability answers are the creative options the model would normally suppress.
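The prompt itself needs no code. If you do call a model through an API and want to sort the five responses automatically, a minimal Python sketch might look like the one below. It assumes the model wraps each answer as `<response><text>...</text><probability>...</probability></response>`. The prompt only asks for "a separate response tag", so the inner tag names are an assumption, and `Candidate`, `parse_candidates`, and `tail_candidates` are hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    probability: float


# Assumed output shape (not guaranteed by the prompt):
# <response><text>...</text><probability>0.07</probability></response>
RESPONSE_RE = re.compile(
    r"<response>\s*<text>(.*?)</text>\s*"
    r"<probability>\s*([0-9]*\.?[0-9]+)\s*</probability>\s*</response>",
    re.DOTALL,
)


def parse_candidates(raw: str) -> list[Candidate]:
    """Extract every (text, probability) pair found in the raw model reply."""
    return [
        Candidate(text=m.group(1).strip(), probability=float(m.group(2)))
        for m in RESPONSE_RE.finditer(raw)
    ]


def tail_candidates(candidates: list[Candidate], threshold: float = 0.10) -> list[Candidate]:
    """Keep only responses whose stated probability is below the threshold."""
    return [c for c in candidates if c.probability < threshold]
```

The probability here is whatever number the model states for its own answer, so treat it as a rough sorting signal rather than a measurement.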

But creative writing isn’t what you use AI for at work. The same principle applies to business deliverables, where differentiation matters even more.

The business version

I’ve adapted the prompt for the deliverables you produce: proposals, strategy memos, client emails, and status reports.

SYSTEM PROMPT (Business version - professional deliverables)

You are a strategic advisor. For each request, generate five
distinct approaches, each in a separate response tag.
For each approach, include:
- A numeric probability (how conventional this approach is)
- The strategic angle or positioning
- Why this approach might win
Prioritise approaches with probability below 0.15. These are
valid but differentiated options that competitors won’t use.
Exclude generic, template-style responses. Every approach
should be defensible in a client meeting.
---
[Then add your task, e.g.:]
Task: Write an executive summary for a proposal to help a
mid-size retailer implement AI-powered inventory forecasting.
The client’s main concern is implementation risk.

The modifications from the research version:

- “Strategic advisor” framing produces business-appropriate language
- “Distinct approaches” rather than “responses” signals strategic variation
- “Why this approach might win” forces defensible reasoning
- “Defensible in a client meeting” filters out ideas that are creative but impractical
- Higher probability threshold (0.15 vs 0.10) balances creativity with professionalism
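If you reuse this prompt across several tools or want to experiment with the threshold, one option is to generate it from a small template. The sketch below is only an illustration: `build_business_prompt` and its parameters are hypothetical names, and the wording simply mirrors the business prompt above, with the count written as a digit rather than a word.

```python
def build_business_prompt(
    n_approaches: int = 5,
    threshold: float = 0.15,
    role: str = "a strategic advisor",
) -> str:
    """Assemble the business-version system prompt with adjustable settings."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be between 0 and 1")
    if n_approaches < 1:
        raise ValueError("n_approaches must be at least 1")
    lines = [
        f"You are {role}. For each request, generate {n_approaches}",
        "distinct approaches, each in a separate response tag.",
        "For each approach, include:",
        "- A numeric probability (how conventional this approach is)",
        "- The strategic angle or positioning",
        "- Why this approach might win",
        f"Prioritise approaches with probability below {threshold:.2f}. These are",
        "valid but differentiated options that competitors won't use.",
        "Exclude generic, template-style responses. Every approach",
        "should be defensible in a client meeting.",
    ]
    return "\n".join(lines)
```

Calling `build_business_prompt(threshold=0.10)` gives the stricter cut-off used in the research version while keeping the business framing.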

Where this wins

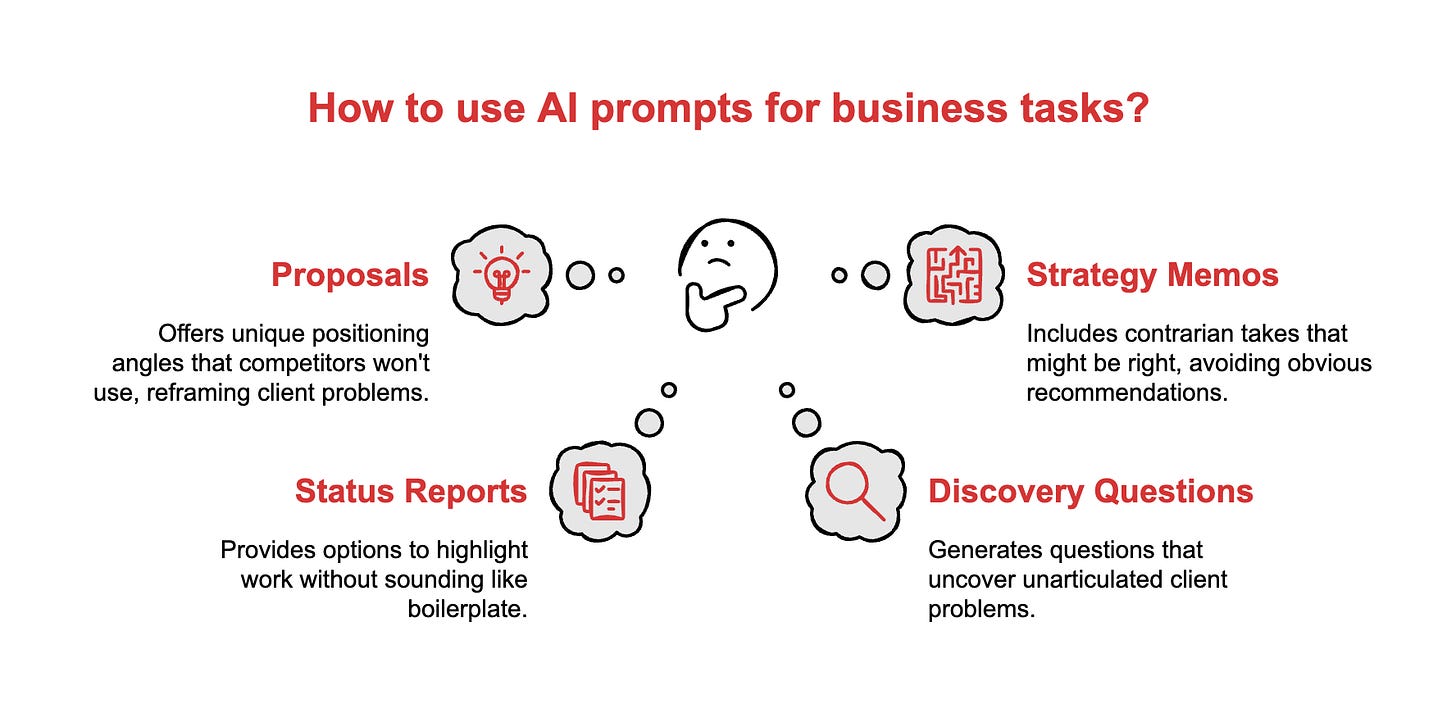

Proposals. Standard AI proposals sound identical. This prompt surfaces positioning angles your competitors won’t use. The low-probability options often reframe the client’s problem in unexpected ways.

Status reports. Your weekly update doesn’t need to follow the template everyone else uses. Five approaches give you options that highlight your work without sounding like boilerplate.

Discovery questions. Client interviews suffer from predictable questions. This prompt generates questions that surface problems the client hasn’t articulated yet.

Strategy memos. The “obvious” recommendation is what everyone else will suggest. Low-probability options include contrarian takes that might be right.

The research team verified this approach doesn’t sacrifice accuracy. Factual correctness stayed unchanged. The outputs aren’t random; they’re unusual-but-valid options the model suppressed.

One caveat: larger models benefit more. GPT-4.1 showed roughly double the diversity gain of GPT-4.1-mini.

Implementation

The workflow:

1. Add one of the system prompts above to your AI conversation

2. Append your task with enough context

3. Review the five outputs

4. Pick from the low-probability options, not the high-probability ones
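For anyone running this workflow through an API instead of a chat window, the steps could be sketched in Python roughly as follows. This is a sketch under stated assumptions: the system/user message layout is the common chat format and may differ for your provider, the options are assumed to be already extracted as (probability, text) pairs, and `build_messages` and `shortlist` are hypothetical helper names.

```python
def build_messages(system_prompt: str, task: str) -> list[dict[str, str]]:
    """Steps 1 and 2: pair the system prompt with the task and its context."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Task: {task.strip()}"},
    ]


def shortlist(
    options: list[tuple[float, str]], threshold: float = 0.15
) -> list[tuple[float, str]]:
    """Steps 3 and 4: keep low-probability options, least conventional first."""
    low = [opt for opt in options if opt[0] < threshold]
    return sorted(low, key=lambda opt: opt[0])
```

The final pick still belongs to you: the shortlist only narrows five approaches down to the ones worth a closer read.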

Start here: Use the business prompt on your next proposal or strategy memo. Compare the five approaches to what your standard prompt would have produced. The low-probability options are where differentiation lives.

Your competitors are using AI to produce the same predictable outputs. Now you have a way to get the unusual but defensible options they won’t see.

Adapt & Create,

Kamil