U.S. Government AI Wastes Billions on the Wrong Priorities

Learn how to avoid the same expensive mistakes in your company

Hey Adopter,

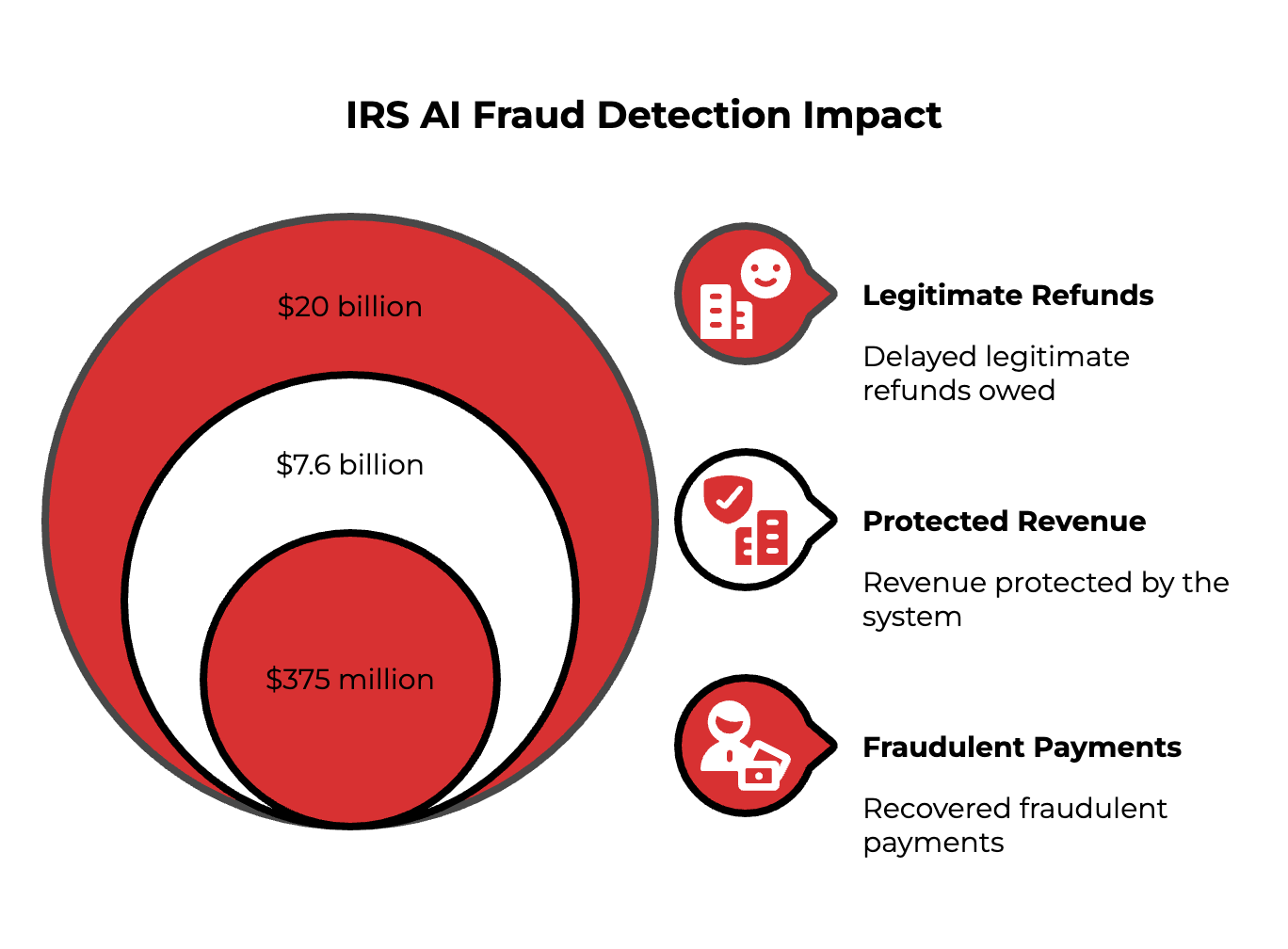

The IRS used AI to recover $375 million in fraud, but delayed nearly $20 billion in legitimate refunds owed to innocent taxpayers.

Last week, I walked through the halls of the Capitol as a newly minted American citizen, fresh from completing the immigration process that millions navigate each year. The ceremony was efficient, almost algorithmic in its precision. Names called, oaths sworn, certificates distributed.

But my journey to that moment told a different story. Months of navigating USCIS systems that demanded perfect digital submissions, rejected applications for minor inconsistencies, and created two distinct experiences: a fast lane for those who could master the bureaucratic algorithm, and a slow, punitive track for everyone else.

Standing in Washington, DC, surrounded by the machinery of government, I couldn't shake the feeling that I'd just experienced the future of public service. And it wasn't entirely reassuring.

When efficiency metrics lie

Government agencies across America are deploying AI at breakneck speed, chasing efficiency gains that look spectacular on paper. Processing times slashed by months. Backlogs reduced by double digits. Costs cut by millions.

But dig beneath the metrics and a troubling pattern emerges. These AI systems are achieving their efficiency targets by systematically shifting costs and burdens elsewhere, creating what experts now call the "AI efficiency trap."

Your organisation is walking into the same trap.

The false positive disaster

The Internal Revenue Service provides the starkest example of this phenomenon. The agency deployed sophisticated AI models to detect tax fraud, and by narrow metrics, they worked brilliantly. The Treasury reported recovering $375 million in fraudulent payments through enhanced AI-powered detection processes.

What the headlines missed was the collateral damage. A Treasury Inspector General report found that the IRS's fraud detection filters had a false positive rate of 81%. While the system protected $7.6 billion in revenue, it did so by delaying nearly $20 billion in legitimate refunds owed to compliant taxpayers.

Think about that ratio. For every dollar of revenue protected, roughly $2.60 in legitimate refunds was held up. The AI didn't eliminate the work of fraud detection; it massively amplified it while making innocent taxpayers pay the price.
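The $2.60 figure comes from dividing the delayed refunds by the revenue protected. Here is a minimal Python sketch of that arithmetic, assuming the rounded figures quoted above ($7.6 billion protected, $20 billion delayed). The helper name `burden_ratio` is hypothetical and is not an IRS or Treasury metric.

```python
def burden_ratio(benefit_usd: float, shifted_cost_usd: float) -> float:
    """Dollars of cost pushed onto others per dollar of headline benefit.

    Hypothetical helper for illustration; not an IRS or Treasury metric.
    """
    if benefit_usd <= 0:
        raise ValueError("benefit must be positive")
    return shifted_cost_usd / benefit_usd


# Rounded figures from the Treasury Inspector General report cited above
revenue_protected = 7.6e9      # revenue protected by the fraud filters
legit_refunds_delayed = 20e9   # "nearly $20 billion", rounded up to $20 billion here

ratio = burden_ratio(revenue_protected, legit_refunds_delayed)
print(f"${ratio:.2f} of legitimate refunds delayed per $1 protected")
```

Run as-is, it prints about $2.63, which rounds to the $2.60 above. The denominator matters: measured against the $375 million in recovered fraud instead, the same $20 billion works out to roughly $53 delayed per dollar recovered.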

The burden shift playbook

The pattern repeats across agencies. U.S. Citizenship and Immigration Services (USCIS) achieved remarkable efficiency gains, cutting average case processing times from 10.5 months to 6.1 months. The agency processed a record 10.9 million cases and reduced its decade-long backlog by 15%.

But this success came with a hidden cost. The AI systems are optimised for standardised, easily classifiable data. Applicants with non-standard documents or minor discrepancies face automatic scrutiny and delays. The agency's own guidance now tells applicants to submit "crystal-clear scans" and ensure "absolute consistency" across all documents to avoid algorithmic triggers.

USCIS didn't become more efficient by processing cases better. It became more efficient by making applicants do more work upfront and penalising those who couldn't meet its rigid digital standards.

The metrics that matter

These cases reveal a fundamental flaw in how organisations measure AI success. Both agencies optimised for narrow efficiency metrics while ignoring the broader costs they imposed on their stakeholders.

The IRS measured "fraud dollars recovered" but not "legitimate taxpayer burden." USCIS tracked "processing time" but not "applicant preparation cost" or "equity of outcomes."

Your organisation likely uses similar metrics. Time saved. Costs reduced. Processes automated. But are you measuring what happens to the work that doesn't disappear but simply gets moved?
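One way to make moved work visible is to report every headline efficiency metric next to the burden it shifts elsewhere, in the same units. Below is a minimal Python sketch. The `PairedMetric` class, its field names, and the invoice-triage numbers are hypothetical placeholders, not data from the IRS or USCIS cases.

```python
from dataclasses import dataclass


@dataclass
class PairedMetric:
    """Hypothetical scorecard entry: a headline gain paired with the burden it shifts.

    Both values must use the same unit (e.g. hours per month) to be comparable.
    """
    name: str
    headline_gain: float   # e.g. staff hours saved per month by the AI system
    shifted_burden: float  # e.g. extra hours customers or other teams now spend

    @property
    def net_gain(self) -> float:
        """Gain left after subtracting the burden moved elsewhere."""
        return self.headline_gain - self.shifted_burden

    @property
    def offset_share(self) -> float:
        """Fraction of the headline gain cancelled out by the shifted burden."""
        if self.headline_gain <= 0:
            raise ValueError("headline_gain must be positive")
        return self.shifted_burden / self.headline_gain


# Illustrative numbers only
triage = PairedMetric("Invoice triage", headline_gain=400, shifted_burden=250)
print(f"{triage.name}: net {triage.net_gain:g} h/month, "
      f"{triage.offset_share:.1%} of the gain offset")
```

An offset share at or above 100% means the system relocates at least as much work as it saves, which is the pattern behind the IRS and USCIS numbers.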

The $2 billion pattern emerges

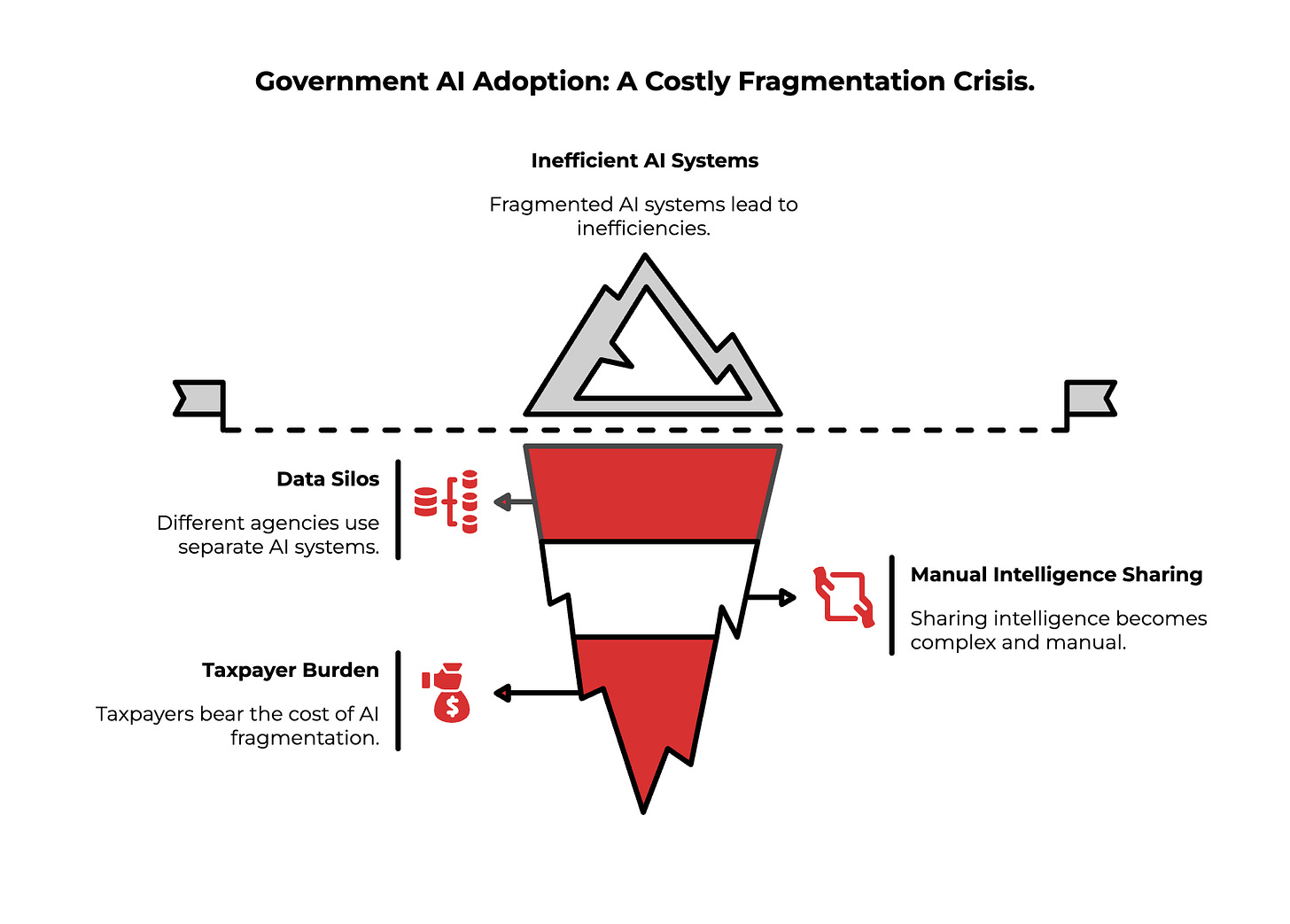

The efficiency trap is just the beginning. Government AI adoption reveals an even more expensive failure mode that's spreading through organisations everywhere.

A Council on Foreign Relations analysis found that the Department of Justice maintains 12 different licence plate reader systems and nine separate AI systems for audio and video transcription across its various components. When the FBI, DEA, and ATF each use different systems for the same task, sharing crucial intelligence becomes a complex, manual process.

This fragmentation crisis is costing taxpayers billions and creating the exact inefficiencies AI was meant to solve. But the lessons for private-sector leaders are profound, and the solutions are clearer than you might expect.

Want the full analysis? Download our complete case study report covering:

The $2 billion fragmentation crisis and how to avoid it in your organisation

Why 75% of government workers say AI made their jobs harder, not easier

The six-phase integration blueprint that actually works

Strategic frameworks for measuring AI success beyond efficiency metrics

The "human-in-the-loop" workforce model that prevents algorithmic disasters

Download the full report. It's essential reading for anyone deploying AI at scale.