How to Use Sora 2 to Create Your Own Marketing Videos (Without Hiring Anyone)

Six tools, one morning, zero corporate budget. Copy my exact workflow. Build your own ads. Takes less than an hour.

Hey Adopter,

You’re about to see how a 45-minute morning session, interrupted by making eggs for my two kids, produced a video ad that people actually shared. Karo (Product with Attitude) asked me to consider making a tutorial, so here we are. Daria Cupareanu, Jenny Ouyang, and Claudia Faith liked it too :)

First of all, below is the ad. Go ahead and like and share it, please 🙏

The slop problem (add me below to create some 😂)

Sora 2 launched and the internet flooded with AI-generated videos. Most of them look like tech demos. Shiny. Polished. Utterly forgettable.

The pattern repeats with every new AI tool. Release happens. Everyone generates content. Nobody directs it toward an actual business outcome.

I wanted to test whether you could skip the slop and go straight to something your audience would remember.

One shot vs. fifteen tries

Five of my six scenes generated perfectly on the first attempt. The sixth took fifteen iterations because I couldn’t land the tone for the closing line.

That ratio matters. When people see “AI-generated” content, they assume either magic (one prompt, perfect output) or slog (endless tweaking until something works). The reality splits the difference. Most of the work succeeds quickly if you structure your prompts as self-contained units. The final 10% eats half your time.

Each scene needed to stand alone. Sora 2 doesn’t maintain context between prompts, so you can’t write “transition to a smoky steel mill” and expect it to remember the previous scene. You describe each shot as a complete visual moment: subject, setting, action, period details.

The scenes that worked immediately:

Opening: printing press, historical setting, me walking through it

Industrial Revolution: factory floor, steam engines visible

Computer era: 1980s office, me in period-appropriate clothing

Modern office: contemporary setting, natural lighting

The scene that didn’t: closing shot where I deliver the final line. The lip sync kept failing, or the composition felt sterile, or the branding elements looked forced. Fifteen attempts to get one seven-second clip right.
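One way to keep every scene prompt self-contained is to template the four elements named above (subject, setting, action, period details) so that no prompt leans on another. The sketch below is only an illustration: the `Scene` class, the `to_prompt` method, and the example wording are hypothetical, not the prompts used for this ad, and nothing here calls Sora. It just builds text you could paste in.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """One shot, described completely enough to stand on its own."""
    subject: str
    setting: str
    action: str
    period_details: str

    def to_prompt(self) -> str:
        # Restate all four elements every time; the generator is assumed
        # to know nothing about earlier scenes.
        return (
            f"{self.subject} {self.action} through {self.setting}. "
            f"Period details: {self.period_details}."
        )


# Placeholder wording for two scenes; adjust to your own ad.
scenes = [
    Scene(
        subject="A man in a linen shirt and leather apron",
        setting="a candle-lit workshop with a wooden printing press",
        action="walks",
        period_details="hand-set type, ink-stained tables, hanging printed sheets",
    ),
    Scene(
        subject="A man in a 1980s suit with a wide tie",
        setting="an open-plan office full of beige desktop computers",
        action="walks",
        period_details="CRT monitors, dot-matrix printers, fluorescent lighting",
    ),
]

prompts = [scene.to_prompt() for scene in scenes]
for number, prompt in enumerate(prompts, start=1):
    print(f"Scene {number}: {prompt}")
```

Because each prompt repeats the subject and setting in full, you can regenerate any single scene fifteen times without touching the others.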

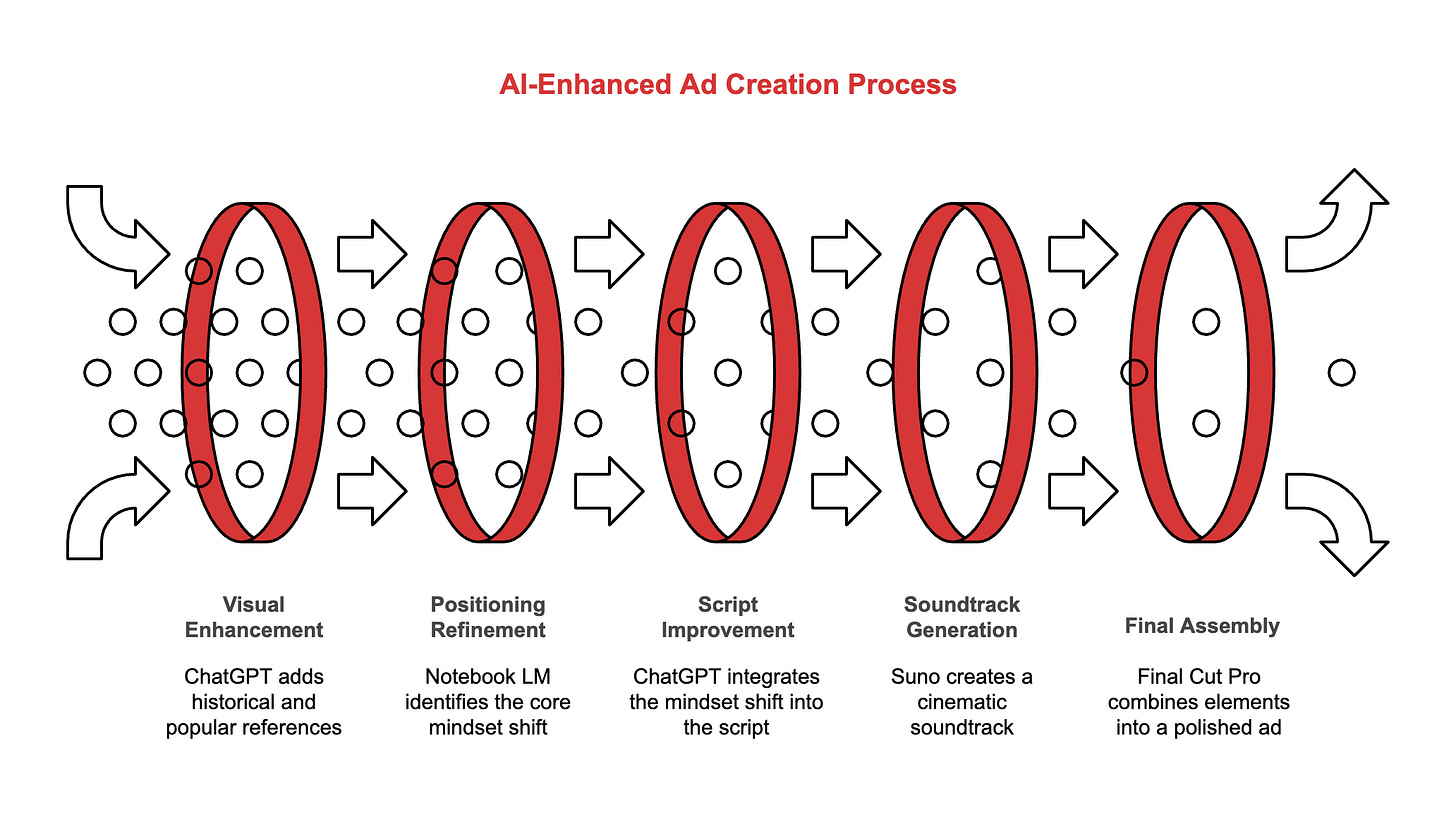

The stack that replaced an agency

ChatGPT wrote the script structure. I started with this:

I want to create a 30-second trailer or Facebook ad featuring me, Kamil, walking through different scenes, talking about the concerns of my target audience, the opportunity, and then the reason why they should join the AI Adopters Club. I want to do that in a bunch of different scenes, six scenes, each four to seven seconds long. Give me a description of a continuous script with every scene being completely different, simply described in one or two sentences per scene, but always featuring me as I walk through the scene to make a cohesive ad with different outfits and everything aligned to AI and business and me as a mentor to my target audience.

It returned something usable but generic. So I pushed further:

How could this be a little bit more visually interesting? Maybe with historical and popular references? Those references show how history rhymes when people adapt to technology.

Better. But still missing the core positioning of my newsletter.

Notebook LM solved that gap. I fed it two-thirds of my newsletter archive and had ChatGPT generate a question that would extract my positioning:

Ask me a question that will help you understand more about what I talk about in the AI Adopters Club.

ChatGPT returned this:

What’s the single biggest mindset shift you’re trying to create in your audience? What do you want them to stop believing about AI at work? And what do you want them to start believing instead?

I took that question to Notebook LM, which synthesized months of writing into one paragraph about the transition from viewing AI as a passive tool to understanding it as something that requires active direction. Then back to ChatGPT:

With this answer in mind, try to improve the script above.

[Paste the Notebook LM response here]

The revised script had the positioning locked in. The historical references weren’t decorative anymore. They reinforced the central message: the tools change, but the winners are always the ones who direct the work.

Suno generated the soundtrack from this prompt:

What type of epic soundtrack would work for this as background music? Instrumental only, but not typical corporate.

I took the description ChatGPT provided, refined it slightly, and dropped it into Suno. Cost: $10 for a subscription that produces hundreds of tracks. I use it to make songs for my kids at breakfast. You type “song about eating your eggs” and 30 seconds later you have something that makes them laugh and finish their food.

Final Cut Pro handled assembly. Drop the Sora clips on the timeline, add simple transitions, overlay the Suno track, and drop in a few Eleven Labs sound effects for the scene changes. I added period-correct visual treatments: old film grain for the printing press, slight CRT distortion for the computer scene. Total edit time: maybe 15 minutes.

Why most people generate slop instead

Three mistakes kill 90% of AI-generated content:

Prompting without structure. People write one long paragraph describing everything they want and wonder why the output feels scattered. Each prompt needs a single, complete instruction. Subject. Action. Setting. Tone. If you’re making multiple assets, each prompt works in isolation.

Skipping the feedback loop. The first draft from any AI tool is a starting point. You ask for revisions. You test variations. You use one tool to critique another. ChatGPT writes a script, Notebook LM refines the positioning, you take that refinement back to ChatGPT. The iteration is where quality emerges.

Accepting the default aesthetic. Every AI tool has a house style. Sora tends toward a certain cinematic look. Suno has recognizable production patterns. The outputs feel generic because people stop at “generate” and skip “direct.”

You add specificity. Period details. Visual references. Brand elements. The tools give you raw materials. You shape them into something that serves a business purpose.

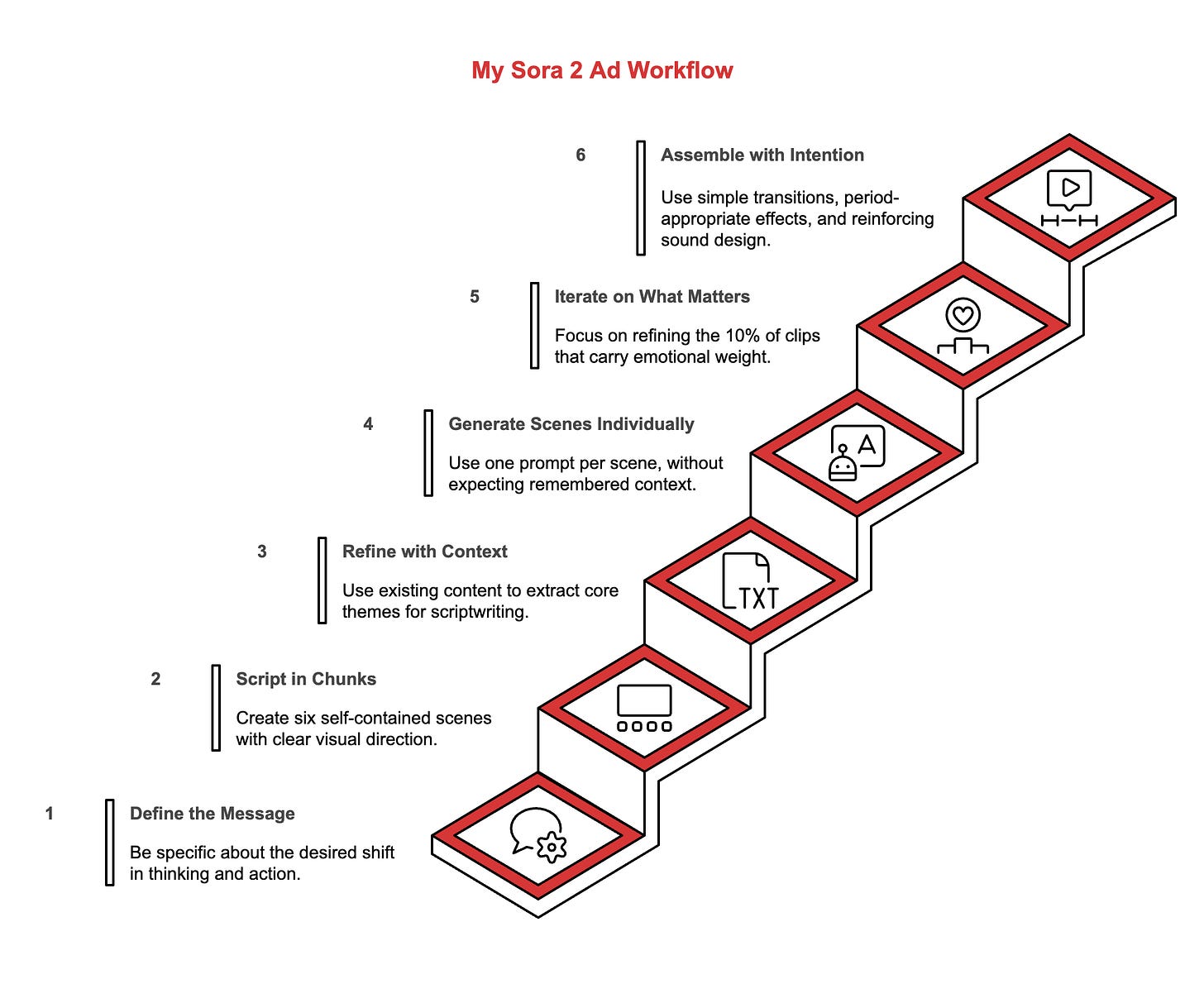

The workflow, step by step

Define the message. Not “make a video about AI.” Be specific: what shift in thinking do you want to create? What action do you want people to take after watching?

Script in chunks. Six scenes. Each self-contained. Each with clear visual direction. Each connected by a throughline but workable as standalone units.

Refine with context. If you have existing content, use Notebook LM to extract your core themes. Feed those themes back into your scriptwriting tool.

Generate scenes individually. One prompt per scene. Don’t expect the tool to remember previous context.

Iterate on what matters. Accept that most clips will work quickly. Spend your time on the 10% that carry the emotional weight.

Assemble with intention. Simple transitions. Period-appropriate visual effects. Sound design that reinforces the message.

Total time from concept to finished asset: 45 minutes, interrupted by breakfast prep.
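The scene-length math from the original brief (six scenes, four to seven seconds each, roughly 30 seconds in total) is easy to sanity-check before you generate anything. This is a minimal sketch. The per-scene durations are made-up placeholders, apart from the seven-second closing clip mentioned earlier.

```python
TARGET_SECONDS = 30
MIN_SCENE_SECONDS, MAX_SCENE_SECONDS = 4, 7

# Hypothetical per-scene lengths in seconds; only the final
# seven-second closing clip comes from the write-up.
scene_seconds = [5, 5, 4, 4, 5, 7]

# Bounds on total runtime if every scene were as short or as long as allowed.
shortest_total = len(scene_seconds) * MIN_SCENE_SECONDS
longest_total = len(scene_seconds) * MAX_SCENE_SECONDS
planned_total = sum(scene_seconds)

# Scenes that fall outside the four-to-seven-second window.
out_of_range = [
    s for s in scene_seconds
    if not MIN_SCENE_SECONDS <= s <= MAX_SCENE_SECONDS
]

print(f"Possible range: {shortest_total}-{longest_total} s")
print(f"Planned total: {planned_total} s (target {TARGET_SECONDS} s)")
print(f"Scenes outside the window: {out_of_range}")
```

Six scenes at four to seven seconds can land anywhere between 24 and 42 seconds, so hitting 30 means averaging about five seconds per scene.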

What changes when you direct instead of generate

The ad got shared. People who saw it asked how long it took to make. Some assumed days of work. Others thought I hired a production team.

Nobody thought “generic AI slop.”

The difference: every choice served the message. The historical references weren’t decoration. They built the argument that technology always rewards people who direct it, not just use it. The visual progression moved from past to present to future. The closing line landed because I iterated fifteen times until the delivery felt right.

You can replicate this workflow for product demos, explainer videos, client pitches, social ads. The tools are accessible. The cost is measured in subscriptions, not agencies.

Tools used in this workflow

Sora 2 (AI video generation)

Also accessible at sora.com when signed in with OpenAI credentials

Suno (AI music generation)

$10/month subscription

ChatGPT (Script development and creative direction)

$20/month for Plus tier with Sora 2 access

Notebook LM (Google AI notetaking and research)

Free

Final Cut Pro (Video editing, Apple)

One-time purchase

Eleven Labs (AI audio, voice, and sound effects)

Starting at $5/month

Total monthly cost for subscriptions: $35. Total time: 45 minutes.
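The $35 figure is the sum of the three recurring subscriptions listed above. Notebook LM is free and Final Cut Pro is a one-time purchase, so neither counts toward the monthly total. A quick sketch of that arithmetic, with prices taken as listed here (they may have changed since):

```python
# Monthly prices in US dollars, as listed above.
monthly_subscriptions = {
    "Suno": 10,
    "ChatGPT Plus": 20,
    "Eleven Labs (starting tier)": 5,
}

total = sum(monthly_subscriptions.values())
print(f"Total monthly cost: ${total}")
```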

The constraint isn’t the budget. It’s whether you’re willing to direct instead of just prompt.

Next time you see AI-generated content that feels empty, ask: did someone direct this toward an outcome, or did they just hit generate and walk away?

That’s the gap between slop and strategy.

Adapt & Create,

Kamil

Kamil Banc's "How to Use Sora 2 to Create Your Own Marketing Videos" is the best detailed instructions I have seen! Thanks so much Kamil. This will help a lot of people including me. I have been working with AI for a few years, but my video editing lacks any expertise – your detailed instructions give me hope. ☺️

Love it... How did you get it to look like you? Did you upload images, or is there a description of you in ChatGPT knowledge? How about the voice - is there a way to get it closer to your own?