Reddit called OpenAI's new prompts 'garbage'. They're right.

Generic prompts without context produce generic output. Here's the alternative no one's teaching.

Yesterday’s article covered GPT-5’s routing system: how the model chooses reasoning depth and output length separately, and why your outputs turned out shallow or bloated. Today’s article shows you how to structure the input those routing controls act on.

Use both. Yesterday’s article made the engine work harder. Today’s article gives it better fuel.

Hey Adopter,

OpenAI just released “Prompt Packs” for every job function. Reddit tore them apart in 48 hours.

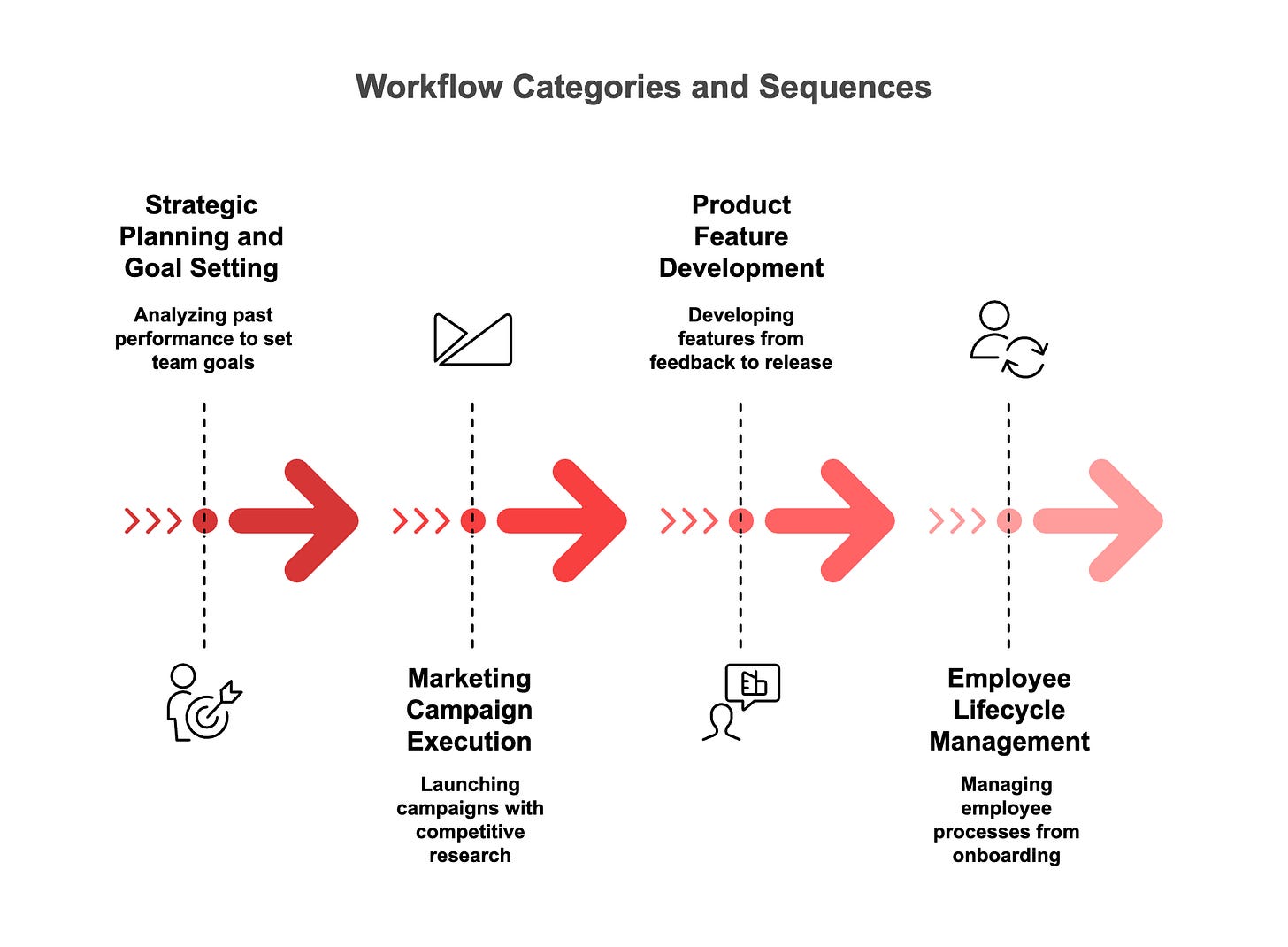

This article shows you why those prompts fail and what actually separates useful AI output from generic slop. You’ll see one working example of the C.O.R.E. model (the four-component framework that transforms vague requests into sharp, contextual prompts). The full guide gives you ready-to-use workflow prompts for strategic planning, marketing campaigns, product development, and more, plus the context engineering techniques that keep AI conversations sharp across 50 messages instead of degrading after three.

I built this guide because OpenAI’s snippets embody exactly what’s wrong with how most people approach prompt engineering. Treat prompting as a copy-paste exercise and you get copy-paste results. The real skill lies in workflow thinking, context architecture, and question layering. This guide teaches you those fundamentals instead of handing you another template library.

The launch that landed flat

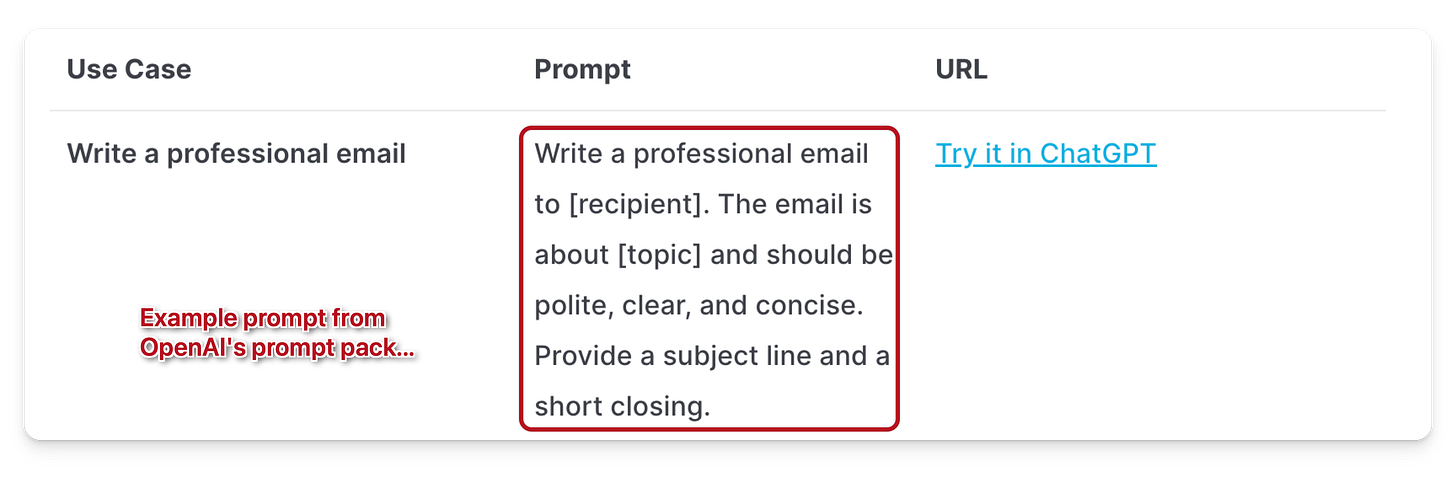

OpenAI dropped curated prompt libraries for sales, HR, engineering, marketing, and nine other functions. Plug-and-play templates designed to save hours of work. The reaction was swift: “pretty garbage”, “basic”, “low quality prompts”, “terrible because there are already people who take whatever AI spits out”.

One systems specialist summarised the core issue: prompts built on personal ego rather than customer needs produce poor products. An engineer noted these might work for beginners, but anyone trying to differentiate will “just sound like everyone else and have people skip over your email slop.”

The real problem is not that the prompts exist. It’s that they treat prompt engineering as a copy-paste exercise rather than a structured thinking discipline.

Why generic prompts guarantee generic output

A sales outreach prompt with zero situational context produces the same mediocre email as everyone else using that prompt. Marketing copy that ignores your actual positioning sounds like it came from a template, because it did.

The issue is not whether AI can write. It’s whether you know how to make AI write something worth reading. That requires three things the Prompt Packs skip: workflow thinking, context architecture, and question layering.

Most people treat AI like a vending machine. Press button, get result. Professional work is a chain. You analyse competitors, build a messaging strategy, write copy, then create sales talking points. Each step builds on the last. When you treat these as separate tasks, you have to carry context between prompts by hand. The AI loses details. Your strategy drifts.

Workflow thinking means treating your project as one continuous conversation. You give context once at the start. Each output becomes input for the next step. The AI remembers what you’re trying to accomplish.
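For readers who script their AI work, workflow thinking maps onto a simple pattern: keep one message history, state the context once, and append each reply before the next step. The Python sketch that follows assumes a generic chat-style interface that takes a list of messages. `run_workflow` and `fake_model` are hypothetical names, and `fake_model` is a placeholder you would swap for a real model call.

```python
from typing import Callable

# A message is a (role, text) pair, loosely mirroring the chat format many LLM interfaces use.
Message = tuple[str, str]


def run_workflow(
    context: str,
    steps: list[str],
    call_model: Callable[[list[Message]], str],
) -> list[str]:
    """Run every step inside one shared conversation (hypothetical helper).

    The context is stated once, up front; each reply is appended to the
    history, so later steps see everything that came before.
    """
    history: list[Message] = [("user", context)]
    outputs: list[str] = []
    for step in steps:
        history.append(("user", step))
        reply = call_model(history)  # the model receives the full history so far
        history.append(("assistant", reply))
        outputs.append(reply)
    return outputs


# Hypothetical stand-in for a real model call: it only reports how many
# messages it received, so you can watch the history grow step by step.
def fake_model(history: list[Message]) -> str:
    return f"reply to {len(history)} messages"


outputs = run_workflow(
    "We sell project management software to construction firms.",
    ["Analyse competitors.", "Build a messaging strategy.", "Write the copy."],
    fake_model,
)
print(outputs)
```

Because the history is shared, the third step's request arrives together with the original context and both earlier replies. In a chat window, that accumulated history is what the conversation keeps for you.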

One prompt that actually works

Here’s a contrast. Instead of “write a competitive analysis”, try this structure:

Context: We’re a B2B SaaS company selling project management software to construction firms. We’re losing deals to BuildRight.

Objective: Generate three distinct competitive battlecard angles our sales team can use to counter objections about our pricing.

Role: Act as a seasoned competitive intelligence analyst with deep expertise in SaaS sales strategies.

Examples:

Good: “While BuildRight offers a lower entry price, our all-inclusive model delivers 20% lower total cost of ownership.”

Bad: “We are better than them.”

This is the C.O.R.E. model: Context, Objective, Role, Examples. Four components that shift output from generic to useful. It tells the AI who you are, what you need, what expertise to apply, and what good looks like.
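If you reuse the C.O.R.E. structure often, it can help to treat it as a fill-in-the-blanks object that refuses to build a prompt with a missing component. Here is a minimal Python sketch under that assumption. `CorePrompt` and `render` are hypothetical names and are not part of any tool or of the full guide. The usage reproduces the battlecard prompt above.

```python
from dataclasses import dataclass, field


@dataclass
class CorePrompt:
    """Hypothetical container for the four C.O.R.E. components."""
    context: str    # who you are and what situation you're in
    objective: str  # the specific output you need
    role: str       # the expertise the AI should apply
    good_examples: list[str] = field(default_factory=list)  # what good looks like
    bad_examples: list[str] = field(default_factory=list)   # what to avoid

    def __post_init__(self) -> None:
        # Refuse to build a generic prompt: every text component must be filled in.
        for name in ("context", "objective", "role"):
            if not getattr(self, name).strip():
                raise ValueError(f"C.O.R.E. component '{name}' is empty")
        if not self.good_examples:
            raise ValueError("add at least one example of good output")

    def render(self) -> str:
        # Sections follow the article's order: Context, Objective, Role, Examples.
        sections = [
            f"Context: {self.context}",
            f"Objective: {self.objective}",
            f"Role: {self.role}",
            "Examples:",
        ]
        sections += [f'Good: "{ex}"' for ex in self.good_examples]
        sections += [f'Bad: "{ex}"' for ex in self.bad_examples]
        return "\n\n".join(sections)


prompt = CorePrompt(
    context=("We're a B2B SaaS company selling project management software "
             "to construction firms. We're losing deals to BuildRight."),
    objective=("Generate three distinct competitive battlecard angles our sales "
               "team can use to counter objections about our pricing."),
    role=("Act as a seasoned competitive intelligence analyst with deep "
          "expertise in SaaS sales strategies."),
    good_examples=["While BuildRight offers a lower entry price, our all-inclusive "
                   "model delivers 20% lower total cost of ownership."],
    bad_examples=["We are better than them."],
)
print(prompt.render())
```

The validation in `__post_init__` is a design choice, not a rule from the article: it simply makes a context-free request fail loudly instead of producing a generic prompt.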

Question layering goes further. Before asking for the final output, embed thinking steps:

Analytical: Identify the top five decision criteria. Assign weights.

Critical: For each option, name the single greatest hidden risk.

Procedural: For your recommendation, outline the first three implementation steps.

Final output: Present analysis in a comparison table with weighted criteria, followed by a one-paragraph executive summary.

You’re forcing the AI to show its work. To consider multiple angles. To build up to the answer instead of jumping straight there.
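Question layering is also easy to template: the thinking steps sit between the task and the final-output instruction, numbered so the model works through them in order. Here is a minimal Python sketch, assuming you paste the resulting text into any chat interface. `layer_questions` is a hypothetical helper, and the task sentence in the usage is an invented example. The three layers and the final-output line come from the prompt above.

```python
def layer_questions(task: str, layers: dict[str, str], final_output: str) -> str:
    """Hypothetical helper: place thinking steps before the final-output request.

    `layers` maps a label such as "Analytical" to that step's instruction.
    Dicts preserve insertion order (Python 3.7+), so steps keep the order given.
    """
    parts = [task, "Before giving your final answer, work through these steps:"]
    for number, (label, instruction) in enumerate(layers.items(), start=1):
        parts.append(f"{number}. {label}: {instruction}")
    # The deliverable comes last, after the model has built up its reasoning.
    parts.append(f"Final output: {final_output}")
    return "\n".join(parts)


prompt = layer_questions(
    task="Recommend which of our three shortlisted vendors we should choose.",  # invented example task
    layers={
        "Analytical": "Identify the top five decision criteria. Assign weights.",
        "Critical": "For each option, name the single greatest hidden risk.",
        "Procedural": "For your recommendation, outline the first three implementation steps.",
    },
    final_output=("Present the analysis in a comparison table with weighted criteria, "
                  "followed by a one-paragraph executive summary."),
)
print(prompt)
```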

Download the full guide

Inside: C.O.R.E. model, question layering, context engineering, 14 workflow prompts (strategy, marketing, product, HR), and troubleshooting.

Stop collecting templates. Start understanding how this works.

Adapt and Create,

Kamil
