How Do People Use ChatGPT? What 700 Million Users Reveal

The data shows two types of users. One group is crushing it.

Hey Adopter,

Here's what I'm going to show you in the next few minutes: why some people get incredible results from AI while others waste their time on busywork that doesn't move the needle.

The difference isn't what tools they use or how much they spend. It's how they think about the problem AI actually solves.

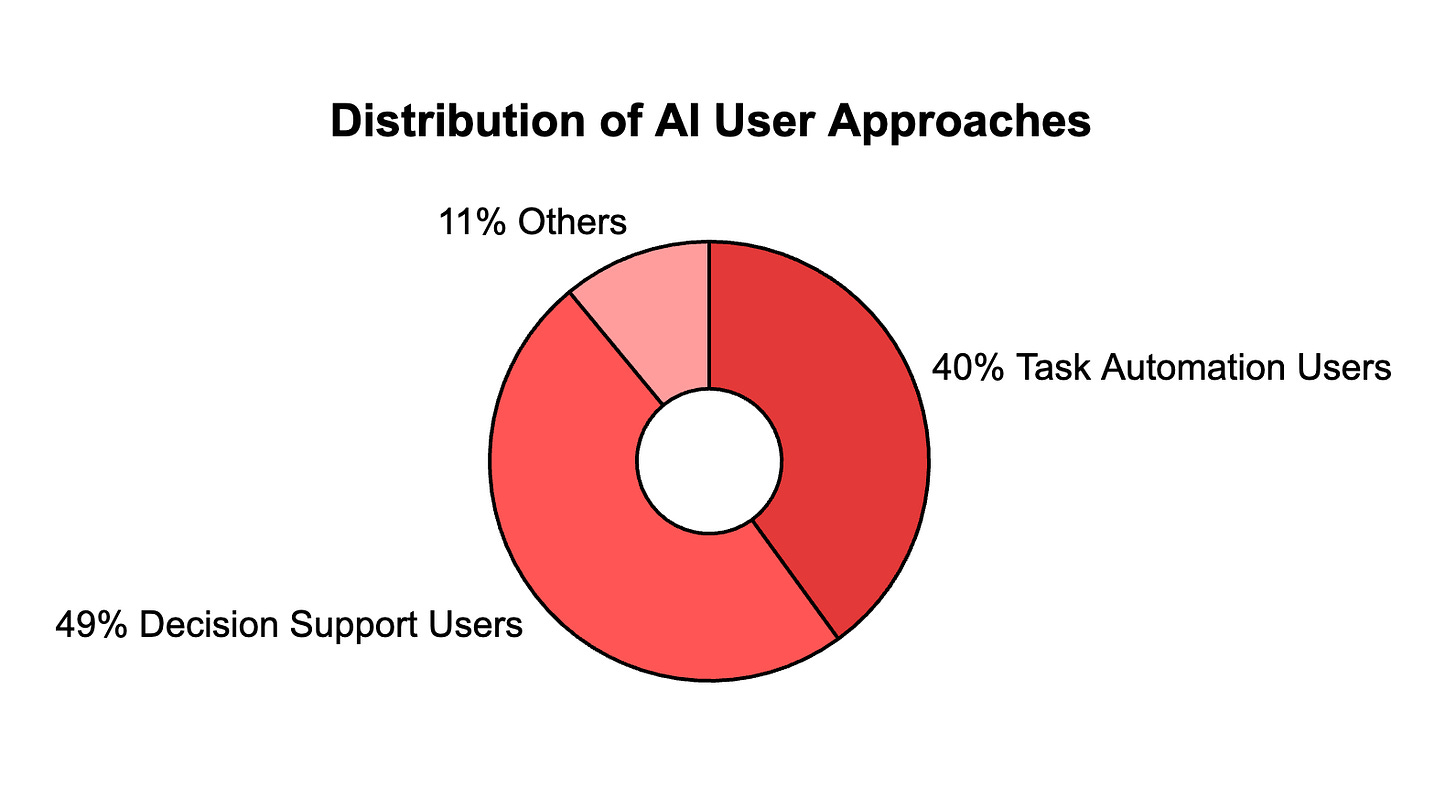

New research tracking 700 million ChatGPT users through July 2025 just revealed a massive gap between two types of AI users. One group treats AI like a task-completing robot. The other treats it like a thinking partner.


The thinking-partner group gets significantly better results. They report higher satisfaction with their AI interactions. Their usage patterns are growing faster. And here's the kicker: they tend to work in higher-level jobs and earn more money.

This isn't about being smarter or having better access to technology. It's about understanding what AI is actually good at versus what most people think it's good at. The gap between these two approaches is widening, and if you're on the wrong side of it, you're falling behind without realizing it.

Here's exactly what the data reveals about who wins with AI and why.

Users in highly paid professional occupations are substantially more likely to use ChatGPT for "Asking" rather than "Doing" messages. The gap isn't small. It's a chasm that reveals two completely different approaches to AI.

The sophistication split

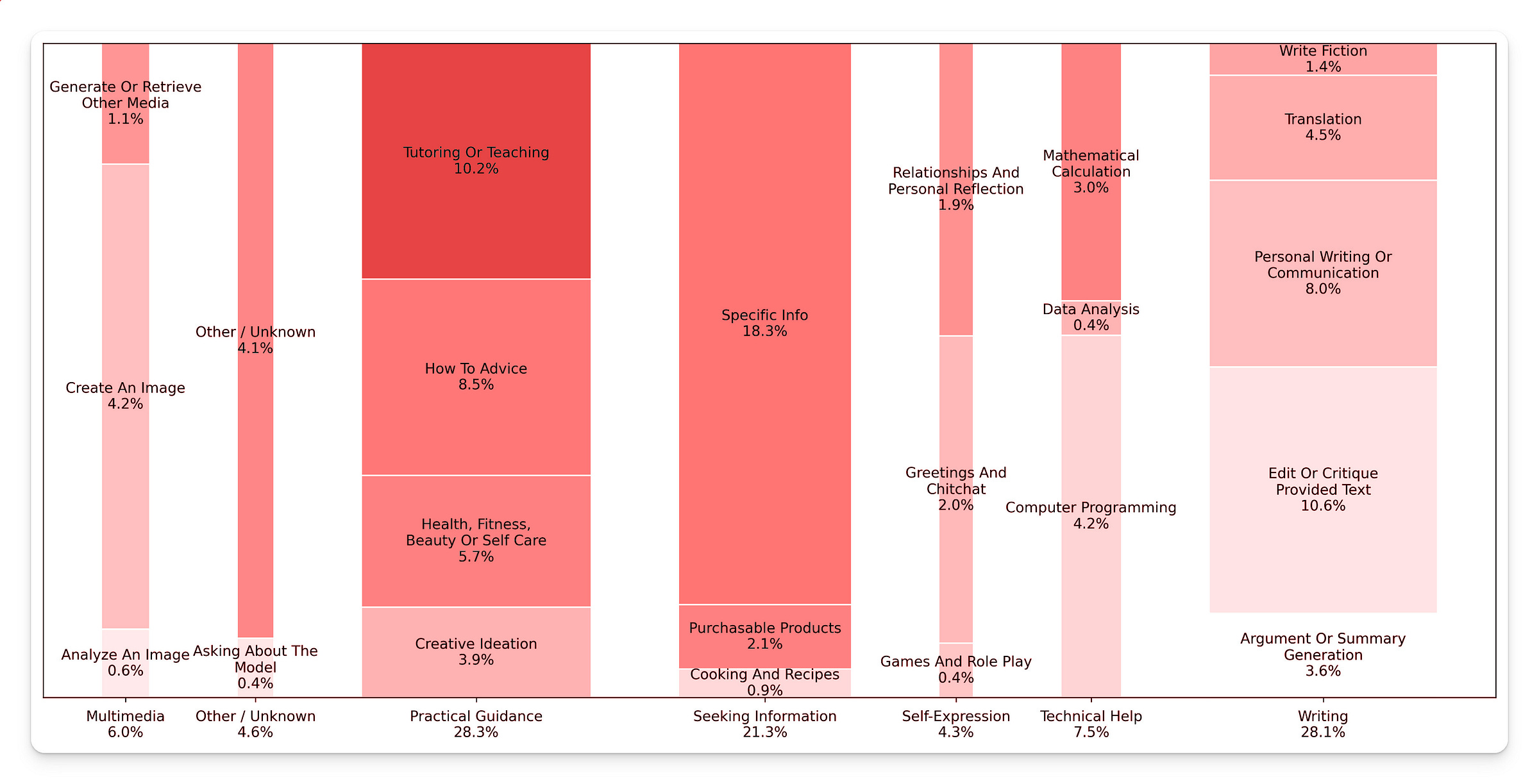

Here's what the 700 million conversations actually look like when broken down by user sophistication:

The task automation crowd: They ask AI to write emails, generate reports, create presentations. They want output they can copy and paste directly into their workflow.

The decision support crowd: They ask AI to help them think through problems, analyze information, and improve their reasoning. They want better inputs for decisions they'll make themselves.

The quality difference shows up in the data. "Asking" messages (seeking advice and information) score consistently higher on user satisfaction than "Doing" messages (task completion). They're also growing faster, increasing their share of total usage over the past year.

But here's what makes this finding reliable: this isn't survey data or speculation. The researchers analyzed actual behavior from real conversations, and their automated classifier could identify work versus personal use more accurately than human reviewers looking at the same messages.

The sophisticated users figured out something the task-automation crowd missed.

What the data tells us about results

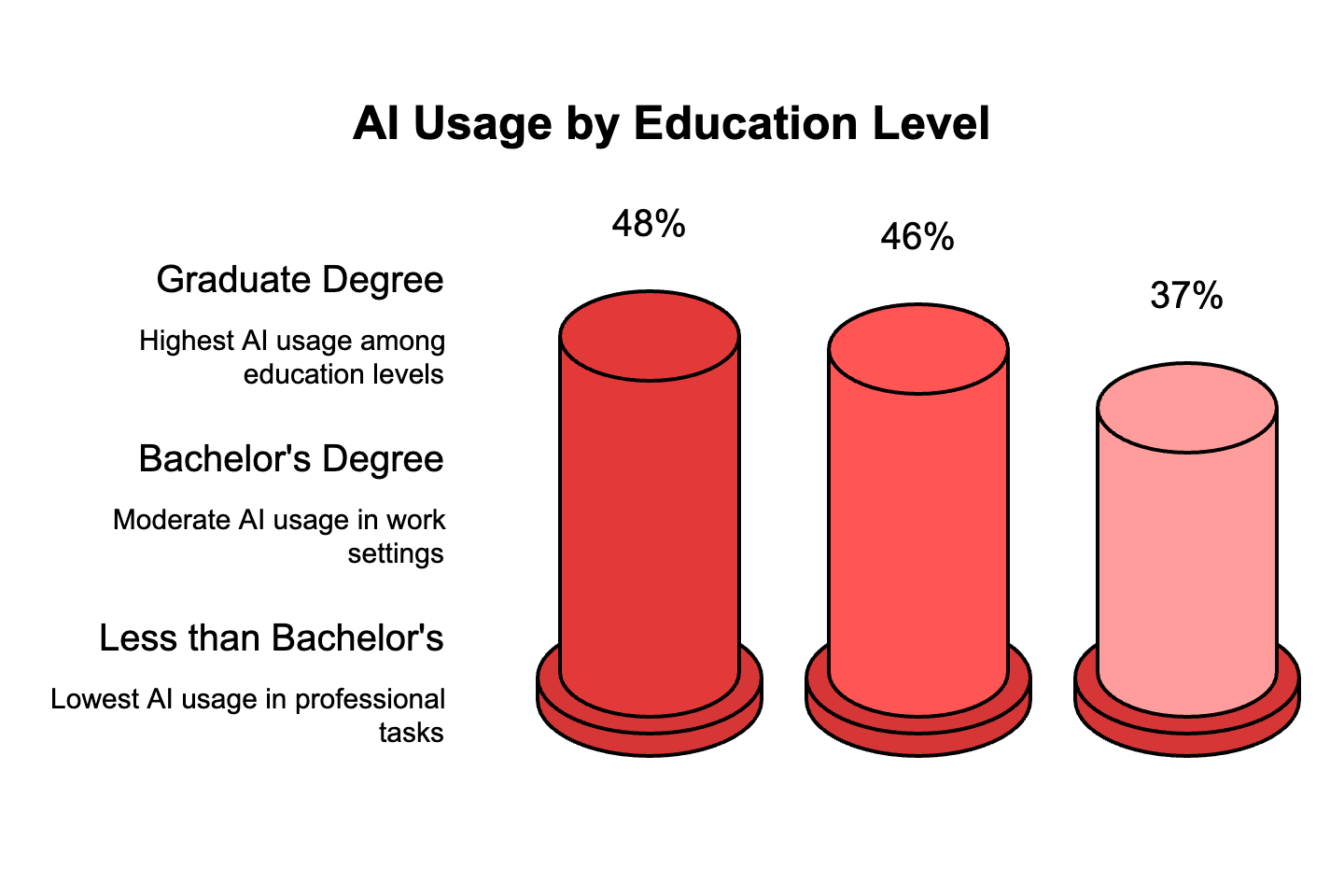

Users with graduate degrees are significantly more likely to send "Asking" messages than users with less education. The same pattern holds for professional occupations versus administrative roles.

This isn't about access or technical skills. It's about understanding where AI actually delivers value.

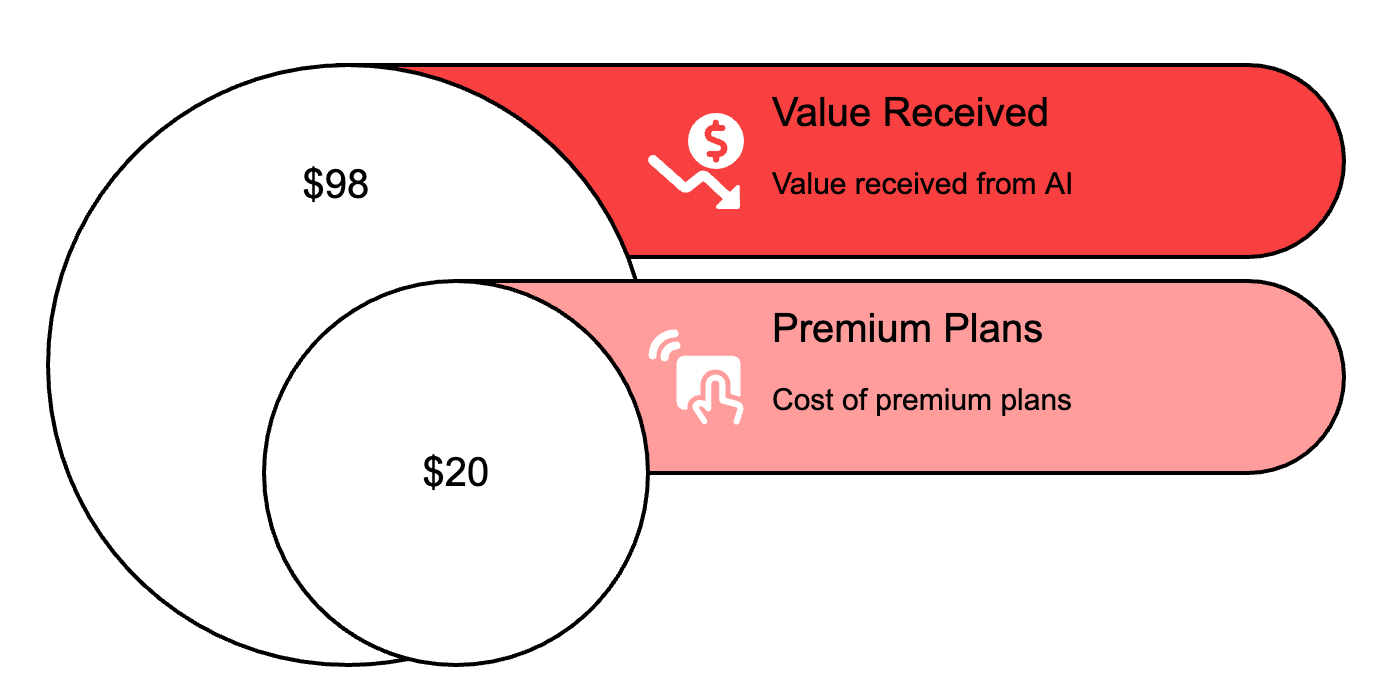

The $98 monthly figure in the chart above isn't just what people say they'd pay. It's what economists measured people actually getting in value from using AI for decision support.

To me, this number is really low. I’d happily pay $2,000/month based on the value that I get from it, but I realize that I’m an outlier. (Also, I hope OpenAI is not listening :D)

Combined with research estimating $97 billion in total value Americans get from AI, this suggests that treating AI as a consultant creates real economic benefit at massive scale.

Meanwhile, technical help usage dropped from 12% to 5% over the past year. Programming automation tools are losing ground while general consultation platforms grow. Your competitors obsessing over code generation are optimizing for a shrinking segment.

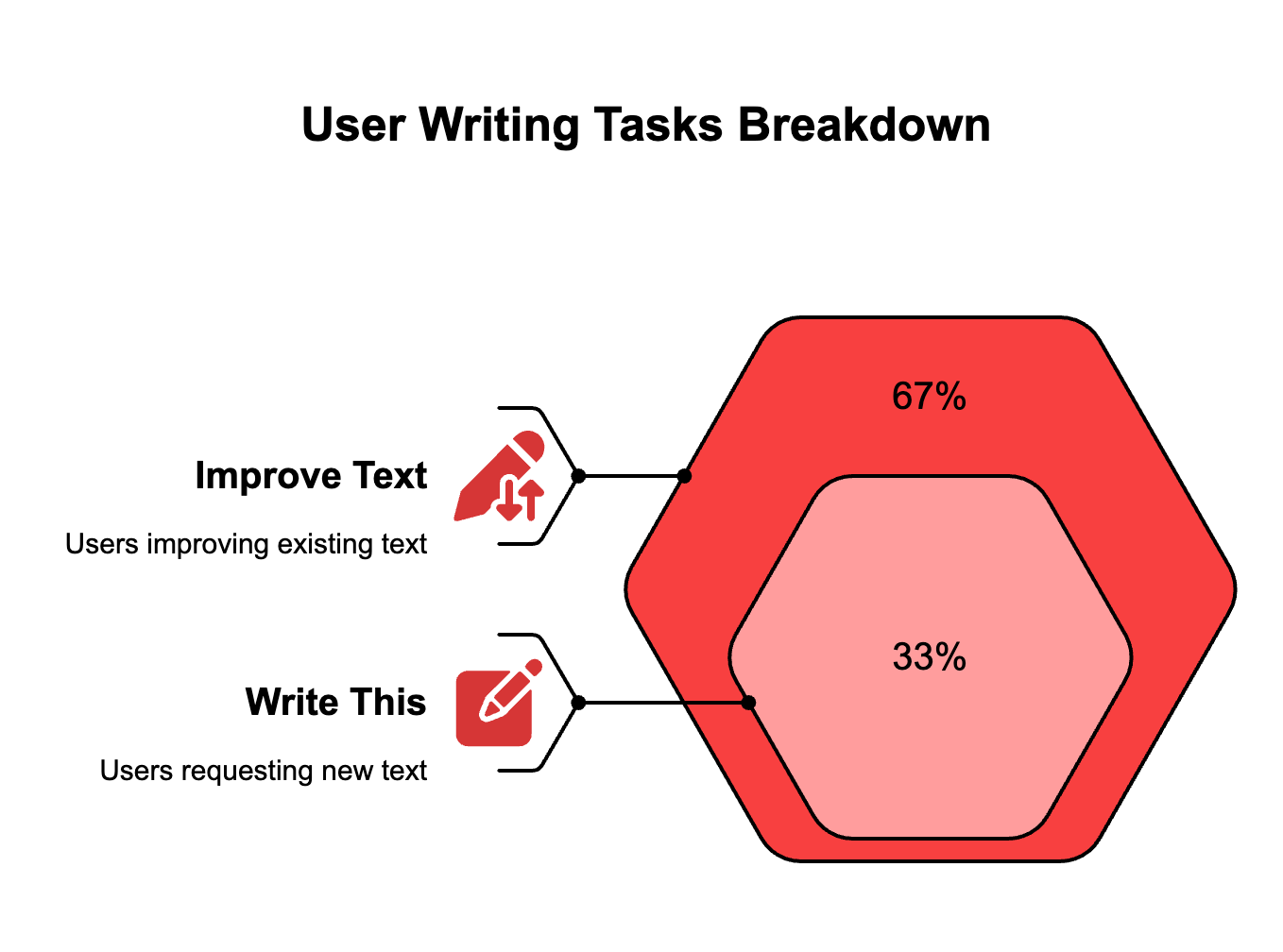

Consider the writing category, which accounts for 42% of all work-related ChatGPT usage. Two-thirds of those conversations aren't asking AI to create something new. They're asking it to improve something that already exists.

The difference matters more than most people realize. When you ask AI to write your report, you get generic output constrained by the tool's training patterns. When you ask AI to critique your draft and suggest improvements, you get feedback tailored to your specific thinking.

The real competitive advantage

The research maps ChatGPT usage to specific work activities. Here's what sophisticated users actually do: 81% of their work-related AI conversations fall into just two categories.

Information processing: getting, documenting, and interpreting data.

Decision support: analyzing problems, solving challenges, thinking creatively.

Notice what's missing from that list. Task completion. Process automation. The stuff most AI training focuses on.

The sophisticated users treat AI like a research partner, not a virtual assistant. They're not trying to eliminate their judgment. They're trying to improve it.

Why most AI advice gets it backwards

Every productivity guru tells you to find tasks AI can do for you. The actual usage data from 700 million people suggests the opposite approach works better.

Find decisions AI can help you make.

The users getting the highest satisfaction scores aren't asking "What can AI do for me?" They're asking "How can AI help me think about this differently?"

That shift in approach explains why their messages score higher on quality metrics. It explains why "Asking" usage is growing faster than "Doing" usage. It explains why professional knowledge workers use AI differently than administrative workers.

They discovered that AI's value isn't in replacing human judgment. It's in enhancing it.

The practical takeaway: Stop using AI to avoid thinking. Start using it to think better.

The sophisticated users already figured this out. The gap between their results and everyone else's is only going to widen.

Adapt & Create,

Kamil
