Get Ahead of Trump's Massive AI Policy Shift

The insider breakdown that gives you a real competitive edge

TL;DR: President Trump's July 2025 AI Action Plan throws out climate concerns, bans "woke AI" from government, and promises to build infrastructure faster than physics allows. It's either the boldest tech strategy in decades or a recipe for expensive chaos.

Hey Adopter,

The 28-page document reads like a declaration of war, not a policy paper. "Whoever has the largest AI ecosystem will set global AI standards and reap broad economic and military benefits," it declares in the opening pages.

Translation: This isn't about making ChatGPT better at writing emails. The administration sees AI as the ultimate winner-take-all contest that determines global power for the next century.


The "build fast, regulate never" philosophy

Regulations slow down innovation, so we're getting rid of them. All of them.

The plan doesn't just trim red tape. It takes a chainsaw to it. Every federal agency gets marching orders to identify and eliminate any rule that might slow AI development. The FTC gets told to review all Biden-era investigations for "theories of liability that unduly burden AI innovation."

"Restricting AI development with onerous regulation would not only unfairly benefit incumbents… it would mean paralyzing one of the most promising technologies we have seen in generations," Vice President Vance argued at the Paris AI Action Summit.

But here's where it gets spicy. States with "burdensome AI regulations" could lose federal funding. California and New York, with their comprehensive AI laws, are essentially being told to change course or lose cash. The administration is betting that financial pressure will override state sovereignty on tech policy.

The legal challenges alone will take years. Constitutional scholars are already raising eyebrows about using federal funding as a weapon against state policy choices. But the message is clear: regulation is the enemy of progress.

Enter the war on "woke AI"

AI systems should give you facts, not lecture you about social justice.

This might be the most controversial section in the entire document. The administration demands that federal agencies buy only AI systems that are "free from ideological bias" and "designed to pursue objective truth rather than social engineering agendas." They want NIST to scrub its AI Risk Management Framework of any mention of misinformation, diversity, equity, inclusion, or climate change.

Think about what this means in practice. If you're selling AI to the government, your system can't flag potentially harmful content as misinformation. It can't account for racial bias in criminal justice algorithms. It can't consider climate impacts in infrastructure planning.

The plan even calls for evaluating Chinese AI models for "alignment with Chinese Communist Party talking points." Apparently, ideological bias is only bad when it comes from the other side.

Government contractors are now caught between contradictory demands. Build AI that's completely neutral (impossible) while also explicitly rejecting certain viewpoints (ideological). Good luck threading that needle.

Infrastructure promises that defy physics

We need to double America's energy capacity to power AI data centers, and we need to do it fast.

Here's where ambition crashes into reality. The plan calls for streamlining environmental reviews, opening federal lands for data centers, and keeping every power plant online regardless of age or efficiency. The motto is literally "Build, Baby, Build!"

But the numbers don't work. Current US data centers consume 147 terawatt-hours (TWh) annually. Projections show this could hit 606 TWh by 2030, nearly 12% of all US electricity. To put that in perspective, the added demand is roughly equivalent to adding another Texas to the grid.
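For anyone who wants to sanity-check the scale, here is a quick back-of-the-envelope sketch in Python using only the figures quoted above. One loud assumption: the article doesn't say which year "current" refers to, so the `years_to_2030` value below is a hypothetical 2025 baseline, and the annual growth rate it produces is only as good as that guess.

```python
# Figures quoted in the paragraph above (TWh per year).
current_twh = 147.0
projected_twh = 606.0
projected_share = 0.12  # "nearly 12%" of all electricity in 2030

# How many times larger the projected demand is than today's.
growth_multiple = projected_twh / current_twh

# Extra demand the grid would have to absorb.
added_twh = projected_twh - current_twh

# Total annual electricity use implied by the 12% share.
implied_total_twh = projected_twh / projected_share

# ASSUMPTION (not from the article): "current" means 2025, so 5 years to 2030.
years_to_2030 = 5
implied_annual_growth = growth_multiple ** (1 / years_to_2030) - 1

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Added demand: {added_twh:.0f} TWh")
print(f"Implied total electricity use in 2030: {implied_total_twh:.0f} TWh")
print(f"Implied annual growth (assumed baseline): {implied_annual_growth:.1%}")
```

Change `years_to_2030` if you believe the 147 TWh figure comes from an earlier year; the longer the window, the gentler the required growth rate.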

The administration promises to build as much new electricity capacity as China in a single year. China has been building energy infrastructure at breakneck speed for decades with massive state resources and few environmental constraints. The US permitting system, even streamlined, typically requires 5+ years for major projects.

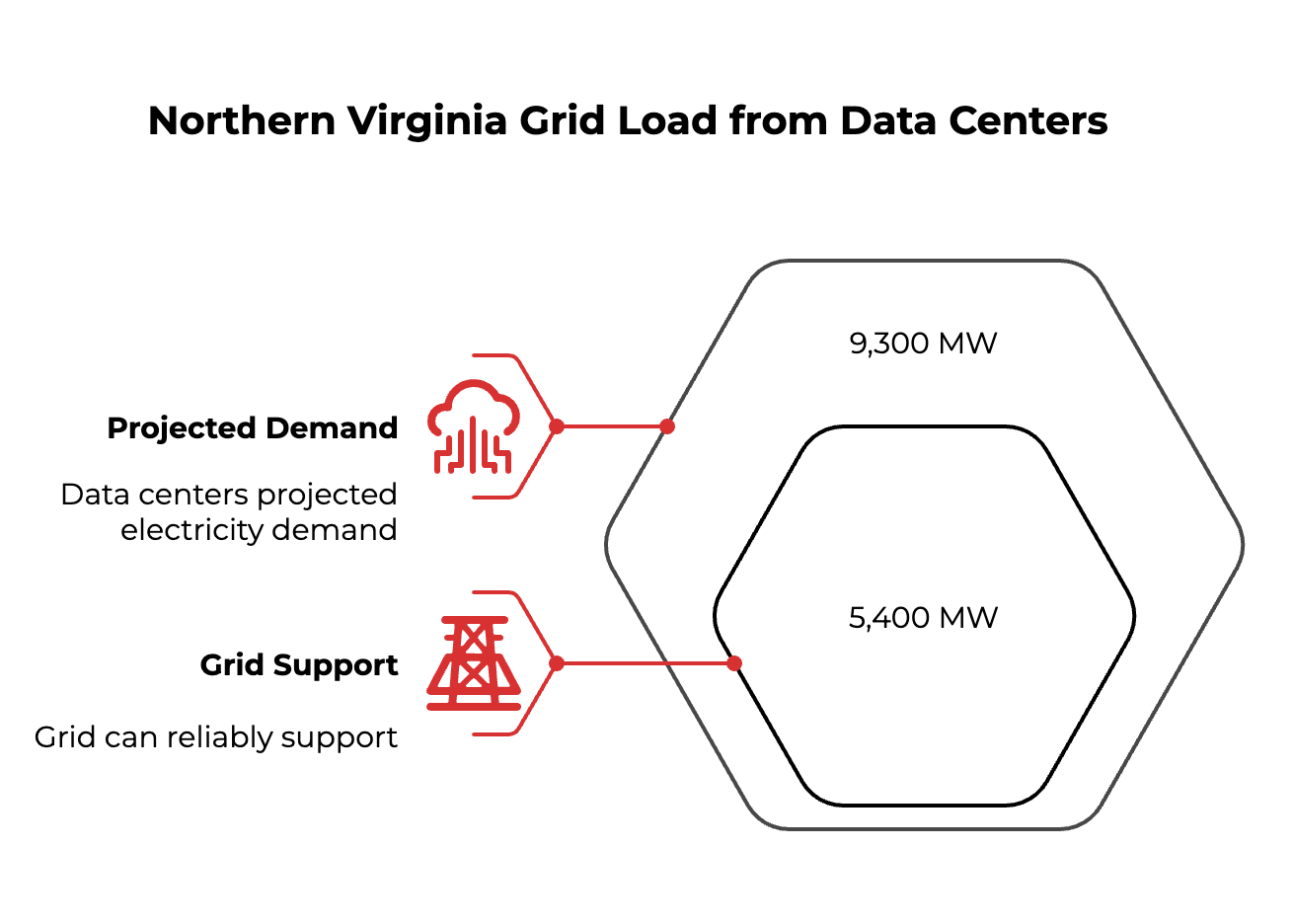

The plan acknowledges that some regional grids literally cannot handle projected AI demand. In Northern Virginia, data centers already account for 24% of grid load, and demand could reach 9,300 MW by 2028 when the grid can reliably support only 5,400 MW.
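The Northern Virginia gap is easier to feel as arithmetic. This is a minimal sketch using only the two megawatt figures quoted above; the variable names are mine, and it ignores anything the article doesn't mention (planned upgrades, imports from neighboring grids, demand response).

```python
# Figures quoted in the paragraph above (MW).
required_mw = 9_300   # projected need by 2028
supported_mw = 5_400  # what the grid can reliably support

# Capacity that would have to be found somewhere.
shortfall_mw = required_mw - supported_mw

# Shortfall relative to what the grid can support today.
overage_pct = shortfall_mw / supported_mw * 100

# Share of the projected need the grid could actually cover.
coverage_pct = supported_mw / required_mw * 100

print(f"Shortfall: {shortfall_mw:,} MW")
print(f"Demand exceeds reliable capacity by {overage_pct:.0f}%")
print(f"Grid covers {coverage_pct:.0f}% of projected need")
```

In other words, on these numbers the region would need to find well over half again its reliable capacity in just a few years.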

Physics doesn't care about policy timelines.

Open source gets a massive push

America should lead in AI models that anyone can download and modify, not just closed systems like GPT-4.

This is actually smart strategy disguised as technical policy. Open-source AI models can run on your own servers with your own data. No sending sensitive information to OpenAI or Google. No dependence on API access that can be cut off.

For businesses dealing with confidential data, proprietary information, or regulatory requirements, this matters enormously. Open models make GDPR compliance easier because European customer data never has to leave your own infrastructure. You can run AI on classified government networks. You can modify the model for your specific industry needs.

The plan pushes for financial markets in compute power, with spot and forward contracts much like commodities trading. This could democratize access to the massive computing resources needed for AI development. Right now, only a handful of companies, such as Google, Microsoft, and Amazon, have enough compute to train frontier models. Opening that up changes the game completely.

But there's a security tradeoff. Open models can be downloaded by anyone, including bad actors who want to build bioweapons or launch cyberattacks. The plan acknowledges this tension but lands on the side of openness.

What this means for your business

The policy shifts create winners and losers across multiple industries. Here's where to focus your attention:

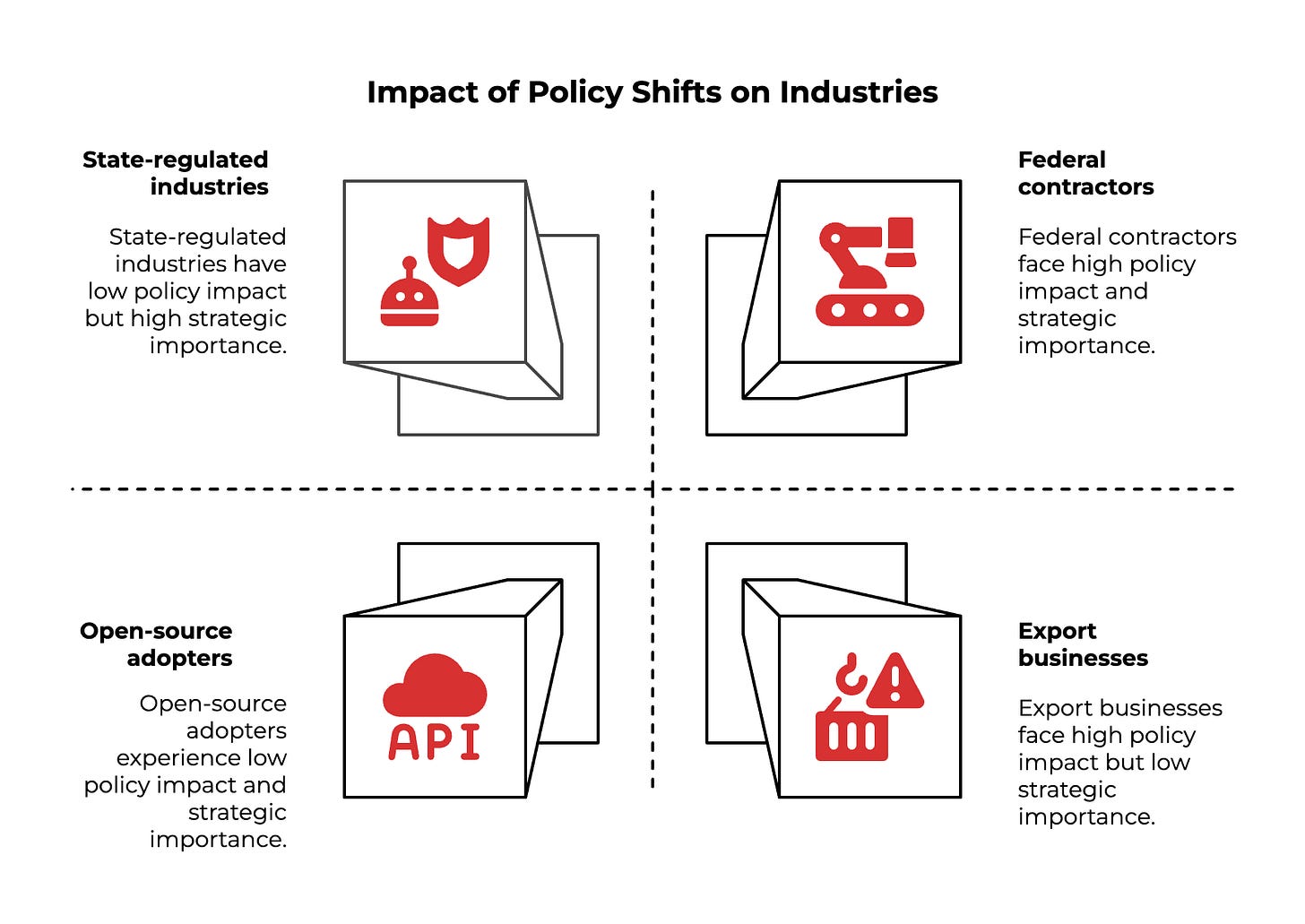

Federal contractors need to start mapping AI systems against new "objective and unbiased" requirements. If your AI flags certain content as problematic, expect pushback. Document your model's decision-making process because you'll need to prove ideological neutrality.

Energy-intensive operations should plan for data center construction getting easier but power costs heading up. Demand response programs and behind-the-meter generation become essential. Long-term power purchase agreements are about to get very expensive.

State-regulated industries must watch for federal-state conflicts over AI rules. California's AI safety requirements could clash with federal procurement standards. You might need separate systems for different jurisdictions.

Open-source adopters face their biggest opportunity yet. Federal agencies will prioritize vendors who can run models on-premises. Start building capabilities around open-weight models like Llama and Mistral now.

Export businesses need compliance program upgrades. Chip and AI service export controls are tightening. Location verification requirements for semiconductors are coming.

The worker promise that nobody believes

"AI will improve the lives of Americans by complementing their work—not replacing it," the plan promises. But it provides exactly zero binding mechanisms to ensure this outcome.

The document establishes an "AI Workforce Research Hub" and calls for retraining programs, but offers no protections against job displacement. The focus on removing regulatory barriers and accelerating adoption could outpace workforce adaptation programs by years.

Historical precedent suggests this optimism is misplaced. Previous technological revolutions displaced workers faster than retraining could accommodate them. The plan's emphasis on speed over worker protection reveals its true priorities.

My take: Smart strategy wrapped in political theater

Strip away the partisan rhetoric and contradictory demands, and you'll find something surprising: several genuinely brilliant strategic moves buried in this mess.

The open-source push is transformative. Breaking the compute oligopoly through financial markets could democratize AI development in ways we haven't seen since the early internet. The infrastructure focus, however unrealistic the timelines, addresses America's genuine competitive disadvantage against China's state-directed buildout.

But the plan's biggest weakness isn't its ambition—it's its internal contradictions. You can't demand "objective" AI while explicitly rejecting certain viewpoints. You can't promise to complement workers while eliminating every protection against displacement. You can't claim to prioritize innovation while conducting ideological purity tests on algorithms.

The real opportunity here isn't in the policy details. It's in the permission structure this creates for rapid experimentation. When the government says "build fast and break things," smart operators will do exactly that—while planning for the inevitable course corrections when reality intrudes.

The companies that win will be the ones that take the genuine strategic insights seriously while ignoring the political posturing. Focus on open-source capabilities. Build energy-efficient infrastructure. Prepare for export control complexity. And always remember: ambitious government plans create more opportunities than they destroy, even when they don't work as intended.

The bottom line

This plan represents the biggest bet on technological acceleration over regulatory caution in modern American history. It could unleash unprecedented innovation and cement US dominance in the defining technology of the century.

Or it could create massive infrastructure bottlenecks, legal chaos, and security vulnerabilities while burning through taxpayer money on impossible timelines.

The administration is gambling that speed trumps safety, that deregulation enables innovation, and that America can out-build China through pure determination. History will judge whether this was visionary leadership or expensive hubris.

Either way, buckle up. The next few years will be anything but boring.

Adapt & Create,

Kamil

I don't mean to be an extremist, but this policy creates a system where academic work is no longer judged solely on its rigor and merit, but on its political alignment. The policy also weaponizes the very digital tools we use for learning and turns them into instruments of surveillance and compliance.

As an educator who has incorporated AI into my classrooms, I encourage students to use it for any and all assignments as a way to think critically about any problem before them.

I see it as effectively silencing any inquiry that challenges a specific, state-approved definition of "objective truth."

Buckle up, Buttercup! This is going to be an interesting ride! Thanks for your insights!