Get Better AI Answers with Context Engineering, Not Prompt Engineering

Why your ChatGPT chats degrade and what to do about it

Hey Adopter,

You’re three hours into a Claude conversation. Early responses were sharp. Now it’s forgetting things you said an hour ago. Mixing up details. Giving generic answers.

Your prompt didn’t get worse. Your context got polluted.

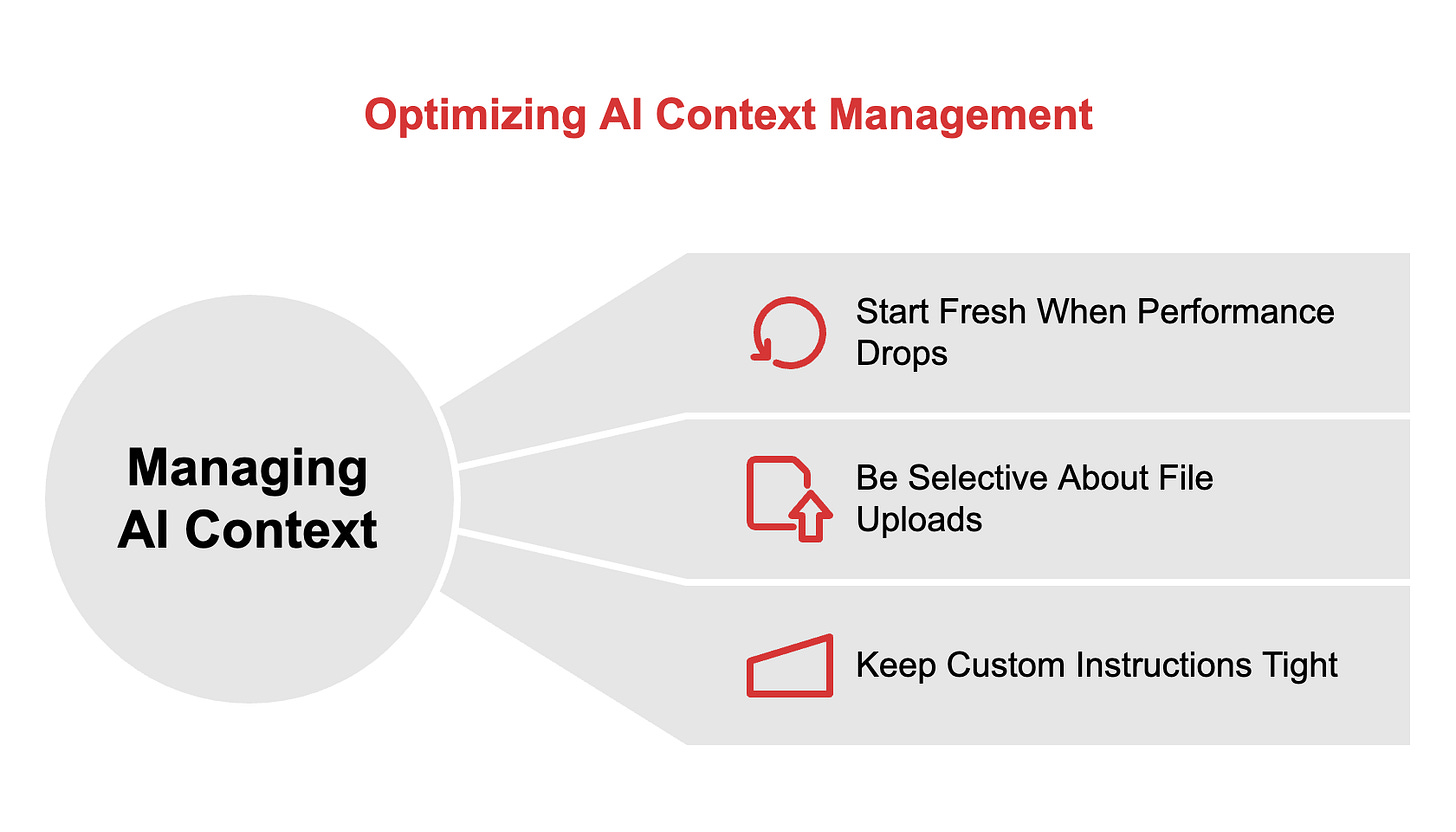

What you’ll learn: Why your conversations degrade. Three practical rules for managing what the model sees. When to start a new chat versus continuing an old one. How to set up Projects and custom GPTs without drowning the model in noise.

Who needs this: You use Claude Projects, ChatGPT, or custom GPTs daily. You upload files to conversations. You have long threads where you’re refining ideas. Performance starts strong but degrades. The model forgets context or gives worse answers as the chat continues.

Who can skip this: You write single prompts and move on. No file uploads. No multi-hour conversations. No Projects with knowledge bases. Your current setup works. Bookmark this for when it doesn’t.

Your chat has an attention budget

Anthropic published research in September 2025 on what they call context engineering. The core finding: models don’t have infinite focus. They have an attention budget.

Every message in your chat history depletes it. Every uploaded file takes a cut. Every document in your Project knowledge base competes for the model’s working memory.
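To make the budget idea concrete, here is a minimal Python sketch that tallies how much of a context window each source in a chat takes up. It rests on two assumptions that are not from the research above. The first is roughly 4 characters per token, a common rule of thumb for English text rather than a real tokenizer. The second is a hypothetical 200,000-token window. Token share is only a proxy for attention. The point is that history, files, and knowledge-base documents all draw from the same pool.

```python
import math

# Assumption: ~4 characters per token, a rough rule of thumb for English text.
# Each model's real tokenizer will give different counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def budget_report(sources: dict[str, list[str]], window: int) -> dict[str, float]:
    """Return each source's share of the context window as a fraction of `window`.

    `sources` maps a label (e.g. "chat history") to the texts it contributes.
    A "free" entry holds whatever is left over, clamped at zero.
    """
    report: dict[str, float] = {}
    used = 0
    for label, texts in sources.items():
        tokens = sum(estimate_tokens(t) for t in texts)
        report[label] = tokens / window
        used += tokens
    report["free"] = max(window - used, 0) / window
    return report


if __name__ == "__main__":
    # Hypothetical inputs: a 200,000-token window and made-up content sizes.
    window = 200_000
    sources = {
        "chat history": ["x" * 2_000] * 60,        # 60 messages of ~500 tokens
        "uploaded files": ["x" * 120_000],         # one ~30,000-token file
        "project knowledge": ["x" * 80_000] * 3,   # three ~20,000-token docs
    }
    for label, share in budget_report(sources, window).items():
        print(f"{label:>18}: {share:.0%}")
```

With these made-up sizes, the script reports 15% for chat history, 15% for uploaded files, 30% for project knowledge, and 40% free. Swapping `estimate_tokens` for your model's actual tokenizer would turn the estimates into real counts.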

Feed Claude or ChatGPT 50,000 tokens of context. Ask it to recall something from 20 messages back. Performance drops. This is context rot. The model’s attention stretches thin. Recall gets fuzzy. Responses get generic.
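The recall scenario can be sketched the same way. The Python below, again assuming ~4 characters per token, estimates how much text has piled up on top of a message some number of turns back. It also flags conversations past the 50,000-token mark used in the example. That cutoff is illustrative, not a documented threshold, and `tokens_after` and `context_check` are hypothetical helpers.

```python
import math

CHARS_PER_TOKEN = 4  # assumption: rough heuristic for English text, not a real tokenizer
ROT_THRESHOLD = 50_000  # illustrative cutoff from the 50,000-token example; not a documented threshold


def estimate_tokens(text: str) -> int:
    """Approximate token count using the characters-per-token heuristic."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def tokens_after(messages: list[str], messages_back: int) -> int:
    """Estimate how many tokens were added after the message `messages_back` turns ago.

    messages_back=1 is the most recent message, so nothing comes after it.
    """
    if not 1 <= messages_back <= len(messages):
        raise ValueError("messages_back must be between 1 and len(messages)")
    start = len(messages) - messages_back + 1
    return sum(estimate_tokens(m) for m in messages[start:])


def context_check(messages: list[str], messages_back: int) -> dict:
    """Summarize total context size and how deeply the target message is buried."""
    total = sum(estimate_tokens(m) for m in messages)
    return {
        "total_tokens": total,
        "tokens_after_target": tokens_after(messages, messages_back),
        "over_threshold": total > ROT_THRESHOLD,
    }


if __name__ == "__main__":
    # Hypothetical conversation: 120 messages of ~600 tokens each.
    chat = ["y" * 2_400] * 120
    print(context_check(chat, messages_back=20))
    # {'total_tokens': 72000, 'tokens_after_target': 11400, 'over_threshold': True}
```

In this hypothetical 120-message chat, the message 20 turns back sits under about 11,400 estimated tokens of later text, inside a conversation of about 72,000.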

A July 2025 study testing 18 models found that adding just one distractor document reduces performance. The effect compounds as conversations lengthen. In May 2025 testing, Claude 3.5 Sonnet’s performance fell from 29% to 3% as context grew.

You notice this when:

- The model repeats suggestions you already rejected
- It forgets details from earlier in the conversation
- Answers become vaguer as the thread gets longer
- It asks you to re-explain things you covered three hours ago

Was AI Adopters Club helpful to you? Drop us a testimonial!