Why Judgment Is Your New Career Currency (and how to train it)

Five critical skills that separate executives from executors when machines handle the predictions

Hey Adopter,

Read this to understand which five skills protect your career value when AI automates prediction, and how to build them before your competitors do.

The prediction economy just collapsed your advantage

CNBC reported today that AI will fully replace just 0.7% of job-related skills. Not the catastrophe many predicted. The real disruption? Your technical skills just became table stakes.

Law firms illustrate the shift. Partners now draft contracts in 30 minutes using AI instead of delegating to junior associates. The junior who spent years mastering document structure has no moat. The partner who decides which contract terms to negotiate keeps their job.

Ajay Agrawal’s research frames it cleanly: AI dominates prediction. Humans own judgment. The machines forecast outcomes. You stake your reputation on which forecast to trust and what action to take.

This isn’t about learning to prompt better. It’s about developing skills universities never taught you because they can’t be taught in lecture halls.

Where judgment actually matters

Judgment means choosing between options when data doesn’t decide for you. Three scenarios clarify the difference between prediction and judgment:

Product launch timing. AI models demand curves, competitor responses, manufacturing capacity. All predictions. You decide whether launching into a recession with incomplete inventory is worth the first-mover advantage. That’s judgment.

Budget allocation. AI forecasts ROI across six initiatives. You choose to fund the lowest-ROI project because it builds capabilities for the next decade. That’s judgment informed by strategy, not just spreadsheets.

Hiring trade-offs. AI screens CVs and predicts performance. You hire the candidate with the messy background because they solved problems similar to yours. That’s judgment about what experience actually transfers.

Jakob Nielsen identifies judgment as the primary UX bottleneck: AI generates dozens of design variations, but humans ship the final version. Generative tools amplify output. Judgment determines what gets built.

Research from the Brookings Institution reinforces this: critical reasoning, independent thinking, and collaboration now matter more than technical execution. The work shifted upstream.

Trade-offs reveal your strategic position

AI expands your option set. Strategy is choosing what not to do.

IMD identified five AI trade-offs every business faces, among them speed versus safety, custom versus off-the-shelf, and training workers versus replacing them. Pick wrong and your AI investment becomes expensive theatre.

Most teams handle trade-offs poorly. They say yes to everything, bloat the roadmap, then wonder why nothing ships. Michael Porter’s strategy framework still holds: competitive advantage comes from activities that reinforce each other, and that fit only exists because you explicitly chose not to serve certain customers or solve certain problems.

Your new role: articulate the trade-offs explicitly, get team agreement, then use AI to model the downstream effects of each choice. AI shows you the costs. You own the decision.

Scenario planning before competitors move

Writing got automated. The scarce work now? Thinking through opponent moves before they happen.

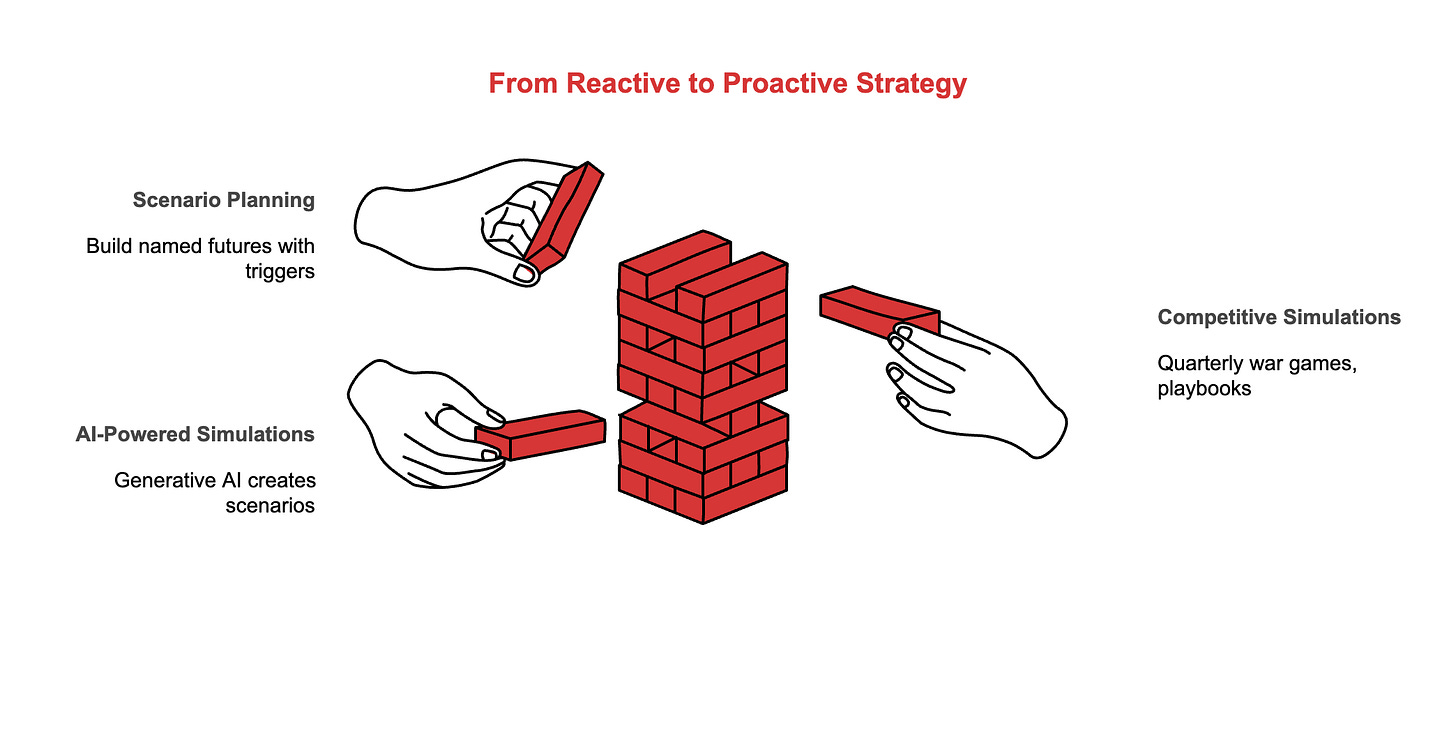

Kate Wade’s scenario discipline: build three named futures with triggers and pre-decided responses. Slow adoption, rapid disruption, regulatory shutdown. When a trigger fires, you have already mapped the counter-moves.
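Wade’s three futures can be written down as data, so a quarterly check shows which trigger has fired and which response the team already agreed. A minimal Python sketch, assuming each trigger is a threshold on a metric you already track; the metric names, thresholds and responses are hypothetical placeholders, not part of Wade’s method.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical signal snapshot: metric name -> latest observed value.
Signals = dict[str, float]


@dataclass
class Scenario:
    """A named future with a trigger condition and pre-decided responses."""
    name: str
    trigger: Callable[[Signals], bool]
    responses: list[str] = field(default_factory=list)


def fired_scenarios(scenarios: list[Scenario], signals: Signals) -> list[Scenario]:
    """Return every scenario whose trigger evaluates true for the current signals."""
    return [s for s in scenarios if s.trigger(signals)]


# Illustrative thresholds only; real triggers come from your own planning work.
# A missing metric defaults to 0.0.
SCENARIOS = [
    Scenario(
        "Slow adoption",
        lambda s: s.get("customer_ai_adoption_pct", 0.0) < 10.0,
        ["Hold pricing", "Invest in onboarding content"],
    ),
    Scenario(
        "Rapid disruption",
        lambda s: s.get("competitor_ai_launches", 0.0) >= 3,
        ["Fast-track AI roadmap", "Reassign two teams to core product"],
    ),
    Scenario(
        "Regulatory shutdown",
        lambda s: s.get("regulatory_actions", 0.0) >= 1,
        ["Pause affected features", "Brief legal and comms"],
    ),
]

if __name__ == "__main__":
    this_quarter = {
        "customer_ai_adoption_pct": 22.0,
        "competitor_ai_launches": 4,
        "regulatory_actions": 0,
    }
    for scenario in fired_scenarios(SCENARIOS, this_quarter):
        print(f"{scenario.name}: {', '.join(scenario.responses)}")
```

Keeping triggers as plain functions means the thresholds live next to the agreed responses, so the quarterly review argues about the numbers rather than rediscovering the plan.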

McKinsey’s workforce planning research recommends quarterly competitive simulations. Assign teams to play rivals, regulators, and customers. Capture their moves in playbooks. This isn’t corporate roleplay. It’s stress-testing your strategy against intelligent opposition.

Workday’s 2025 analysis shows firms running thousands of disruption simulations using generative AI, then layering human judgment about regulatory constraints and talent availability. The machine generates scenarios. Humans pick which to prepare for.

One firm reported reducing strategic blind spots by 40% after implementing quarterly war games. The habit matters more than the specific scenario.

Build judgment through consequence, not theory

Universities struggle to teach judgment because they’re not designed for it. Judgment develops through making real decisions where you own the outcome and learn from the results.

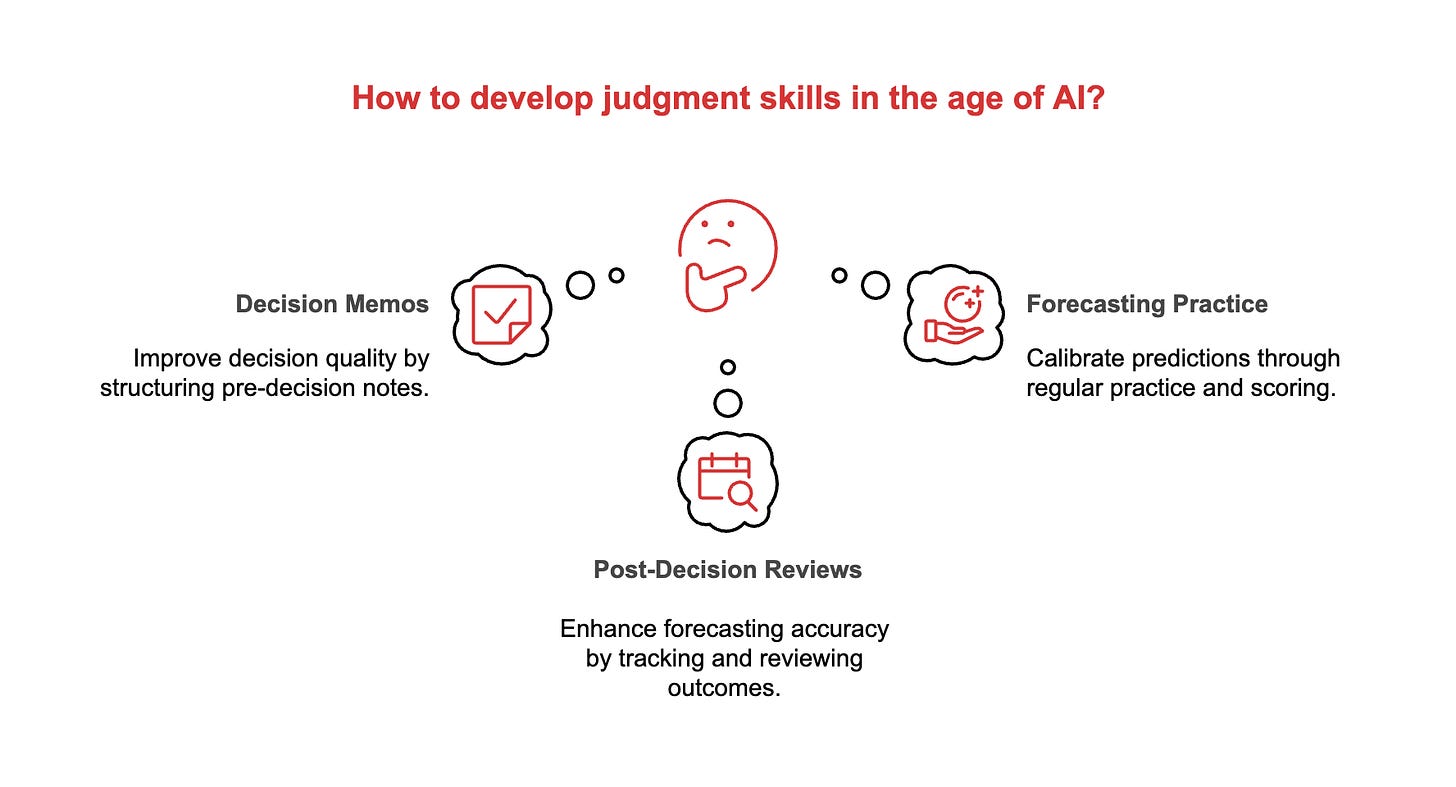

Three practical methods:

Decision memos before meetings. Harvard’s decision quality research shows structured pre-decision notes improve outcomes. One page: problem, options, risks, your recommendation with reasoning. Let AI generate the options and base rates. You assign probabilities and decide.

Post-decision reviews. The Good Judgment Project found that forecasters who track their accuracy improve 30% faster than those who don’t. Log major decisions with expected outcomes. Review quarterly. Adjust your mental models based on what actually happened.

Forecasting practice. Run monthly prediction rounds on business questions. AI provides base rates and arguments. You assign probabilities, keep Brier scores, extract lessons. The scoring matters because it forces calibration.
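The three methods above can share a single record: a compressed decision memo, your probability next to the AI’s base rate, and the outcome filled in at review time. This is a minimal Python sketch, assuming each decision is phrased as a yes/no question so it can be scored; the `Decision` fields, the `quarterly_review` helper and the example entries are hypothetical, not a standard tool. Scoring uses the standard binary Brier score, the mean of (p − o)² over all resolved forecasts, where o is 1 if the event happened and 0 if it didn’t: 0 is perfect, and always answering 50% scores 0.25.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """One logged decision: a compressed decision memo plus its eventual outcome."""
    question: str                   # the problem, phrased so it resolves yes/no
    options: list[str]              # e.g. the options the AI generated
    choice: str                     # your recommendation
    reasoning: str                  # why you chose it, written before the outcome
    ai_base_rate: float             # probability the AI suggested (0 to 1)
    my_probability: float           # your own probability after judgment (0 to 1)
    outcome: Optional[bool] = None  # filled in at the post-decision review

    def __post_init__(self) -> None:
        if self.choice not in self.options:
            raise ValueError("choice must be one of the listed options")
        for p in (self.ai_base_rate, self.my_probability):
            if not 0.0 <= p <= 1.0:
                raise ValueError("probabilities must lie between 0 and 1")


def brier_score(probabilities: list[float], outcomes: list[bool]) -> float:
    """Mean squared gap between forecast probability and outcome (True = 1, False = 0)."""
    if not probabilities or len(probabilities) != len(outcomes):
        raise ValueError("need the same, non-zero number of forecasts and outcomes")
    return sum((p - float(o)) ** 2 for p, o in zip(probabilities, outcomes)) / len(probabilities)


def quarterly_review(log: list[Decision]) -> dict[str, float]:
    """Score resolved decisions: your probabilities versus the AI's base rates.

    Raises ValueError if nothing in the log has resolved yet.
    """
    resolved = [d for d in log if d.outcome is not None]
    outcomes = [bool(d.outcome) for d in resolved]
    return {
        "resolved": float(len(resolved)),
        "my_brier": brier_score([d.my_probability for d in resolved], outcomes),
        "ai_brier": brier_score([d.ai_base_rate for d in resolved], outcomes),
    }


# Illustrative entries only; the numbers are invented to show the mechanics.
EXAMPLE_LOG = [
    Decision("Will the Q3 launch hit 1,000 sign-ups in 60 days?",
             ["Launch in Q3", "Delay to Q1"], "Launch in Q3",
             "Competitor is six months behind; inventory gap is manageable.",
             ai_base_rate=0.6, my_probability=0.8, outcome=True),
    Decision("Will the capability project pay back within a year?",
             ["Fund highest-ROI project", "Fund capability project"], "Fund capability project",
             "Payback is unlikely, but it builds skills we need for the next decade.",
             ai_base_rate=0.5, my_probability=0.3, outcome=False),
    Decision("Will the new hire reach full productivity within 90 days?",
             ["Hire candidate A", "Hire candidate B"], "Hire candidate B",
             "Messy CV, but solved our exact scaling problem elsewhere.",
             ai_base_rate=0.4, my_probability=0.6, outcome=False),
    Decision("Will a regulator restrict our AI feature this year?",
             ["Ship now", "Ship with audit logging"], "Ship with audit logging",
             "Logging is cheap insurance against a likely audit.",
             ai_base_rate=0.2, my_probability=0.3),  # unresolved: skipped in review
]

if __name__ == "__main__":
    for key, value in quarterly_review(EXAMPLE_LOG).items():
        print(f"{key}: {value:.3f}")
```

In this invented log your Brier score (about 0.16) beats the AI’s base rates (0.19) across three resolved decisions. Comparing the two columns each quarter shows where overriding the model helped and where it hurt, which is the calibration habit the scoring is meant to force.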

USC research warns that over-reliance on AI atrophies your decision muscle. The solution isn’t rejecting AI. It’s maintaining the habit of explicit reasoning about why you trust or override the model.

Manage AI like an executive manages staff

Your job title might not say executive. Your actual work increasingly resembles portfolio management.

Sundar Pichai frames it as amplification, not replacement: AI increases what you can accomplish, but you still direct the work, set priorities, and integrate outputs into coherent plans.

Three shifts in mindset:

From task completion to task orchestration. You don’t write the first draft anymore. You structure the brief, review options, edit for strategic fit, then ship. McKinsey’s AI adoption research found power users spend 60% less time on execution and 40% more time on direction and quality control.

From individual contributor to portfolio manager. You maintain multiple AI workflows simultaneously. Each needs rules, quality checks, and periodic retraining. Treat them like direct reports: clear objectives, regular reviews, performance metrics.

From subject matter expert to judgment expert. Your technical knowledge still matters for context. Your judgment about goals, trade-offs, and acceptable risks matters more. Ethan Mollick’s leadership framework recommends that leadership set the rules for safe use, lab teams run controlled experiments, and the organisation then scales what works.

The half-life of technical skills dropped to two years. The half-life of judgment? Your entire career.

Three questions that reveal judgment strength

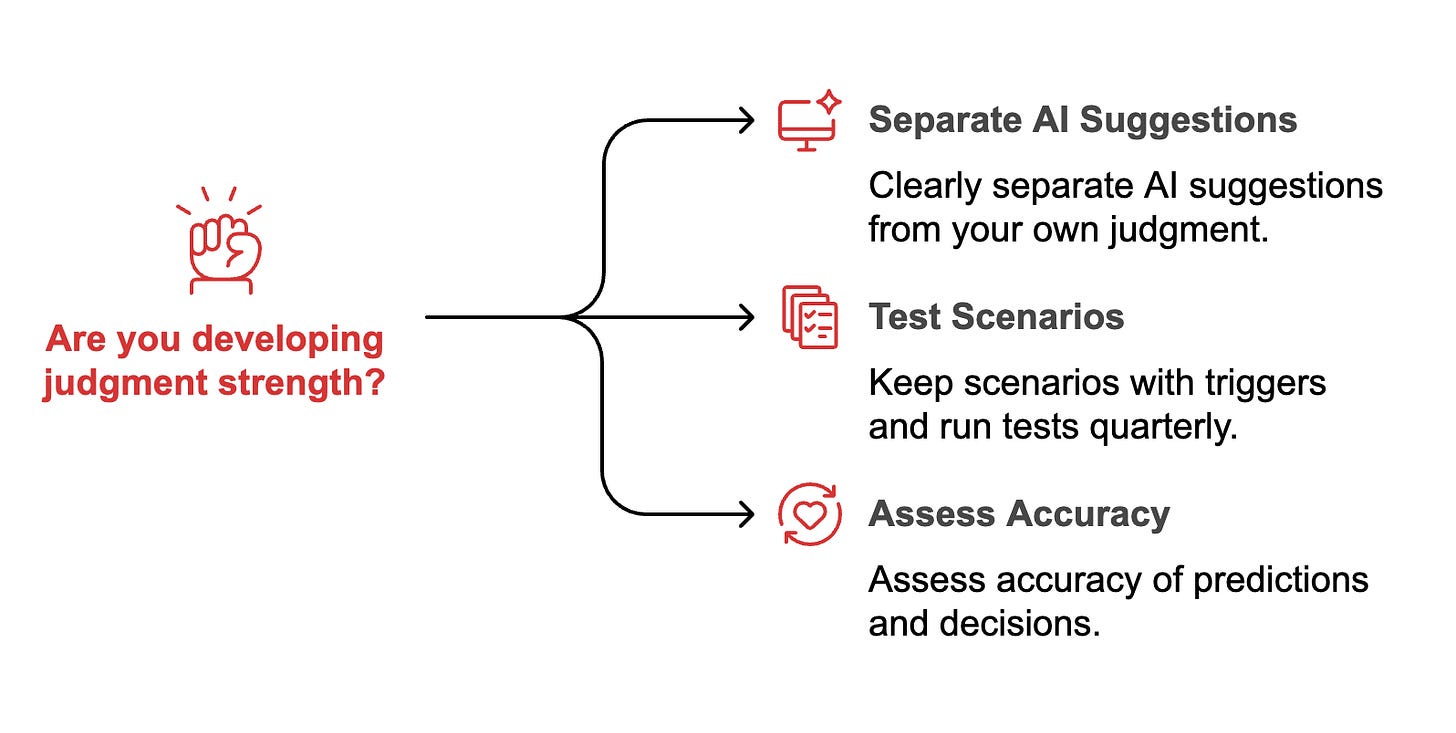

Stop reading now if you want to keep doing things the way you’ve always done them. These questions will help you see whether you’re developing important skills or just doing tasks that AI can already handle better:

When you use AI for work, do you clearly separate what the AI suggests from your own judgment about goals and priorities? Do you write down your reasoning?

Do you keep scenarios with triggers and pre-planned actions, and do you run at least one test or simulation each quarter?

Do you assess how accurate your predictions and decisions are? Do you review what went right or wrong afterward and change your approach based on what you learn?

If you answered no to all three, start with decision memos. Pick an upcoming decision. Let AI suggest options and possible counterpoints. Then make your choice and write down your reasoning. This single habit strengthens the judgment skills that now matter most.

Machines crunch odds. You stake claims. That distinction determines who survives the next decade of AI disruption.

Adapt & Create,

Kamil