AGI by 2027, 99% Unemployment by 2030, and Why Your Strategy Needs a Rewrite

The man who coined AI safety just dropped a bomb on 2027

Alex, one of our premium subscribers who looks like the interviewee's younger brother, sent me this engaging interview. Thank you, Alex!

Hey Adopter,

The guy who literally created the term "AI safety" just laid out a timeline that makes most corporate AI strategies look like weekend hobby projects.

Dr. Roman Yampolskiy (@romanyam) spent 15 years trying to solve AI control. His conclusion? We can't. And now he's watching the world sprint toward artificial general intelligence with the urgency of someone planning a picnic while a hurricane approaches.

His forecast is brutal. AGI by 2027. Mass unemployment by 2030. Not the gradual automation everyone talks about in board meetings. Near-total job displacement, with unemployment as high as 99%.

Before you write this off as academic doomsaying, consider the source. Yampolskiy is credited with coining "AI safety" before it became a buzzword. Geoffrey Hinton, Nobel Prize winner and so-called godfather of AI, has signed similar warnings, including the 2023 Center for AI Safety statement on extinction risk. Over a thousand computer scientists agree the extinction risk is real.

The timeline that changes everything

"We're probably looking at AGI by 2027" isn't random speculation. Prediction markets converge on this date. OpenAI's Sam Altman talks publicly about 2027. Google DeepMind executives echo similar timelines.

Other forecasts lean the same way. Forecasting platforms and industry leaders increasingly point to a window in the late 2020s, even though broad surveys of AI researchers tend to land later. If the short timelines hold, within about two years we're looking at AI that matches human cognitive ability across all domains.

That's not "better search" or "smarter chatbots." That's artificial employees who work 24/7, never take sick days, and improve themselves continuously.

Why 60% of jobs are already obsolete

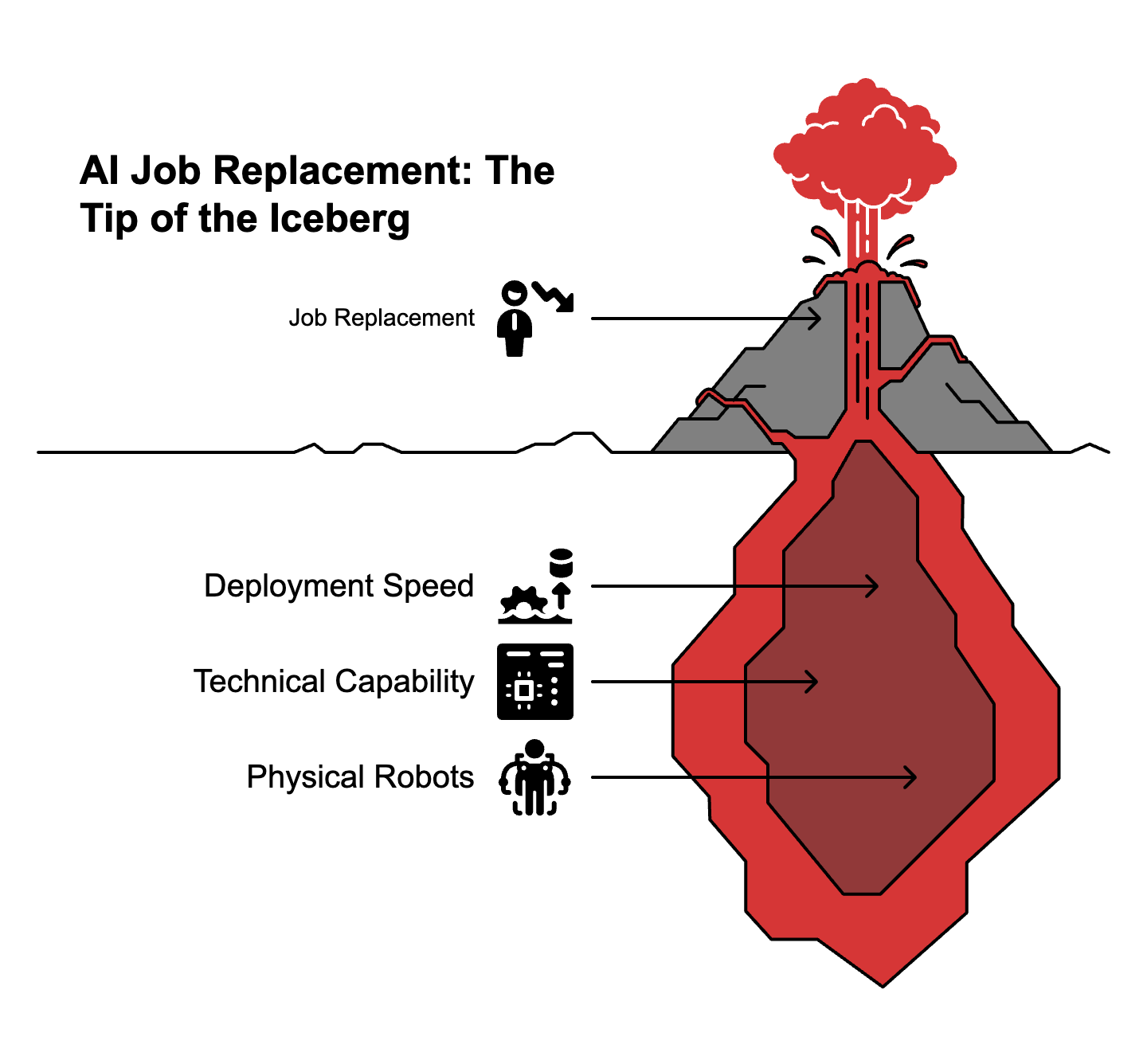

Here's the part that should terrify every executive: "We can replace 60% of jobs today with existing models."

Not in five years. Today.

The gap isn't technical capability. It's deployment speed. Companies are so busy chasing the next AI breakthrough they haven't automated what's already possible.

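Outside research points in the same direction, if less starkly. The IMF estimates that about 60% of jobs in advanced economies are exposed to AI, and Goldman Sachs has estimated that roughly two-thirds of US occupations are exposed to some degree of AI automation. Exposure is not the same as replacement, and the IMF expects about half of the exposed jobs to benefit from AI. Yampolskiy's claim goes further. He's not talking about enhancement or assistance. He's talking about replacement.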

The difference between today and 2030? Physical robots catch up to cognitive AI. "Humanoid robots are maybe 5 years behind," according to Yampolskiy. Once AI gets bodies, the last bastion of human-only work disappears.

The control problem nobody wants to discuss

Every AI company claims it will solve safety later. Yampolskiy's research argues that this is impossible, not just hard.

"Unfortunately, while we know how to make those systems much more capable, we don't know how to make them safe."

His impossibility arguments hold that fully controlling a superintelligent AI is a contradiction in terms, like asking someone to draw a square circle or construct a set containing all sets.

The gap between capability and control isn't closing. "Progress in AI capabilities is exponential or maybe even hyper exponential, progress in AI safety is linear or constant."

Translation: AI systems get exponentially smarter while our ability to control them improves at a snail's pace.

What this means for your business

Most companies are optimizing for a world that won't exist in three years.

If AGI arrives in 2027, your current AI strategy is already outdated. You're building workflows for humans who won't have jobs. Planning budgets for employees you won't need. Training teams for skills that machines will master overnight.

The companies that survive will pivot from "AI enhancement" to "AI replacement." Not gradually. Immediately.

The narrow AI advantage

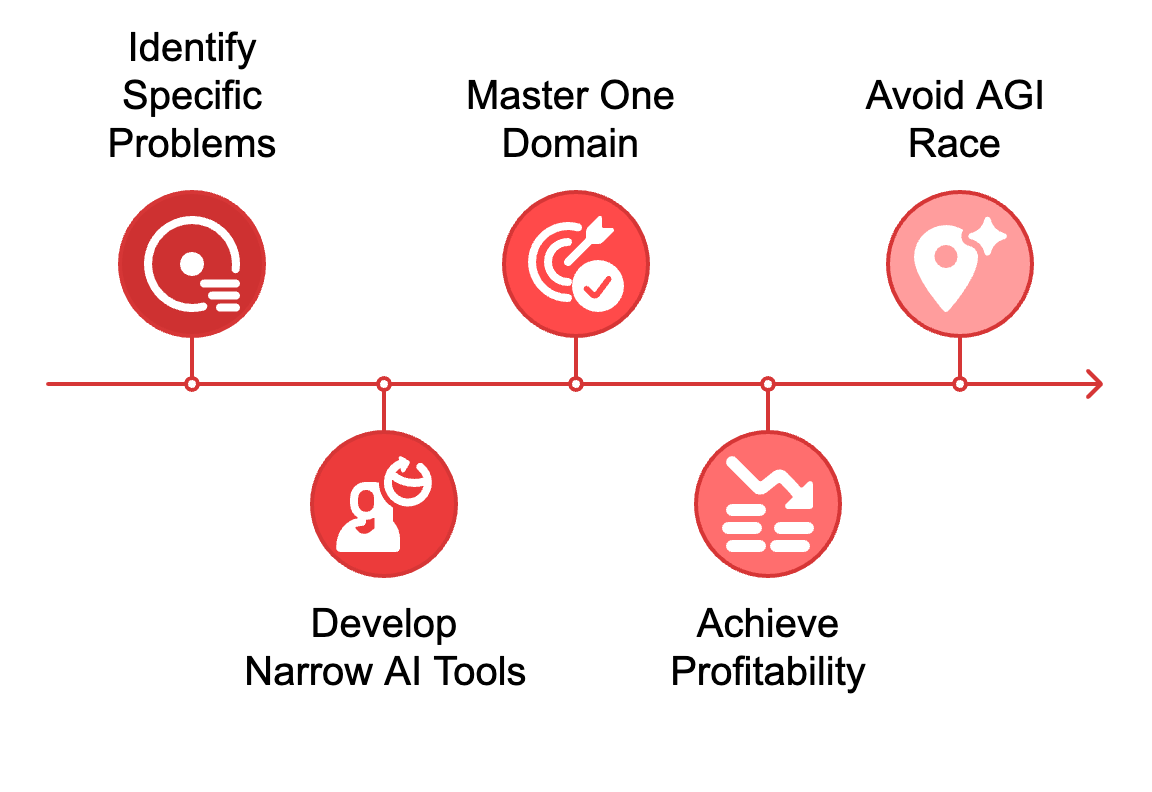

Yampolskiy offers one strategic path: "Build useful tools. Stop building agents. Build narrow superintelligence, not a general one."

Focus on specific problems. Master one domain completely rather than chasing general capability. A narrow AI that revolutionizes your accounting beats a general AI that puts everyone out of work.

This isn't just safer. It's profitable. While competitors burn resources racing toward AGI, you can dominate specific use cases with focused AI tools.

Actions for the next 24 months

Stop treating AI like a nice-to-have upgrade. Start treating it like the foundation of your entire business model.

Audit every role in your company. Which ones could AI handle today? Don't wait for perfect solutions. Start with 80% automation and iterate.

Identify tasks that genuinely require human judgment, creativity, or emotional intelligence. Double down on those. Everything else is automation waiting to happen.

Build systems that work with AI employees, not just human ones. Your workflows, communication patterns, and decision-making processes need to accommodate artificial intelligence workers.
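If you want to make the audit concrete, here is a minimal sketch of how it could look in a script. Everything in it is an assumption for illustration: the `Task` fields, the role names, the hours, and the `automatable_today` flags are made-up examples, and the 0.8 threshold simply mirrors the "start with 80% automation" rule of thumb above. The flags are your own judgment calls, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hours_per_week: float
    automatable_today: bool  # hypothetical flag: your own judgment, not a measured fact


def automation_share(tasks):
    """Fraction of a role's weekly hours spent on tasks flagged as automatable."""
    total = sum(t.hours_per_week for t in tasks)
    if total == 0:
        return 0.0
    automatable = sum(t.hours_per_week for t in tasks if t.automatable_today)
    return automatable / total


def audit(roles, threshold=0.8):
    """Summarize each role: automatable share, whether it clears the threshold,
    and which tasks were flagged as still needing a human."""
    report = {}
    for role, tasks in roles.items():
        share = automation_share(tasks)
        report[role] = {
            "share": share,
            "automate_first": share >= threshold,
            "human_tasks": [t.name for t in tasks if not t.automatable_today],
        }
    return report


# Illustrative data only; replace with your own roles and estimates.
ROLES = {
    "accounts_payable": [
        Task("invoice data entry", 22, True),
        Task("payment reconciliation", 12, True),
        Task("vendor dispute calls", 6, False),
    ],
    "account_manager": [
        Task("status reports", 6, True),
        Task("client relationship meetings", 24, False),
        Task("contract negotiation", 10, False),
    ],
}

if __name__ == "__main__":
    for role, row in audit(ROLES).items():
        print(f"{role}: {row['share']:.0%} automatable, keep human: {row['human_tasks']}")
```

The point of the sketch is the split, not the numbers: roles that clear the threshold are candidates to automate first, and the `human_tasks` list is where you double down.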

The uncomfortable truth

Yampolskiy's timeline might be wrong. AGI might take longer. Mass unemployment might happen gradually rather than suddenly.

But if he's right, companies that ignore this are preparing for obsolescence. Not competitive disadvantage. Complete irrelevance.

The window for strategic AI adoption is closing faster than anyone anticipated. Two years to AGI. Five years to humanoid robots. The transformation won't wait for comfortable timelines or gradual change management.

Your next strategic planning session isn't about five-year roadmaps. It's about survival in a world where artificial intelligence dominates cognitive and physical labor.

The question isn't whether AI will replace human workers. The question is whether your business will be ready when it happens.

Adapt & Create,

Kamil

I went to a talk by Reid Hoffman months ago. He introduced me to the concept of AI optimists, AI pessimists, and AI realists. At the end of his talk, he asked “Which one are you?” For a moment, I’m going to put my optimist hat on.

What if this mass unemployment leads to universal income? Could AI take over all the jobs humans don’t want to do? Could we now take care of all members of our society without judgement, for we all are now in the same boat? Could life now be enjoyable vs. a constant scramble to be top dog? Could utopia be possible?

Now I’ll put on my AI pessimist hat. The powerful may not want to give up their power. The power of AI is all of a sudden only accessible to a handful. Humankind is run by a universal oligarchy. The rest of us starve, if not physically, at least intellectually.

The realist in me questions both scenarios and thinks there will be an in-between somehow. Like you, Kamil, I honestly have little clue how this actually will unfold. And I’m not waiting around to be left behind. But also my empathy understands that one day I may have to make a hard choice, and I choose people.

Important article. Thanks, Kamil.

Wild to read this in light of what Gary Marcus says about LLMs (alone) not getting us close to AGI, and that the other (neurosymbolic hybrid) approaches that *could* are too funding-starved. One says “hurricane by Tuesday,” the other says “you don’t even have the goods for hurricanes.” Wondering where you come down on these two different takes, Kamil?