3 Ways to Spot Fake Photos at Work

Three forensic checks that protect your professional reputation

Hey Adopter,

Last month, a senior military officer received a social media message claiming four soldiers had been captured. The demand was simple: meet our terms in ten minutes or they die. The only proof? A single grainy photograph.

That photo was completely fabricated by AI.

Hany Farid, a digital forensics expert with three decades of experience, now receives calls like this daily. What used to be monthly requests from courts and governments has exploded into constant verification work, for an obvious reason: AI can now generate almost any image you can describe in seconds.

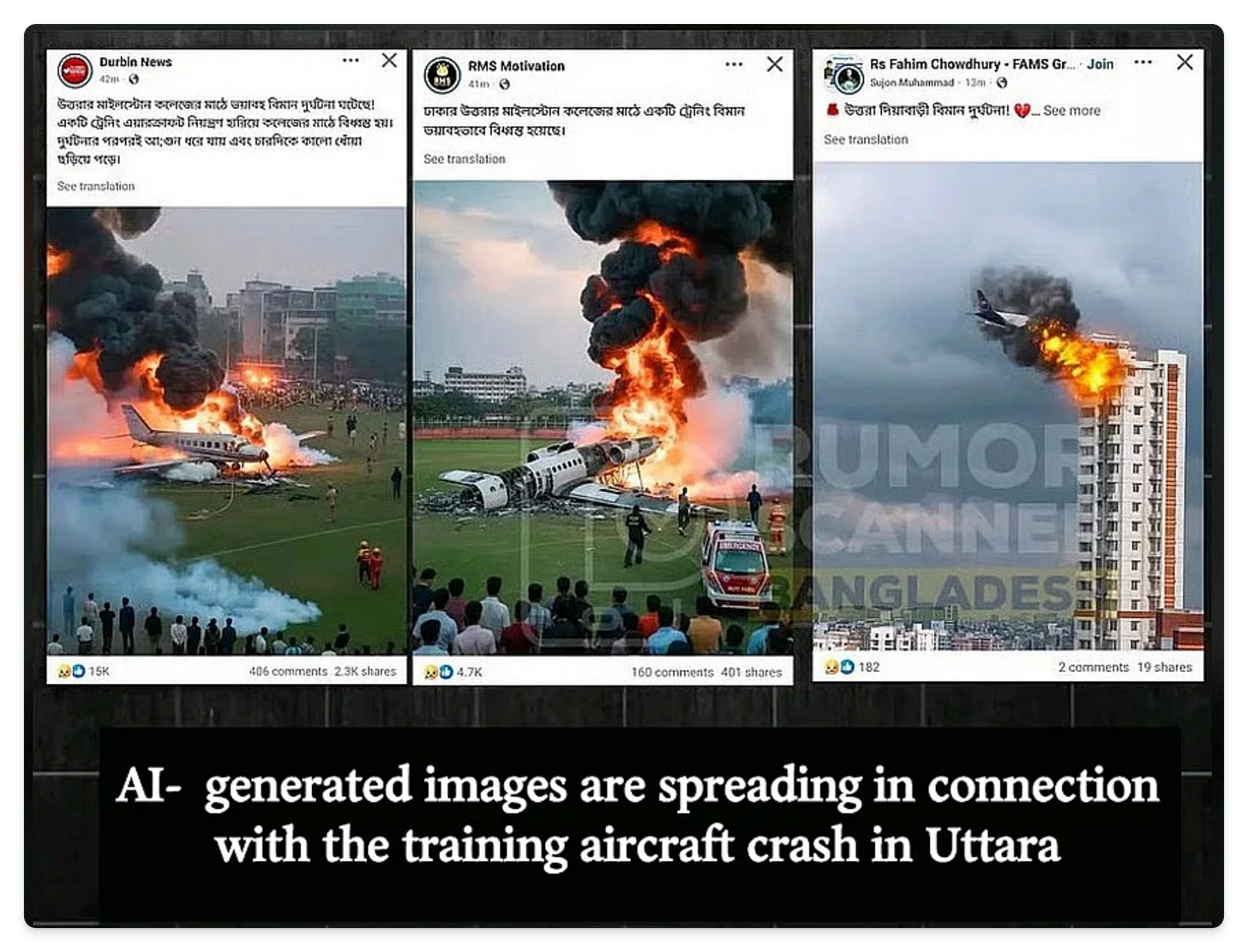

Your colleagues are forwarding these fakes in Slack channels. Your clients are basing decisions on synthetic evidence. Your boss just shared that "breaking news" photo that never actually happened.

The stakes extend far beyond embarrassment.

Why smart professionals verify first

Companies are losing millions to AI-generated CEO impersonators. Medical misinformation spreads through fabricated imagery. Even your quarterly presentations could accidentally include fake supporting visuals pulled from image searches.

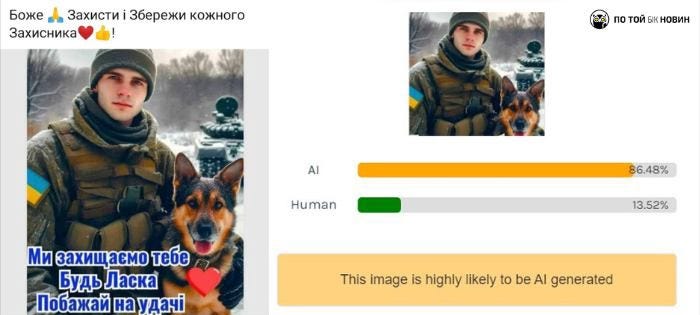

Social media platforms prioritize engagement over accuracy. Research shows that on platforms like Twitter, fake content can approach 50% of all images shared. Each share multiplies the damage.

But here's what most people miss: AI-generated images still leave mathematical and physical fingerprints that a trained eye, or a little analysis, can spot. The technology that creates these fakes also betrays them.

Three forensic techniques you can learn today

Professional forensic analysts use sophisticated tools, but the core principles work with basic image editing software. Master these three checks and you'll never be the person who shared obvious fakes in the company chat.

Check one: examine the noise patterns

Natural photographs capture light hitting camera sensors. This process creates random electronic noise throughout the image. AI generation works completely differently, building images from statistical patterns learned from training data.

Extract the residual noise from an AI image (what remains after smoothing away the picture's content), run it through a Fourier transform, and distinct "star-like" patterns often emerge. Real photos show diffuse, random noise. Synthetic images can reveal geometric artifacts that follow the mathematical structure of their generation process.
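To make the residual-plus-Fourier idea concrete, here is a minimal sketch using only NumPy. It assumes a grayscale image as a 2D array, uses a simple 3x3 box blur as the "smoothing" step (real forensic tools use far better denoisers), and adds a hypothetical `peak_score` heuristic that is purely illustrative, not an established detector.

```python
import numpy as np

def noise_residual(gray: np.ndarray) -> np.ndarray:
    """Keep only fine-grained noise: the image minus a 3x3 box-blurred copy."""
    img = gray.astype(np.float64)
    h, w = img.shape
    padded = np.pad(img, 1, mode="reflect")
    # Average the 9 shifted copies of the image (a simple 3x3 box blur).
    blurred = sum(
        padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
    ) / 9.0
    return img - blurred

def residual_spectrum(gray: np.ndarray) -> np.ndarray:
    """Log-magnitude 2D Fourier spectrum of the residual, zero frequency centered."""
    spectrum = np.fft.fftshift(np.fft.fft2(noise_residual(gray)))
    return np.log1p(np.abs(spectrum))

def peak_score(spectrum: np.ndarray) -> float:
    """Hypothetical heuristic: strongest frequency relative to the median one.
    Diffuse, sensor-like noise scores low; sharp periodic peaks score higher."""
    return float(spectrum.max() / np.median(spectrum))
```

With Pillow installed, `np.asarray(Image.open("suspect.jpg").convert("L"))` produces a grayscale array you can pass in (the filename is a placeholder). Plotting `residual_spectrum` as an image is what reveals the star-like structure. Treat a high score as a prompt for a closer look, not a verdict: JPEG compression works on 8x8 pixel blocks and can add periodic peaks of its own.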

You don't need specialized software for basic checks. Open any suspect image in a photo editor and examine fine details at maximum zoom. Look for subtle inconsistencies in grain patterns, especially in areas that should have uniform texture like skin or sky.

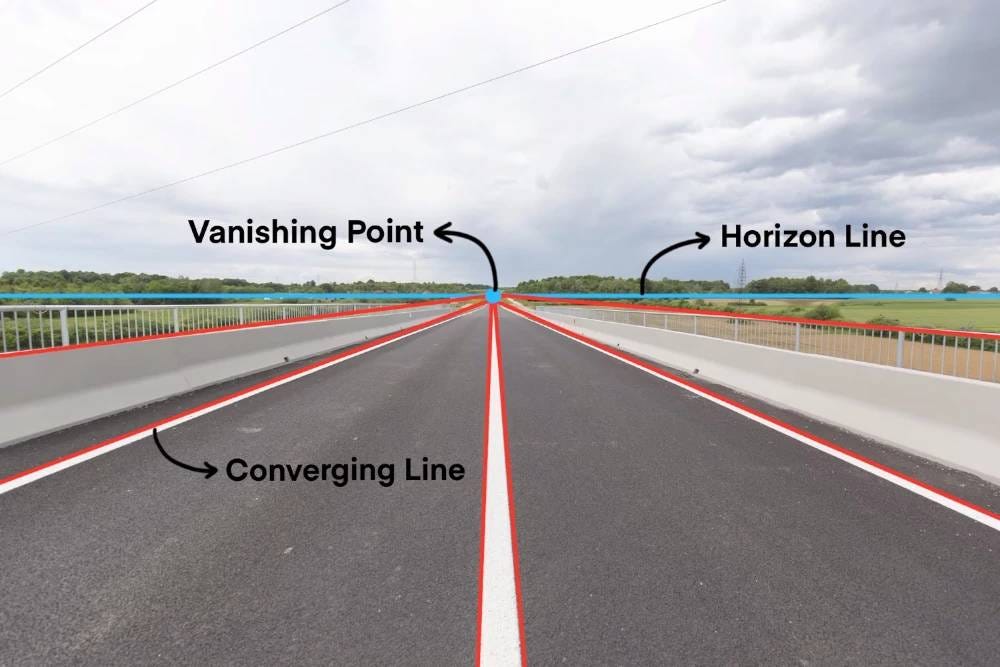

Check two: trace the vanishing points

Artists learned this rule centuries ago: lines that are parallel in the three-dimensional world converge to a single vanishing point in a picture, and horizontal ones meet on the horizon. Railroad tracks, building edges, table corners. The geometry of perspective demands consistency.

AI lacks true spatial understanding. It paints pixels based on pattern recognition, not geometric rules. The result? Images where parallel lines refuse to meet at common vanishing points.

Import any suspicious photo into a drawing program. Trace the major architectural lines that should be parallel and extend them across the image. In authentic photos, each set of parallel lines meets at a single vanishing point. In AI fakes, they often miss each other or create impossible geometries.
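The tracing step can also be done numerically. This is a sketch under simple assumptions: each traced edge is given as two hand-picked (x, y) pixel points, the common vanishing point is estimated with an algebraic least-squares fit (a common simplification, not a full geometric fit), and the function names are hypothetical.

```python
import numpy as np

def line_through(p, q):
    """Homogeneous line through two image points (x, y)."""
    return np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])

def best_vanishing_point(segments):
    """Algebraic least-squares common point of the extended segments, via SVD."""
    lines = np.array([line_through(p, q) for p, q in segments])
    # Normalize so that line . (x, y, 1) is a signed pixel distance.
    lines /= np.linalg.norm(lines[:, :2], axis=1, keepdims=True)
    _, _, vt = np.linalg.svd(lines)
    v = vt[-1]
    return v[:2] / v[2]  # assumes the point is finite, not at infinity

def max_miss_distance(segments, point):
    """Largest perpendicular distance (pixels) from `point` to any extended line."""
    x = np.array([point[0], point[1], 1.0])
    dists = []
    for p, q in segments:
        l = line_through(p, q)
        dists.append(abs(l @ x) / np.hypot(l[0], l[1]))
    return max(dists)
```

Three edges that truly converge give a miss distance of essentially zero; nudge one endpoint and the lines can no longer share a point. With real photos, lens distortion and hand-placed endpoints mean the lines rarely meet exactly, so judge the miss distance against the image size rather than expecting zero.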

Farid's analysis of the fake soldiers photo revealed wall and ceiling lines that violated basic perspective rules. The basement that looked convincing at first glance became obviously synthetic when measured against geometric reality.

Check three: follow the shadows

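Every shadow tells a story about light sources. In natural scenes lit by the sun or a single lamp, you can draw a line from a point on any shadow through the matching point on the object that casts it, and extend it toward the light. Those lines must all intersect at one point: the light's position in the image, which may lie outside the frame.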

AI struggles with this physics constraint. Shadow analysis reveals inconsistent lighting that exposes synthetic origins. One object might cast shadows suggesting sunlight from the east while another object in the same scene shows shadows from overhead lighting.

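The technique is simple. Pick at least three distinct objects with clear shadows in the image. For each, draw a line from the shadow's tip through the corresponding point on the object (for example, from the tip of a post's shadow through the top of the post). Any two lines will cross somewhere, which is why a third helps: if the lines fail to meet at a single point, or meet at an implausible light source location, you are probably looking at a fake.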
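Here is a sketch of the same check in code, assuming you have already picked each (shadow tip, object point) pair by hand as pixel coordinates. It intersects every pair of lines and reports how far apart those intersections are; `light_spread` is a hypothetical helper name, and the sketch does not test whether the shared point is physically plausible.

```python
import itertools
import numpy as np

def intersect(a, b):
    """Intersection of two infinite lines, each given as a pair of (x, y) points."""
    l1 = np.cross([*a[0], 1.0], [*a[1], 1.0])
    l2 = np.cross([*b[0], 1.0], [*b[1], 1.0])
    x = np.cross(l1, l2)
    if abs(x[2]) < 1e-12:
        return None  # parallel lines: no finite intersection
    return x[:2] / x[2]

def light_spread(shadow_lines):
    """Max distance (pixels) between pairwise intersections of the
    shadow-tip-to-object lines. Near 0 means one consistent light position."""
    points = [intersect(a, b) for a, b in itertools.combinations(shadow_lines, 2)]
    points = [p for p in points if p is not None]
    return max(
        (float(np.linalg.norm(p - q)) for p, q in itertools.combinations(points, 2)),
        default=0.0,
    )
```

Each entry is `((shadow_x, shadow_y), (object_x, object_y))`. If three objects agree on one light, the spread is close to zero; if one object's shadow points elsewhere, the spread jumps by tens or hundreds of pixels.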

The metadata reality check

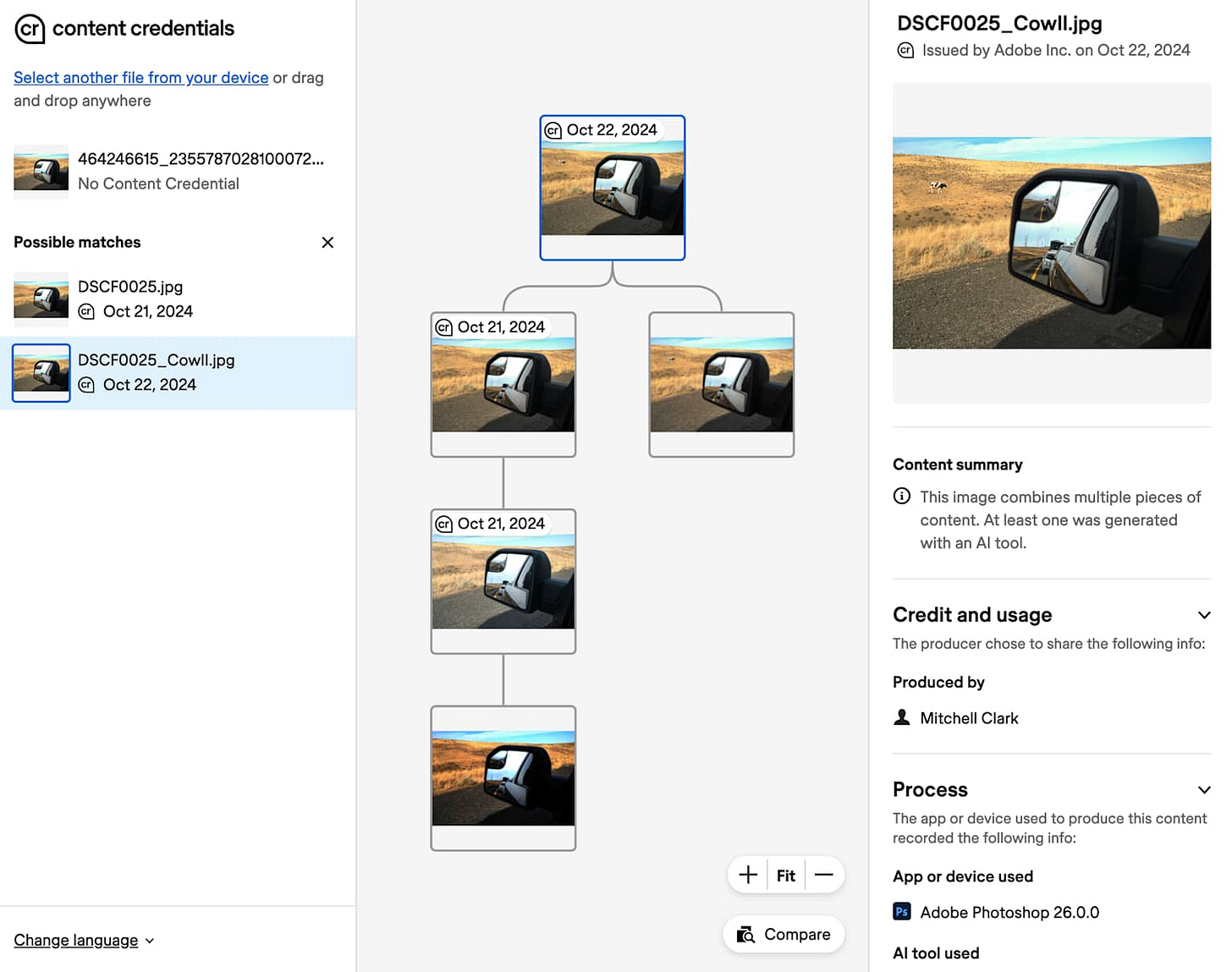

Modern authentication goes beyond visual analysis. The Content Authenticity Initiative, together with the Coalition for Content Provenance and Authenticity (C2PA), backs an open standard that uses cryptographic signatures to embed tamper-evident credentials into images at creation.

These "content credentials" function like digital birth certificates. They record who created the image, what edits were made, and whether AI was involved in generation. Professional cameras and editing software increasingly support this standard.

Visit contentcredentials.org/verify and drag any image file onto their checker. Photos from participating cameras and platforms will display their recorded creation and editing history. Missing credentials don't prove fakery, and valid credentials don't prove a scene is real: they confirm where the image came from and how it was edited, including whether AI generated it.

Social media platforms often strip this metadata during upload, which creates verification challenges. Still, when imagery comes from a source that normally attaches credentials and they are missing, treat that as a reason to look more closely.
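To see what "embedded" and "stripped" mean at the file level, here is a rough sketch for JPEG files only. It relies on our reading of the C2PA specification, which stores the manifest in APP11 marker segments as JUMBF boxes labeled `c2pa`; the helper name is hypothetical. It only reports whether such a segment appears to be present. It does not validate any signature, so use the official checker (or the open-source `c2patool`) for actual verification.

```python
import struct

def has_c2pa_segment(data: bytes) -> bool:
    """Hypothetical helper: True if a JPEG seems to carry a C2PA manifest.
    Presence check only; signatures are NOT verified."""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    i = 2
    while i + 4 <= len(data):
        if data[i] != 0xFF:
            break  # lost marker sync; a sketch just stops here
        marker = data[i + 1]
        if marker in (0xD9, 0xDA):  # end of image or start of scan: metadata is over
            break
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[i + 2:i + 4])
        payload = data[i + 4:i + 2 + length]
        if marker == 0xEB and b"c2pa" in payload:  # APP11 segment with a c2pa label
            return True
        i += 2 + length
    return False
```

Usage is `has_c2pa_segment(open("photo.jpg", "rb").read())`, with a placeholder filename. Running it on an original and on a copy downloaded from a social platform is a quick way to see whether the upload stripped the credentials.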

Building verification habits that matter

You can't forensically analyze every image that crosses your screen. Focus your attention where it counts most.

Pause before forwarding breaking news images. Run basic reverse image searches using Google's image search to check if the photo appears in credible news sources. Look for contextual red flags like emotional manipulation, political timing, or sources you don't recognize.

When client presentations include supporting imagery, verify the sources. That powerful case study photo could be synthetic. Your quarterly report shouldn't accidentally promote fabricated evidence to stakeholders.

Train your team to approach viral images with healthy skepticism. The most shareable content often carries the highest misinformation risk. Create simple verification protocols before company social media accounts amplify questionable imagery.

The arms race accelerates

Detection methods improve, but so do generation techniques. New AI models reduce some visual artifacts that current forensic tools rely on. Hybrid approaches that edit real photos with AI elements complicate analysis further.

The solution isn't perfect technical detection. It's building organizational cultures that value verification over viral sharing. Companies that prioritize accuracy over speed in their information sharing will maintain credibility while competitors fall for obvious fakes.

Professional forensic tools are becoming available to newsrooms and legal teams. These specialized systems combine multiple detection methods for higher confidence verdicts. But the basic principles remain accessible to anyone willing to look more carefully at the images they encounter.

The age of synthetic media

Most professionals remain oblivious to the scale of synthetic imagery flooding their information streams. They share without questioning. They trust without verifying. They build presentations on fabricated foundations.

You now understand the mathematical signatures that expose AI fakes. You know the geometric rules that synthetic images violate. You can trace shadows that reveal impossible lighting. You understand how content credentials provide cryptographic proof of an image's origin and edit history.

This knowledge makes you valuable to every organization you touch. Clients need advisors who won't be fooled by synthetic evidence. Teams need leaders who can spot misinformation before it damages reputations. Companies need professionals who understand the new reality of digital trust.

The next time a suspicious image lands in your inbox, you'll know exactly what to check. Your colleagues will forward fakes. Your competitors will fall for obvious scams. You'll be the person who caught the fake before it fooled everyone else.

That's the kind of professional people remember.

Adapt & Create,

Kamil

The scenario described here comes from Hany Farid’s TED talk in April. It was a scenario, a work of fiction, putting the audience in the role of a senior military official. It did not happen.

Your post, however, shows how easily people repost fiction as truth. This is not new, and it is not exclusive to AI content. However, it is rampant. The irony of a post that begins with fiction presented as truth while purportedly advising others on how to spot fakes is hilarious.

At the end of the talk, Farid gives a few bits of advice. Perhaps we should all pay attention to this one: “Understand that when you share false or misleading information, intentionally or not, you're all part of the problem. Don't be part of the problem.”